

June 18, 2020

Topics: Cloud Volumes ONTAP, Cloud Storage, Google Cloud, Advanced, Performance | 7 minute read

Google Cloud Persistent Disk is the built-in block storage service among the Google Cloud storage options. It is a fundamental building block that provides virtual instances with persistent disks and overall basic data management capabilities.

The storage performance of a Google Cloud persistent disk can vary greatly depending on its configuration and the storage options in use. When creating an instance and evaluating the Google Cloud storage options, you can choose among three disk types:

- Standard is for processing workloads that primarily use sequential I/Os.

- SSD is for better performance with single-digit millisecond latencies.

- Local SSDs offer lower latency but no redundancy, and their data is only available during the lifetime of the instance.

When it comes down to the configuration of a persistent disk, the key performance factors we want to influence are the IOPS (Input/Output Operations per Second) and throughput.

In this blog post we are going to explain how IOPS works in Google Cloud and describe the steps involved in creating a Google Cloud persistent disk with provisioned IOPS.

IOPS and Storage Performance

There are many aspects to consider when storing data in the cloud. Characteristics such as size, location, and growth capabilities are a few that first come to mind. All these characteristics are of course important and can influence your overall storage performance. And as engineers know well, cloud storage performance is paramount.

A poorly chosen storage type and configuration can drag the health of an entire system down.

In the end, the performance of Google Cloud block storage boils down to how many IOPS and how much throughput the persistent disk has available.

What Is IOPS?

IOPS, or Input/Output Operations per Second, is a unit of measurement for storage device performance. The number of IOPS is greatly influenced by the access pattern (sequential or random), in other words, by the way we read data from or write data to the disk. As a result, in real-world applications it is often hard to calculate or predict the minimum number of IOPS required. When a workload involves small I/Os (from 4 KB to 16 KB), the number of random IOPS is usually the main limiting factor in storage performance.
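IOPS and throughput are linked through the I/O size: throughput is the number of operations per second multiplied by the size of each operation. The sketch below only illustrates that arithmetic; the function name `throughput_kib` and the sample figures are made up for the example and are not benchmark results.

```shell
# throughput (KiB/s) = IOPS x I/O size (KiB)
throughput_kib() {
  local iops=$1 block_kib=$2
  echo $(( iops * block_kib ))
}

# Illustrative values only: 1,000 operations per second at two I/O sizes.
echo "4 KiB I/O:   $(throughput_kib 1000 4) KiB/s"    # 4000 KiB/s
echo "256 KiB I/O: $(throughput_kib 1000 256) KiB/s"  # 256000 KiB/s
```

The same number of IOPS moves far less data with small I/Os, which is why small random workloads tend to be limited by IOPS rather than by throughput.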

IOPS and Google Cloud Persistent Disks

When purchasing a physical hard disk, the manufacturer will indicate the expected number of IOPS the device is capable of producing. Similarly, when creating a cloud-based persistent disk, it is important to know the expected number of IOPS. Storage in Google Cloud works in such a way that the larger the persistent disk, the more IOPS you can expect.

It is not possible to enter the desired number of IOPS directly. Creating a persistent disk with provisioned IOPS is therefore a bit tricky and involves a combination of the persistent disk type (Standard or SSD), the machine type, and the disk size. The machine type imposes per-instance limits and therefore requires careful selection when optimizing storage performance.

Using a standard persistent disk, we can achieve up to 7,500 read and 15,000 write IOPS when the disk is larger than 10 TB. With SSD persistent disks, we can get up to 100,000 read and 30,000 write IOPS for disks of 4 TB or more. It’s also important to note that when multiple disks are attached to the same virtual instance, the performance limits are shared between all the attached disks. Take a look at this complete table in the Google Cloud documentation to get some guidance when creating persistent disks with provisioned IOPS.
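The relationship between size and IOPS can be sketched as a linear rate with a ceiling. The function below is only an approximation under that assumption: the helper `estimate_iops` is hypothetical, and the rate of 0.75 read IOPS per GB is inferred from the 7,500 read IOPS ceiling being reached at around 10 TB, so take the actual per-GB figures from the documentation table. Measured values can also differ from such an estimate.

```shell
# Estimate a disk's IOPS limit: scale with size, then apply the ceiling.
#   $1 = disk size in GB
#   $2 = assumed rate in thousandths of an IOPS per GB (750 = 0.75 IOPS/GB)
#   $3 = maximum IOPS for the disk type
estimate_iops() {
  local size_gb=$1 rate_milli=$2 cap=$3
  local scaled=$(( size_gb * rate_milli / 1000 ))
  if [ "$scaled" -gt "$cap" ]; then
    echo "$cap"
  else
    echo "$scaled"
  fi
}

# Assumed rate: 0.75 read IOPS per GB for a standard persistent disk.
estimate_iops 1000 750 7500    # 1 TB disk  -> 750
estimate_iops 20000 750 7500   # 20 TB disk -> 7500 (capped)
```

Integer arithmetic keeps the sketch portable across shells; the per-instance limits of the machine type are not modeled here.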

When it comes to IOPS and throughput, the higher the number, the better the performance. However, because the disk type and size dictate the expected number of IOPS, a persistent disk with a high number of IOPS can be quite expensive: the higher the number, the more it is going to cost you.

These cost factors make it important to select the proper combination for your use case. Since workloads do not come with a fixed minimum IOPS requirement, we should focus our persistent disk investment on use cases that benefit from higher IOPS and throughput. Typical use cases are relational databases such as MySQL, Oracle DB, and MS SQL Server, and big data solutions such as Hadoop and Spark. These cases are truly demanding and are often used to benchmark storage performance.

Steps to Create a GCP Persistent Disk with Provisioned IOPS

Step 1: Create Two New Instances

Our goal is to have two persistent disks whose storage performance we can compare, so we will start by creating two Google Cloud instances. Creating the instances is simple: use the default settings and follow the same process as the one described in this blog post.
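For readers who prefer the command line, the same step can be sketched with the `gcloud` CLI. This is a minimal sketch, not the procedure used in this walkthrough: the function name `create_test_instances` and the default zone are assumptions, and every setting other than the zone is left at its default.

```shell
# Create both test instances with default settings.
# The zone is a placeholder; use the zone where the disks will be created.
create_test_instances() {
  local zone=${1:-us-central1-a}
  gcloud compute instances create instance-1 instance-2 --zone="$zone"
}
```

Calling `create_test_instances europe-west1-b` would create both instances in that zone.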

Step 2: Create Two Persistent Disks with Different IOPS

We will create two persistent disks with provisioned IOPS by using different sizes (200 GB and 600 GB). The expected number of IOPS and its relation to the disk size can be found here.

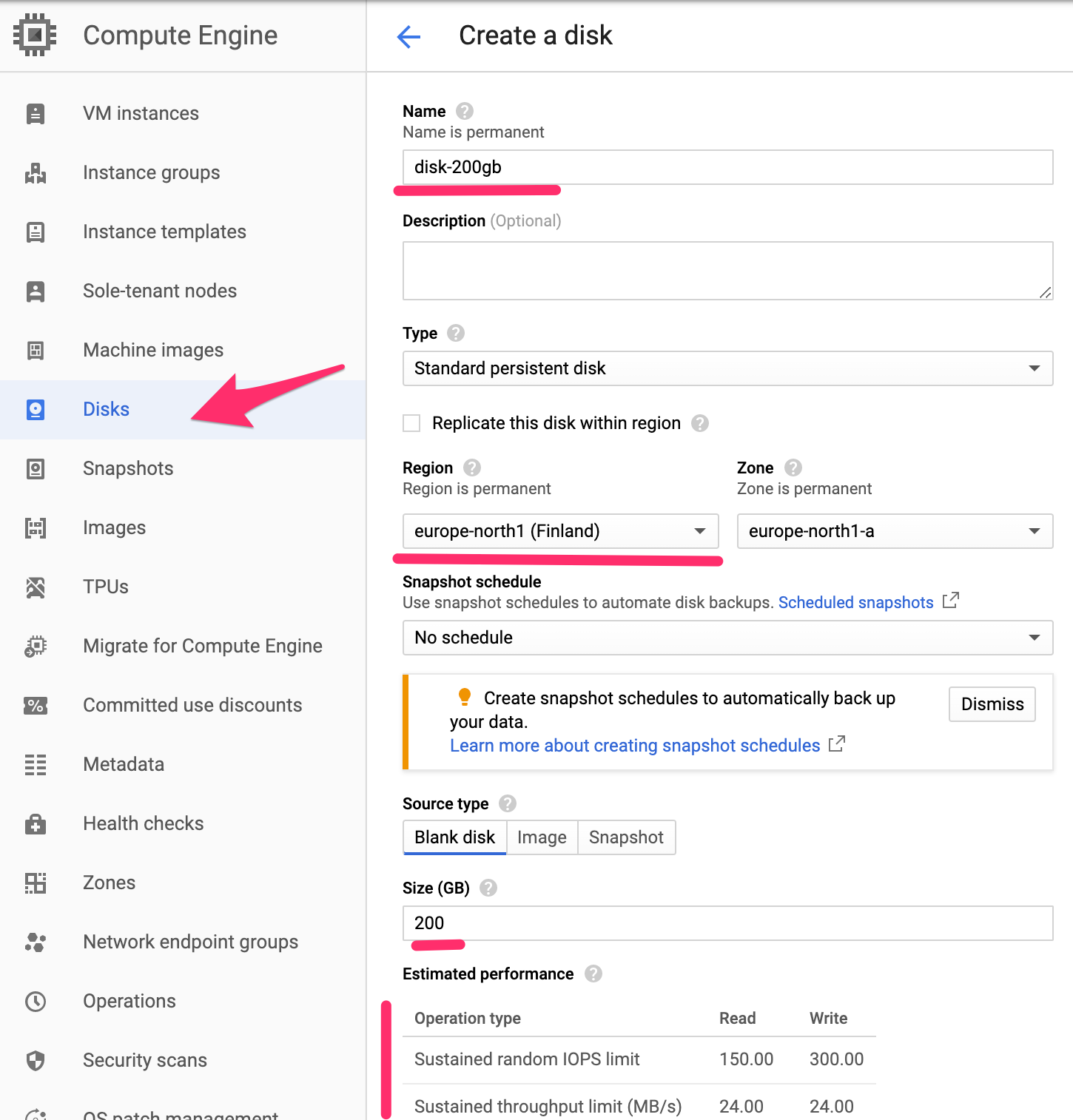

1. In Google Cloud Compute Engine, navigate to the Disks section and press the “Create Disk” button to initiate the process.

2. Fill in the persistent disk information such as a unique name, a region (same as the instances), and size (200 GB). Note the generated information regarding the expected performance metrics such as IOPS and throughput.

Creation panel of a new Compute Engine Persistent Disk.

3. Create a second persistent disk with a size of 600 GB by repeating steps 1 and 2 above.

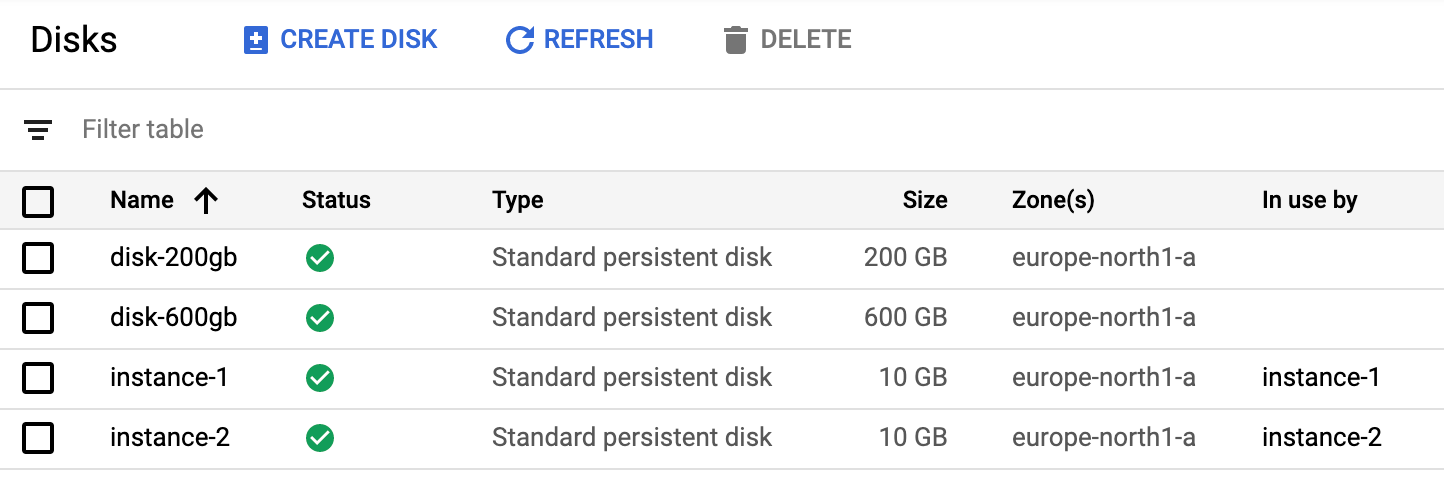

4. The newly created disks should now appear as available in the list of Compute Engine Persistent Disks.

Compute Engine Persistent Disk list.
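The console steps above have a rough command-line equivalent. The sketch below assumes standard persistent disks (`pd-standard`); the disk names `disk-200` and `disk-600`, the function name, and the default zone are placeholders chosen for illustration.

```shell
# Create the 200 GB and 600 GB standard persistent disks.
# Zonal disks must be in the same zone as the instances that will use them.
create_test_disks() {
  local zone=${1:-us-central1-a}
  local size
  for size in 200 600; do
    gcloud compute disks create "disk-${size}" \
      --type=pd-standard --size="${size}GB" --zone="$zone"
  done
}
```

Call it with the zone of the two instances, for example `create_test_disks europe-west1-b`.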

Step 3: Attach the Persistent Disks to the Instances

With the newly created disks ready to be put to use, we need to attach them to the virtual instances. We are going to attach the 200 GB disk to instance-1 and the 600 GB disk to instance-2.

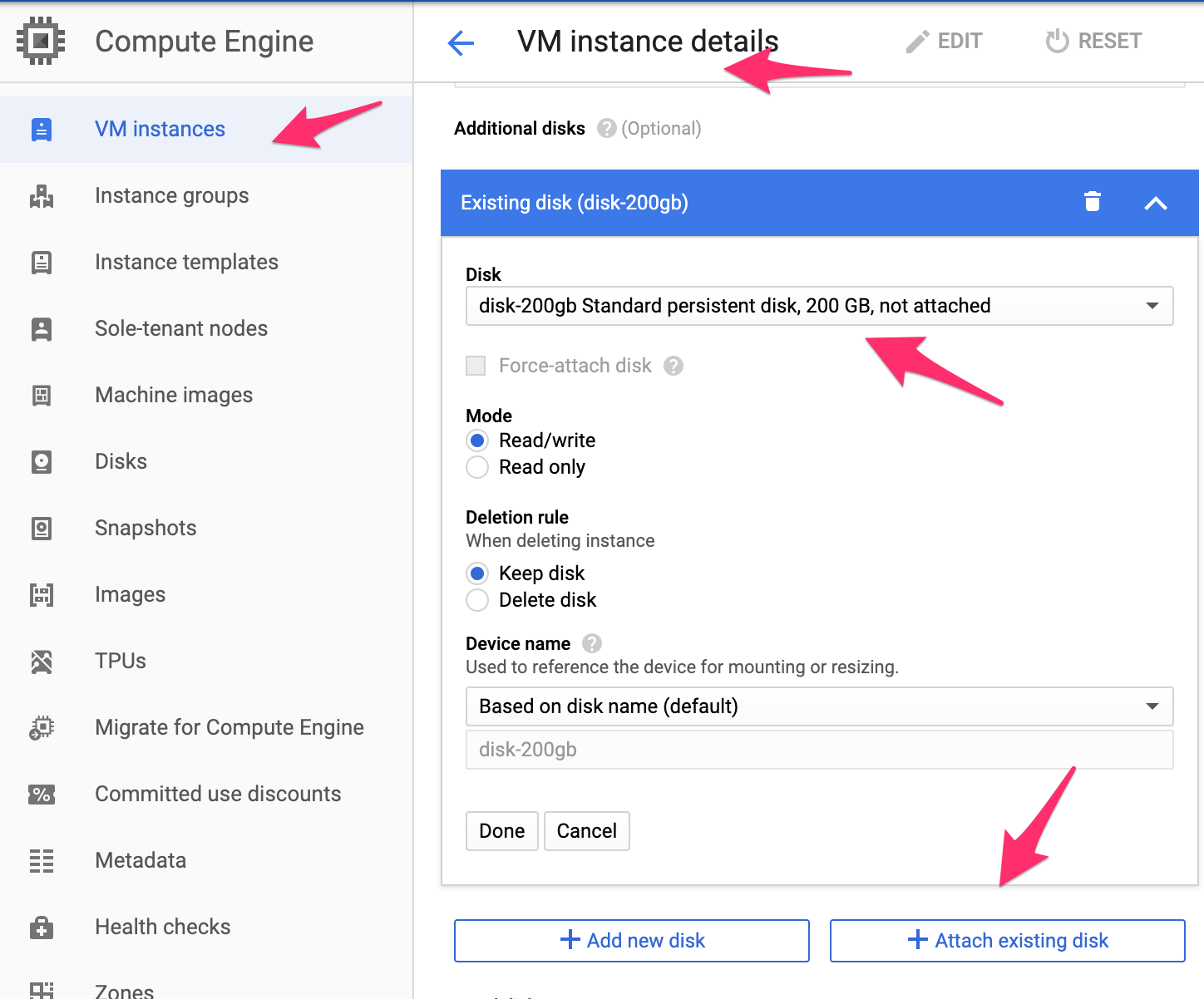

1. Start off by heading to the left-hand panel. In the VM Instances section, edit the configuration of instance-1.

2. Choose to add the existing 200 GB persistent disk as an additional disk in the instance configuration.

VM Instance configuration.

3. Repeat steps 1 and 2 in this section to attach the 600 GB disk to instance-2.

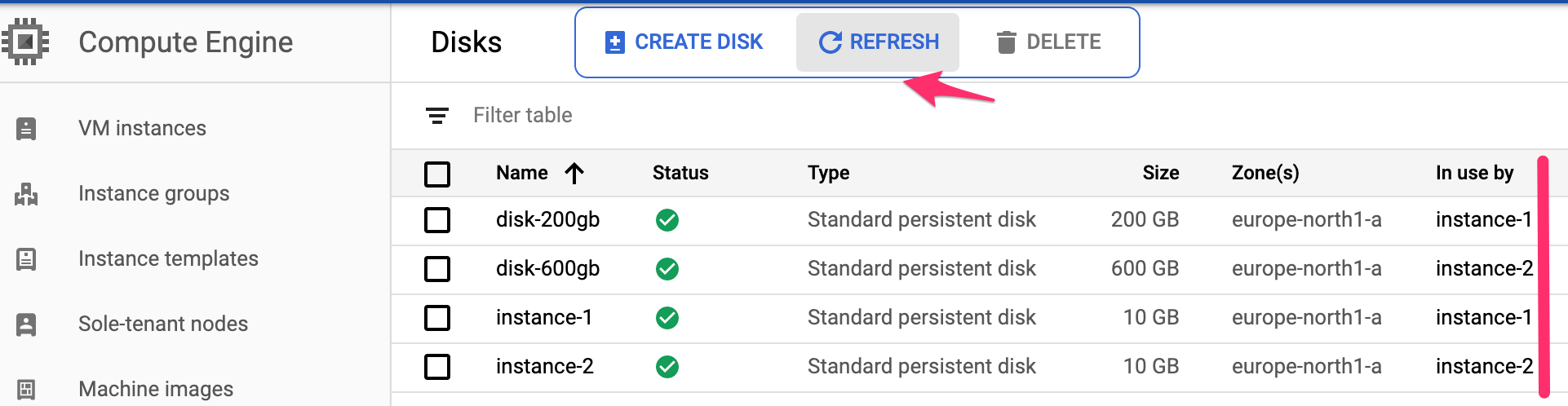

4. Navigate to the Persistent Disk list on the left-hand panel and confirm that all disks are now in use by the correct virtual instances.

The list of Compute Engine Persistent Disks.
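Attaching the disks can also be sketched with `gcloud`. The disk names `disk-200` and `disk-600`, the function name, and the default zone are hypothetical; substitute the names and zone you actually used.

```shell
# Attach the smaller disk to instance-1 and the larger disk to instance-2.
attach_test_disks() {
  local zone=${1:-us-central1-a}
  gcloud compute instances attach-disk instance-1 --disk=disk-200 --zone="$zone"
  gcloud compute instances attach-disk instance-2 --disk=disk-600 --zone="$zone"
}
```

After running `attach_test_disks` with your zone, the disk list in the console should show each disk as in use by its instance.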

Step 4: Simple Performance/Storage IOPS Comparison

To see the IOPS difference between the two persistent disks, we will log in to each instance and run a simple read IOPS performance test using fio (the flexible I/O tester tool).

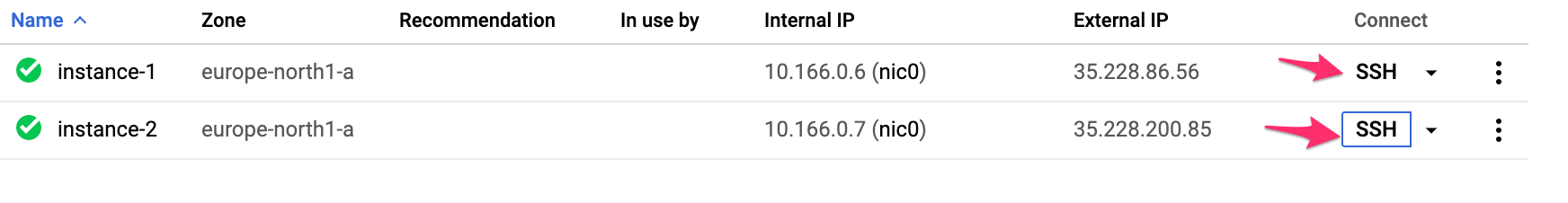

1. Go to the VM instances section and open a browser-based SSH connection to each instance by pressing the “SSH” button.

VM Instances list.

2. In each instance, install the fio tester tool by running the following command:

sudo apt-get update && sudo apt-get install fio -y

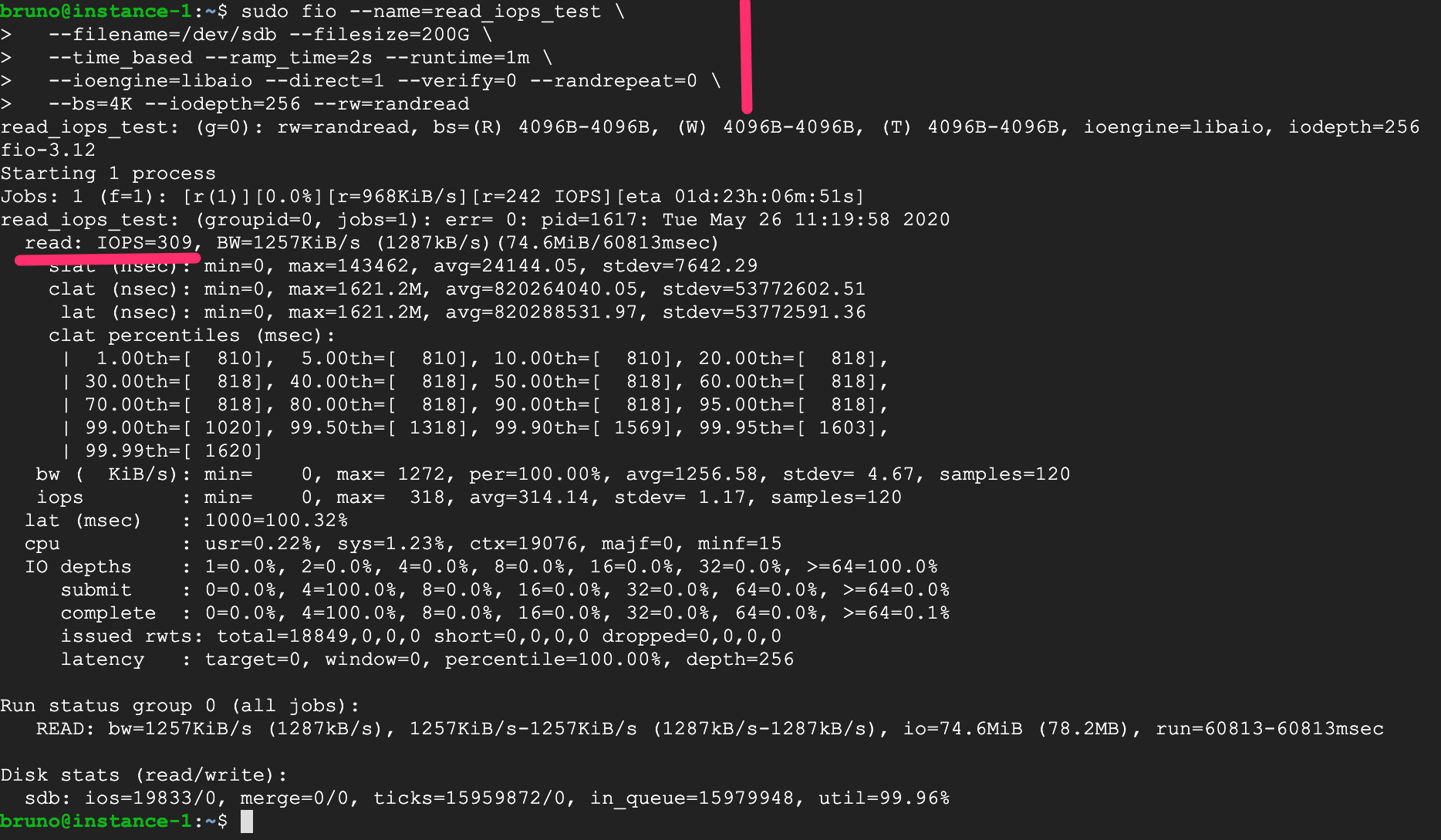

3. Inside the SSH connection of instance-1, run fio to test the read IOPS of the attached persistent disk (/dev/sdb):

sudo fio --name=read_iops_test \
--filename=/dev/sdb --filesize=200G \
--time_based --ramp_time=2s --runtime=1m \
--ioengine=libaio --direct=1 --verify=0 --randrepeat=0 \
--bs=4K --iodepth=256 --rw=randread

Instance-1 read IOPS testing.

4. The measured IOPS (309) is at the level we expected for a standard persistent disk of 200 GB.

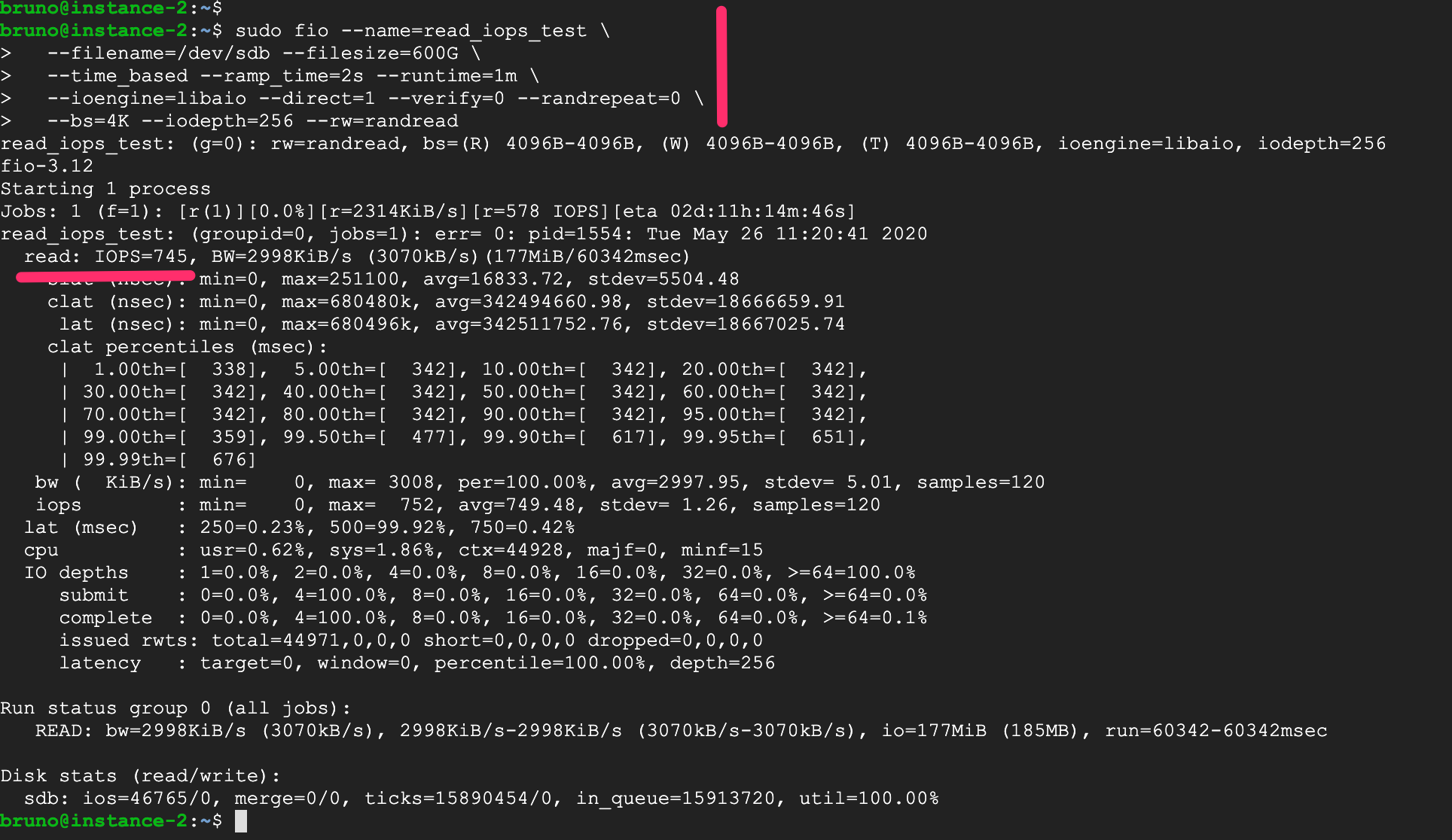

5. Go to the SSH connection of instance-2 and execute the same read IOPS test. Remember to change the value of the --filesize parameter to 600G.

Instance-2 read IOPS testing.

6. The results show a number of IOPS (745) that matches the expected level for a standard persistent disk of 600 GB.

7. Analyzing the other metrics in the results, we can also observe that the higher number of provisioned IOPS translates into higher throughput and lower latency compared with the 200 GB persistent disk.
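Because the test is the same on both instances apart from the file size, it can be wrapped in a small function to avoid copy-and-paste mistakes. The wrapper name `run_read_iops_test` is hypothetical; the fio options are the ones used in the steps above. It assumes the attached disk shows up as /dev/sdb, which is worth confirming with `lsblk` before running a test against a raw device.

```shell
# Run the random-read IOPS test against a given device.
#   $1 = block device (for example /dev/sdb)
#   $2 = fio size string matching the disk (for example 200G or 600G)
run_read_iops_test() {
  local device=$1 size=$2
  sudo fio --name=read_iops_test \
    --filename="$device" --filesize="$size" \
    --time_based --ramp_time=2s --runtime=1m \
    --ioengine=libaio --direct=1 --verify=0 --randrepeat=0 \
    --bs=4K --iodepth=256 --rw=randread
}
```

On instance-1 this would be called as `run_read_iops_test /dev/sdb 200G`, and on instance-2 as `run_read_iops_test /dev/sdb 600G`.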

Conclusion

Storage performance has a big impact on the overall health of a system. Provisioning a persistent disk with a high number of IOPS is a fairly basic operation to carry out, but it takes careful consideration to find the proper combination of disk type, size, and instance type without driving costs too high.

However, there are costs to subpar storage performance too. For example, a slow relational database system can cause a lot of idle computing time spent waiting for SQL statements to complete. The solution is high-performance storage that can operate at reduced cost. One way to get that is by using NetApp Cloud Volumes ONTAP for Google Cloud.

The Cloud Volumes ONTAP data management platform includes advanced data management features such as cost-cutting storage efficiencies, data tiering from Persistent Disk to Google Cloud Storage, and space- and cost-efficient storage snapshots and data clones. Together, these can help reduce the expense of your high-performance storage in Google Cloud by as much as 70% in some cases.