
July 25, 2021

Topics: Cloud Volumes ONTAP, Google Cloud, Kubernetes (Advanced, 10 minute read)

In the early days of software development, when computational resources were limited and expensive, it was common to design entire complex systems to be deployed and operated on a single machine where multiple services coexisted. Today, microservices architecture is the new normal.

In this blog, we take a closer look at microservices and provide a step-by-step guide on how to leverage Google Cloud in microservices architectures using Google Cloud Storage.

Read on below to find out:

- What Are Microservices?

- How Google Cloud & Microservices Can Leverage Google Cloud Storage

- A Typical Microservices Architecture Example

- How to Implement a Google Cloud & Microservices Solution with Google Cloud Storage

- How Do I Deploy Microservices in Google Cloud?

What Are Microservices?

With the technological advances of recent years and hardware (physical and virtual) becoming more affordable, it became clear that such monolithic architectures could not cope with the requirements of modern software solutions. Bundling everything together on the same machine caused numerous problems with scalability, high availability, disaster recovery, maintainability, and so on.

The microservices concept was born out of the need to decouple the components of these monolithic architectures to meet today's business demands for scale and agility. Microservices leverage technologies such as cloud migration and containerization to break systems apart into smaller, more flexible components that work together as a solution.

How Google Cloud & Microservices Can Leverage Google Cloud Storage

Google Cloud Storage (GCS) is a managed storage service that is part of the Google public cloud offering. A managed object storage service such as GCS provides near-unlimited storage for files (called objects), accessed through an HTTP API with simple operations such as GET and PUT.
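To illustrate what "simple GET and PUT operations" look like at the HTTP level, here is a minimal sketch against the Cloud Storage XML API endpoint (https://storage.googleapis.com/BUCKET/OBJECT). It assumes curl is installed and gcloud is already authenticated so it can issue an access token; the function names are hypothetical helpers, not part of any Google tool.

```bash
#!/usr/bin/env bash
# Sketch: store and retrieve a GCS object with plain HTTP PUT and GET against
# the Cloud Storage XML API (https://storage.googleapis.com/BUCKET/OBJECT).
# Assumes curl is installed and gcloud is authenticated (for example with
# "gcloud auth activate-service-account") so it can issue an access token.

# Build the object URL. Assumes a simple object name such as "photo.jpg";
# names with spaces or other special characters would need percent-encoding.
gcs_object_url() {
  local bucket="$1" object="$2"
  printf 'https://storage.googleapis.com/%s/%s\n' "$bucket" "$object"
}

# PUT: upload a local file as an object (curl --upload-file sends an HTTP PUT).
gcs_put() {
  local file="$1" bucket="$2" object="$3"
  curl -sS --fail --upload-file "$file" \
    -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    "$(gcs_object_url "$bucket" "$object")"
}

# GET: download an object into a local file.
gcs_get() {
  local bucket="$1" object="$2" file="$3"
  curl -sS --fail -o "$file" \
    -H "Authorization: Bearer $(gcloud auth print-access-token)" \
    "$(gcs_object_url "$bucket" "$object")"
}
```

For example, gcs_put photo.jpg gcs-microservices photo.jpg uploads a file and gcs_get gcs-microservices photo.jpg copy.jpg reads it back. The gsutil commands used by the microservices below issue equivalent HTTP requests for you.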

In a microservices architecture on Google Cloud, GCS plays an important role when a microservice needs to persist data such as files. Storing the files outside the microservice's own file system allows the service to remain stateless, which means multiple instances of the same microservice can run in parallel while reading and writing files in GCS. Because of its near-infinite scalability, low operational overhead, and affordable cost per GB, Google Cloud Storage is a great choice for enabling scalability and decoupling without much engineering effort.

However, this approach has its own shortcomings. GCS is accessed through an API, the CLI, or an SDK rather than natively as a file system: a bucket cannot be mounted like a regular disk without additional tooling such as Cloud Storage FUSE, which does not provide full POSIX file system semantics. Another aspect to consider is that GCS is billed based on the volume of data stored and the number of operations performed. This becomes a challenge when handling a large volume of small files, because storing and retrieving each object counts as a separate operation.
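A rough way to see why object count matters more than object size, assuming each object is uploaded once and read back r times: Cloud Storage bills uploads (and listings) as Class A operations and object reads as Class B operations, so with N objects and per-operation prices p_A and p_B (placeholders here; check the current pricing page for actual values) the operation charges are:

$$
C_{\text{ops}} = \underbrace{N\,p_A}_{\text{uploads}} + \underbrace{r\,N\,p_B}_{\text{reads}} = N\,(p_A + r\,p_B)
$$

The total grows linearly with N and does not depend on how large each object is, whereas the same data packed into a single object would incur only p_A + r p_B in operation charges. Storage charges, by contrast, depend on total bytes and are about the same in both cases.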

When facing these limitations, it is worth exploring a different type of storage service or larger architectural changes.

A Typical Microservices Architecture Example

To understand how Google Cloud, microservices, and GCS work together in practice, we will look at an example microservices architecture and walk through its implementation step by step.

ACME Inc. is a news media company that receives large numbers of images from a vast network of sources worldwide. To classify these images faster, the organization plans to develop a software solution that uses different tools to analyze the images and automatically extract relevant metadata from them. This solution will play a critical role in helping reporters and editors select the best images for their articles and publications.

To show how microservices can be leveraged in this case, we will develop a solution that retrieves images from a remote image library via an API and uses the Google Cloud Vision API, a pre-trained AI/ML service, to detect labels associated with each image.

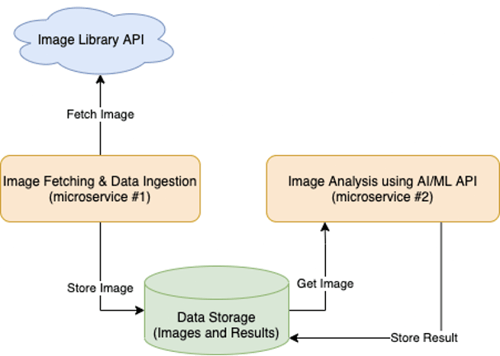

Our architecture is based on two microservices: one for image fetching and data ingestion, and another for image analysis using the AI/ML API. A Google Cloud Storage bucket is used to temporarily store and share the images between the two microservices and to store the analysis results.

Example Microservices Solution Architecture

How to Implement a Google Cloud & Microservices Solution with Google Cloud Storage

1. Create a Google Cloud IAM Service Account

To get started, navigate to the Google Cloud Platform Identity and Access Management (IAM) service in the web console.

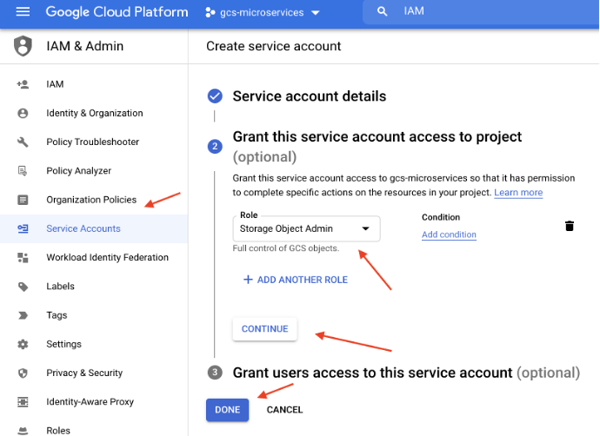

Create a new service account and grant it access to GCS with the “Storage Object Admin” role. The microservices will use this service account to interact with Google Cloud Storage.

Create an IAM service account panel

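If you prefer the command line, the console steps above can be sketched with gcloud as follows. The account name and project ID are placeholders you pass in, and the DRY_RUN switch (a convenience added here, not a gcloud feature) prints the commands instead of running them so you can review them first.

```bash
#!/usr/bin/env bash
# Sketch: gcloud equivalent of creating the service account in the console and
# granting it the "Storage Object Admin" role (roles/storage.objectAdmin).
# The account name and project ID passed in are placeholders for your own values.

# Print commands instead of executing them when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Service account emails have the form NAME@PROJECT_ID.iam.gserviceaccount.com.
sa_email() {
  printf '%s@%s.iam.gserviceaccount.com\n' "$1" "$2"
}

create_storage_sa() {
  local name="$1" project="$2"
  run gcloud iam service-accounts create "$name" --project="$project"
  run gcloud projects add-iam-policy-binding "$project" \
    --member="serviceAccount:$(sa_email "$name" "$project")" \
    --role="roles/storage.objectAdmin"
}
```

For example, DRY_RUN=1 create_storage_sa gcs-microservices-sa my-project shows both commands without executing them. The project-level binding mirrors the console step; a binding on the bucket alone (for example with gsutil iam ch) would be a narrower alternative.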

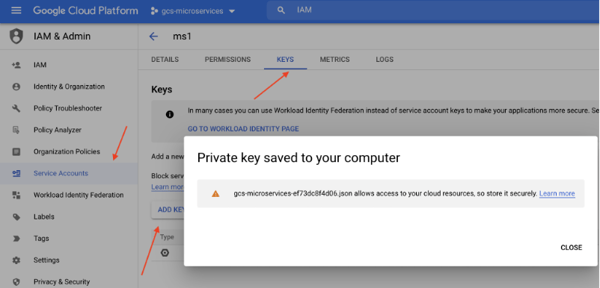

After the service account is created, open its “Keys” tab, add a new key of type JSON, and save the private key file on your local machine.

Saving the IAM Service Account Key

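The key can also be created from the command line. This sketch writes it directly under the file name the microservices expect (gcp-credentials.json) and includes a hypothetical helper that copies it into the ms1 and ms2 folders used in steps 4 and 5; the service account email is a placeholder.

```bash
#!/usr/bin/env bash
# Sketch: gcloud equivalent of adding a JSON key in the "Keys" tab, written
# straight to the file name the microservices expect (gcp-credentials.json).
# The service account email passed in is a placeholder for your own.

# Print commands instead of executing them when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

create_sa_key() {
  local email="$1" out_file="${2:-gcp-credentials.json}"
  # Keys are created in JSON format unless another --key-file-type is requested
  run gcloud iam service-accounts keys create "$out_file" --iam-account="$email"
}

# Hypothetical helper: copy the key into both microservice folders.
distribute_key() {
  local key="${1:-gcp-credentials.json}"
  run cp "$key" ms1/gcp-credentials.json
  run cp "$key" ms2/gcp-credentials.json
}
```

Treat this file as a secret: both Dockerfiles copy the whole folder into the image, so images built this way contain the key and should not be pushed to a public registry.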

2. Enable Google Vision API

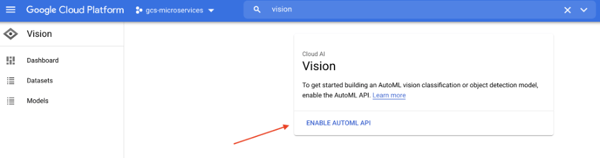

Next, navigate to the Google Cloud Vision service. When prompted, enable the Cloud Vision API, which the microservices will use to analyze the images.

Enabling the Google Cloud Vision API

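The same API can be enabled with gcloud. In this sketch the project ID and the local test image are placeholders; the optional smoke test calls gcloud ml vision detect-labels, the same command the analysis microservice uses later.

```bash
#!/usr/bin/env bash
# Sketch: enable the Cloud Vision API (service name vision.googleapis.com)
# from the command line instead of the console. The project ID is a placeholder.

# Print commands instead of executing them when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

enable_vision_api() {
  local project="$1"
  run gcloud services enable vision.googleapis.com --project="$project"
}

# Optional smoke test on any local image (path is a placeholder); prints JSON.
smoke_test_vision() {
  local image="$1"
  run gcloud ml vision detect-labels "$image"
}
```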

3. Create a Google Cloud Storage Bucket

The microservices will use Google Cloud Storage to store the images and analysis results. In GCS, files are stored inside a bucket, a logical resource that groups multiple objects. If you have never created a bucket before, follow these steps to learn how.

You can choose any bucket name you want (the name must be globally unique), as long as you adjust the microservices code accordingly. For reference, the example below uses a bucket named gcs-microservices.
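As a command-line alternative, this sketch creates the bucket with gsutil, the same tool the microservices use. The location is a placeholder, and the name check is a simplified version of the bucket naming rules covering names without dots only; global uniqueness can only be confirmed by attempting the creation.

```bash
#!/usr/bin/env bash
# Sketch: create the bucket with gsutil (the tool the microservices use).
# The location passed in (e.g. us-central1) is a placeholder; pick your own.

# Print commands instead of executing them when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Simplified check for names without dots: 3-63 characters of lowercase
# letters, digits, dashes, and underscores, starting and ending with a letter
# or digit. Global uniqueness can only be checked by trying to create it.
is_valid_bucket_name() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9][a-z0-9_-]{1,61}[a-z0-9]$'
}

create_bucket() {
  local bucket="$1" location="$2"
  if ! is_valid_bucket_name "$bucket"; then
    echo "invalid bucket name: $bucket" >&2
    return 1
  fi
  run gsutil mb -l "$location" "gs://$bucket"
}
```

For example, create_bucket gcs-microservices us-central1 runs gsutil mb -l us-central1 gs://gcs-microservices, while a name such as GCS_Microservices is rejected before any request is made.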

4. Create the Image Fetching Microservice

On your local machine, create a folder named ms1 and copy the previously downloaded IAM service account key file into it, renaming it gcp-credentials.json.

Inside the folder, create two files, Dockerfile and entrypoint.sh, and paste the following code into each of them, respectively.

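```dockerfile
#####
## Microservice 1: Fetching and storing photos
#####

FROM debian:latest

# Set the working directory to /app
WORKDIR /app

# Copy the current directory contents (entrypoint.sh, gcp-credentials.json) into the container at /app
COPY . /app

# Install the tools used by entrypoint.sh and the repository setup below
RUN apt-get update && apt-get install -qy wget curl apt-transport-https ca-certificates gnupg

# Add Google's package repository and install the Google Cloud CLI (gcloud and gsutil).
# The signing key is stored with gpg --dearmor because apt-key is deprecated.
RUN curl -fsSL https://packages.cloud.google.com/apt/doc/apt-key.gpg \
      | gpg --dearmor -o /usr/share/keyrings/cloud.google.gpg \
    && echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" \
      > /etc/apt/sources.list.d/google-cloud-sdk.list \
    && apt-get update -y \
    && apt-get install -y google-cloud-cli

# Exec form runs bash directly instead of wrapping it in /bin/sh -c
ENTRYPOINT ["bash", "entrypoint.sh"]
```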

ms1 Dockerfile

```bash
#!/bin/bash

## Adjust the bucket name accordingly.
GCS_BUCKET_NAME=gcs-microservices
##

# Authenticate gcloud/gsutil with the service account key copied into the image
gcloud auth activate-service-account --key-file=gcp-credentials.json

while true
do
  echo "Downloading a new random image"
  # Random 10-character alphanumeric name with a .jpg extension
  IMAGE_NAME=$(tr -dc A-Za-z0-9 </dev/urandom | head -c 10).jpg
  # Fetch a random 1024x768 image from picsum.photos
  wget -O "$IMAGE_NAME" https://picsum.photos/1024/768

  echo "Storing the photo in Google Cloud Storage bucket"
  gsutil cp "$IMAGE_NAME" "gs://$GCS_BUCKET_NAME"

  echo "Deleting temporary local file"
  rm -f "$IMAGE_NAME"

  echo "Wait for 10 seconds before downloading again"
  sleep 10
done
```

ms1 entrypoint.sh

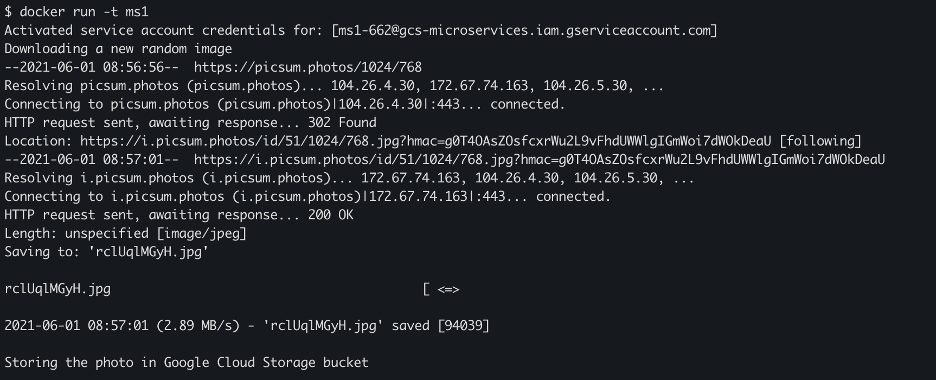

This code creates a Docker container that downloads a new image from a remote API (picsum.photos) and stores it in the Google Cloud Storage bucket every ten seconds.
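The object-naming step in entrypoint.sh can be pulled out into a small function if you want to reuse or test it. This is a sketch, not part of the original microservice; the LC_ALL=C prefix is an addition for portability when running it outside the Debian container.

```bash
#!/usr/bin/env bash
# Sketch: ms1's object-naming step as a reusable function. It keeps only
# alphanumeric bytes from /dev/urandom and takes the first 10, giving
# 62^10 (about 8.4 x 10^17) possible names, so collisions between uploads are
# very unlikely. LC_ALL=C is an addition for portability: some tr builds
# (e.g. on macOS) reject random bytes that are invalid in a UTF-8 locale.
random_image_name() {
  printf '%s.jpg\n' "$(LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c 10)"
}
```

In entrypoint.sh, the assignment would become IMAGE_NAME=$(random_image_name).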

To build the container and start the microservice, run docker build -t ms1 . followed by docker run -t ms1 inside the ms1 folder. If you don’t have Docker installed yet, download and set it up first.

Docker build and run the container


If everything goes well, the first microservice will be up and running, fetching new images from the remote API and storing them in the GCS bucket.

5. Create the Image Analysis Microservice

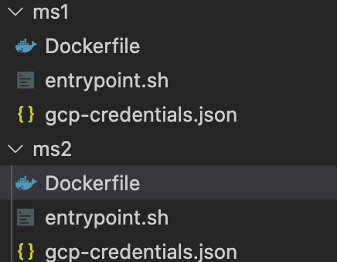

Create a second folder named ms2 and, as in the previous step, copy the IAM service account key file into it as gcp-credentials.json.

Inside it, create two new files, Dockerfile and entrypoint.sh, with the content below.

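```dockerfile
#####
## Microservice 2: Analyzing Photos with AI/ML API to detect labels
#####

FROM debian:latest

# Set the working directory to /app
WORKDIR /app

# Copy the current directory contents (entrypoint.sh, gcp-credentials.json) into the container at /app
COPY . /app

# Install the tools used by the repository setup below
RUN apt-get update && apt-get install -qy wget curl apt-transport-https ca-certificates gnupg

# Add Google's package repository and install the Google Cloud CLI (gcloud and gsutil).
# The signing key is stored with gpg --dearmor because apt-key is deprecated.
RUN curl -fsSL https://packages.cloud.google.com/apt/doc/apt-key.gpg \
      | gpg --dearmor -o /usr/share/keyrings/cloud.google.gpg \
    && echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" \
      > /etc/apt/sources.list.d/google-cloud-sdk.list \
    && apt-get update -y \
    && apt-get install -y google-cloud-cli

# Exec form runs bash directly instead of wrapping it in /bin/sh -c
ENTRYPOINT ["bash", "entrypoint.sh"]
```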

ms2 Dockerfile

```bash
#!/bin/bash

## Adjust the bucket name accordingly.
GCS_BUCKET_NAME=gcs-microservices
##

# Authenticate gcloud/gsutil with the service account key copied into the image
gcloud auth activate-service-account --key-file=gcp-credentials.json

while true
do
  echo "Get the first available image from the GCS bucket"
  # Quote the URL so the local shell leaves *.jpg for gsutil to expand;
  # gsutil exits with an error when nothing matches, so silence stderr
  FIRST_IMAGE_OBJ=$(gsutil ls -r "gs://$GCS_BUCKET_NAME/*.jpg" 2>/dev/null | head -n1)

  if [ -z "$FIRST_IMAGE_OBJ" ]; then
    echo "No available images to analyze, waiting for 30 seconds"
    sleep 30
  else
    # gs://BUCKET/NAME.jpg -> NAME (assumes top-level objects without extra dots)
    FIRST_IMAGE_FILENAME=$(echo "$FIRST_IMAGE_OBJ" | cut -f4 -d / | cut -f1 -d .)
    gsutil cp "$FIRST_IMAGE_OBJ" .

    echo "Analyzing image file using Google Vision API to detect labels associated with it"
    gcloud ml vision detect-labels "$FIRST_IMAGE_FILENAME.jpg" > "$FIRST_IMAGE_FILENAME-labels.json"
    cat "$FIRST_IMAGE_FILENAME-labels.json"

    echo "Storing analysis in GCS bucket"
    gsutil cp "$FIRST_IMAGE_FILENAME-labels.json" "gs://$GCS_BUCKET_NAME/"

    echo "Deleting the image file from the GCS bucket and local temporary files"
    rm -f "$FIRST_IMAGE_FILENAME.jpg" "$FIRST_IMAGE_FILENAME-labels.json"
    gsutil rm "$FIRST_IMAGE_OBJ"

    echo "Wait for 3 seconds before processing again"
    sleep 3
  fi
done
```

ms2 entrypoint.sh

This microservice periodically checks the GCS bucket for new, unprocessed images. When one is available, it downloads the file to the local file system and uses the Google Cloud Vision API to detect the labels associated with it. The results, in JSON format, are then stored in the GCS bucket, and the image file is deleted.
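The file name extraction in ms2 (cut -f4 -d / | cut -f1 -d .) only works for objects at the top level of the bucket whose names contain a single dot. A sketch of a more robust alternative using POSIX parameter expansion, with a hypothetical function name:

```bash
#!/usr/bin/env bash
# Sketch: an alternative to ms2's "cut -f4 -d / | cut -f1 -d ." pipeline using
# POSIX parameter expansion. Unlike the cut version, it also handles objects in
# sub-folders (gs://bucket/dir/name.jpg) and names containing extra dots.
image_stem() {
  local obj="$1" base
  base=${obj##*/}                 # strip everything up to the last "/"
  printf '%s\n' "${base%.jpg}"    # strip a trailing ".jpg"
}
```

In entrypoint.sh this would read FIRST_IMAGE_FILENAME=$(image_stem "$FIRST_IMAGE_OBJ"). For gs://gcs-microservices/2021/07/photo.v2.jpg it yields photo.v2, where the cut pipeline would return 2021.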

In terms of structure, you should have the following folders and files:

Folder and file structure


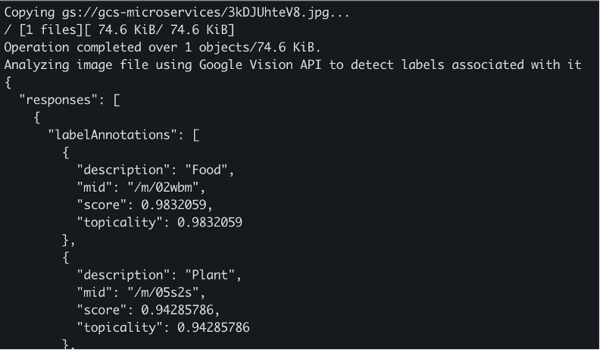

To build the container and start the microservice, run docker build -t ms2 . followed by docker run -t ms2 inside the ms2 folder.

Running the analysis container


If successful, this microservice outputs the AI/ML image analysis in JSON format. In the example above, the analysis returned the labels Food and Plant, with confidence scores above 90%.

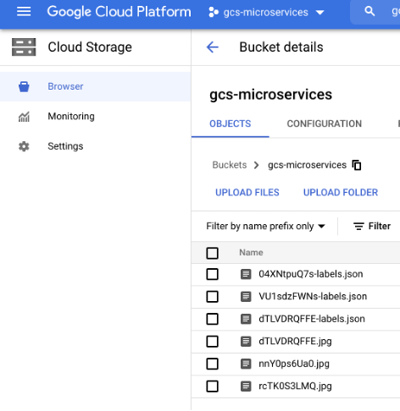

6. Review the Analysis Results

In the Google Cloud web console, navigate to the Cloud Storage service and open the bucket you created earlier. The bucket should contain a mix of new images (.jpg) and analysis results (.json).

GCS Bucket images and results

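The same check can be done from a terminal with gsutil. In this sketch the helper names are hypothetical, and the URLs are quoted so the local shell leaves the wildcards for gsutil to expand against the bucket.

```bash
#!/usr/bin/env bash
# Sketch: review the bucket contents from the command line with gsutil.
# Pass the bucket name (e.g. gcs-microservices) and, for show_result,
# the name of a results file.

# Print commands instead of executing them when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Images that ms2 has not processed yet
list_pending_images() { run gsutil ls "gs://$1/*.jpg"; }

# Analysis results written by ms2
list_results() { run gsutil ls "gs://$1/*.json"; }

# Print one result file to the terminal
show_result() { run gsutil cat "gs://$1/$2"; }
```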

How Do I Deploy Microservices in Google Cloud?

In our example, we developed and ran the microservices on our local machine while using a storage bucket in Google Cloud. The next logical step in the solution lifecycle would be to deploy these microservices to a Google Cloud staging or production environment.

While deployment is out of scope for this small proof of concept, it is worth noting that Google Cloud offers different options: for simple use cases with one or two containers, Cloud Run, a serverless managed service, is a great choice, while more complex scenarios might benefit from Google Kubernetes Engine (GKE) or Google Anthos. (Cloud Run services expect containers to respond to HTTP requests, so polling loops like the ones in this example would need some adaptation.)

Conclusion

No matter how you choose to deploy the microservices infrastructure, it’s always important to consider the storage element in the equation. For customers who have demanding storage requirements, Cloud Volumes ONTAP, the NetApp data management solution, is an option that should be considered as part of your microservices architecture.

Cloud Volumes ONTAP for Google Cloud gives you extensive control and flexibility over where and how data is stored, and it offers advanced features such as:

- Instant, zero capacity cost data cloning technology

- Automatic data tiering between Persistent Disk and Google Cloud Storage

- Data protection for Google Cloud backup with space- and cost-efficient NetApp Snapshot copies

- Cost-cutting storage efficiencies that reduce storage footprint and costs by as much as 70%.

Used together, these benefits can solve the microservice data management challenges of the most demanding enterprise customers.