September 22, 2021

Topics: Cloud Volumes ONTAP, Kubernetes | Advanced | 8 minute read

What Is Kubernetes CSI?

Kubernetes CSI is a Kubernetes-specific implementation of the Container Storage Interface (CSI). The CSI specification provides a standard that enables connectivity between storage systems and container orchestration (CO) platforms. It is the foundation of Kubernetes storage management.

The CSI standard determines how arbitrary block and file storage systems are exposed to workloads on container orchestration systems like Kubernetes. Third-party storage vendors can use CSI to build and deploy plugins that let Kubernetes work with new storage systems, without having to modify the core Kubernetes code.

In this article:

- The Need for CSI

- How to Use a CSI Volume?

- Building Your Own CSI Driver for Kubernetes

- Kubernetes CSI with NetApp Cloud Volumes ONTAP

The Need for CSI

Before the Container Storage Interface, Kubernetes supported only in-tree volume plugins, which were compiled into and shipped with the core Kubernetes binaries. This meant that storage providers had to check their plugin code into the core Kubernetes codebase to add support for a new storage system.

FlexVolume, a plugin-based solution, attempted to resolve this issue by exposing an exec-based API to third-party plugins. Although it followed a similar principle to CSI in decoupling plugins from the Kubernetes binaries, the approach had several issues. First, deploying driver files required root access to the file systems of the master and worker nodes. Second, it carried a significant burden of operating system dependencies and prerequisites that were assumed to be available on the host.

CSI addresses these issues by running plugins in containers and leveraging Kubernetes storage primitives. It has become the standard way to use out-of-tree storage plugins, and it lets storage providers deploy plugins that are consumed through standard Kubernetes primitives such as StorageClasses, PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs).

The main objective of CSI is to standardize the mechanism for exposing all types of storage systems across every container orchestrator.

Related content: Read our guide to Kubernetes Persistent Volumes

How to Use a CSI Volume?

A CSI Volume can be used to provision PersistentVolume resources, which can be consumed by Kubernetes workloads. You can provision PersistentVolumes dynamically (when they are requested by a workload) or manually.

Dynamic Provisioning

You can create a StorageClass that references a CSI storage plugin. This enables Kubernetes workloads to dynamically create PersistentVolumes. The data saved to those PersistentVolumes is persisted to the storage system managed by the CSI plugin.

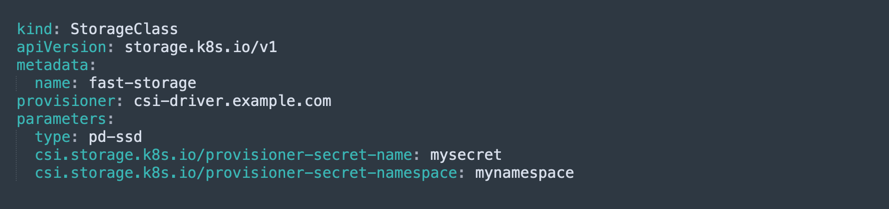

For example, the following StorageClass enables provisioning of storage volumes of type “fast-storage”, using a CSI plugin called “csi-driver.example.com”. (This and the other examples below were shared in the official Kubernetes CSI blog post)
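In text form, such a StorageClass might look like the sketch below. It is modeled on the example in the Kubernetes CSI blog post; the `pd-ssd` value is an assumption, and the exact parameter keys a real driver accepts depend on that driver.

```yaml
# Sketch of a StorageClass backed by a CSI plugin.
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: fast-storage
# Name of the CSI plugin that provisions volumes for this class.
provisioner: csi-driver.example.com
parameters:
  # Opaque, driver-specific parameter (value assumed for illustration).
  type: pd-ssd
  # Name and namespace of the Secret passed to the plugin on provisioning.
  csi.storage.k8s.io/provisioner-secret-name: mysecret
  csi.storage.k8s.io/provisioner-secret-namespace: mynamespace
```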

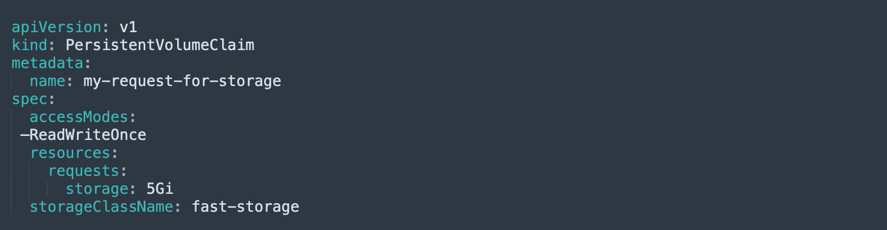

When a Kubernetes entity creates a PersistentVolumeClaim object that requests this StorageClass, a PersistentVolume belonging to the StorageClass is dynamically provisioned. The image below contains an example of a PVC that references the fast-storage StorageClass.
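A minimal sketch of such a claim is shown here. The claim name `my-request-for-storage`, the access mode, and the 5Gi size are illustrative assumptions; only the `storageClassName` is taken from the text.

```yaml
# Sketch of a PVC that triggers dynamic provisioning through the CSI plugin.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-request-for-storage   # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce              # assumed access mode
  resources:
    requests:
      storage: 5Gi               # assumed size
  # Selects the StorageClass, and through it the CSI plugin.
  storageClassName: fast-storage
```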

Note that the StorageClass definition above has three parameters: type, plus the name (mysecret) and namespace (mynamespace) of a secret. When the PVC is created, here is what happens behind the scenes:

- Kubernetes issues a CreateVolume call to the CSI plugin (csi-driver.example.com), passing the StorageClass parameters, including the referenced secret, which enables access to the storage system.

- Kubernetes automatically creates a PersistentVolume object representing the new storage volume, which physically resides on the storage system behind the CSI plugin.

- Kubernetes binds the PersistentVolume (PV) object to the relevant PersistentVolumeClaim (PVC).

- From this point onwards, pods that reference the claim can use the storage volume.

Read our blog post: Dynamic Kubernetes Persistent Volume Provisioning with NetApp Trident and Cloud Volumes ONTAP

Manual Provisioning

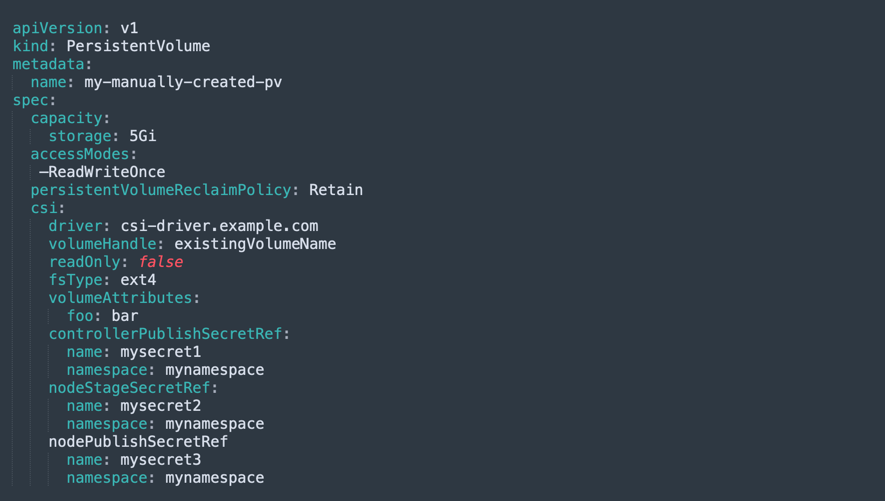

You can manually provision a volume in Kubernetes and make it available to workloads without using the PVC mechanism. The image below provides an example of a PV object that allows workloads to use a storage volume called “existingVolumeName”. As above, the CSI volume references the storage plugin csi-driver.example.com.
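A hand-written PV of this kind could look roughly like the following sketch. The object name, capacity, reclaim policy, and file system type are assumptions chosen for illustration; `driver` and `volumeHandle` carry the values named in the text.

```yaml
# Sketch of a manually created PV that points at a pre-existing volume.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-manually-created-pv   # hypothetical name
spec:
  capacity:
    storage: 5Gi                 # assumed size of the existing volume
  accessModes:
    - ReadWriteOnce
  # Retain keeps the backing volume when the claim is deleted (assumed policy).
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: csi-driver.example.com
    # Identifier the storage system uses for the existing volume.
    volumeHandle: existingVolumeName
    readOnly: false
    fsType: ext4                 # assumed file system
```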

Attaching and Mounting Volumes

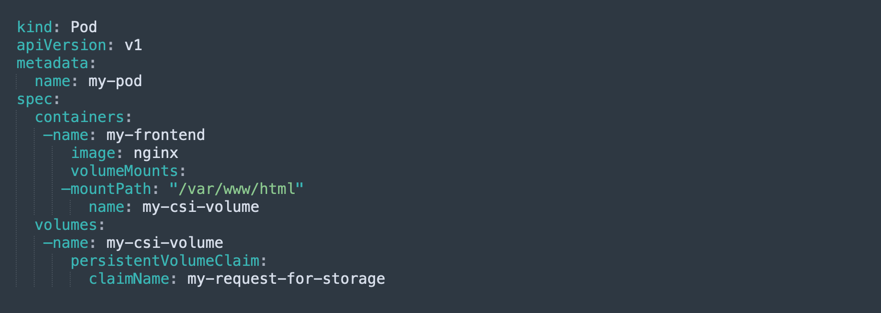

The image below provides an example that shows how a Kubernetes pod template can reference a PVC to access a CSI volume.

When a PersistentVolumeClaim appears in the pod template, every time the pod is scheduled, Kubernetes triggers several operations on the CSI plugin, including ControllerPublishVolume, NodeStageVolume, and NodePublishVolume. These calls attach the storage volume to the node, mount it, and make it available for use by containers running in the pod.
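As a rough illustration, a pod that consumes a CSI volume through a claim might be declared as follows. The pod, container, and volume names, the `nginx` image, and the mount path are assumptions; `claimName` must match an existing PVC (here a hypothetical claim called `my-request-for-storage`).

```yaml
# Sketch of a pod that mounts a CSI-backed volume through a PVC.
kind: Pod
apiVersion: v1
metadata:
  name: my-pod                         # hypothetical name
spec:
  containers:
    - name: my-frontend
      image: nginx                     # assumed image
      volumeMounts:
        - mountPath: "/var/www/html"   # where the volume appears in the container
          name: my-csi-volume
  volumes:
    - name: my-csi-volume
      persistentVolumeClaim:
        claimName: my-request-for-storage   # hypothetical PVC name
```

The pod never names the CSI driver directly: the claim resolves to a PersistentVolume, and that volume identifies the driver.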

Building Your Own CSI Driver for Kubernetes

CSI Driver Components

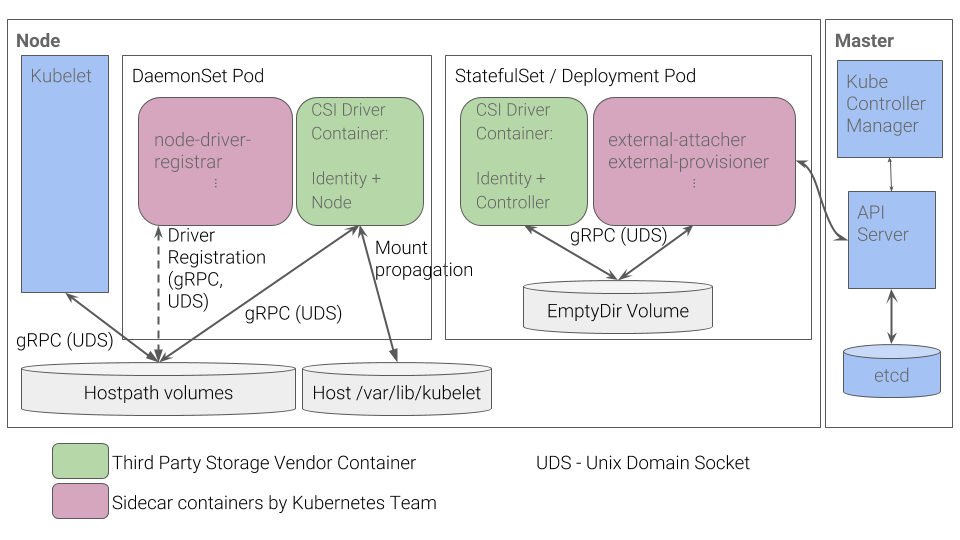

CSI drivers in Kubernetes are typically deployed with controller and per-node components.

Controller Component

The controller plugin is deployed as either a Deployment or a StatefulSet and can run on any node in the cluster. It comprises a CSI driver that implements the CSI Controller service and one or more sidecar containers. A controller sidecar typically watches Kubernetes objects and makes calls to the CSI Controller service.

The controller does not require direct access to a host; it can carry out all operations through external control-plane services and the Kubernetes API. It is possible to deploy multiple copies of a controller component for high availability (HA), but you should implement leader election to make sure that only one controller is active at any given time.

The controller sidecars include the external-provisioner, external-attacher, external-snapshotter and external-resizer. Some sidecars are optional in a given deployment, depending on the requirements described in each sidecar's documentation.

Controller sidecars communicate with the Kubernetes API: they manage Kubernetes events and then send the relevant calls to the CSI driver. These calls go over a UNIX domain socket that the sidecars and the driver share through an emptyDir volume.
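The controller layout described above can be sketched as a Deployment. This is an illustrative outline, not a vendor manifest: the object names, the service account, the driver image, and the driver's `--endpoint` flag are hypothetical, and `<version>` must be replaced with a released sidecar tag. The `--csi-address` and `--leader-election` flags are options of the external-provisioner sidecar.

```yaml
# Sketch of a CSI controller Deployment: one sidecar plus the driver,
# sharing a UNIX domain socket through an emptyDir volume.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: csi-example-controller           # hypothetical name
spec:
  replicas: 2                             # multiple copies for HA
  selector:
    matchLabels:
      app: csi-example-controller
  template:
    metadata:
      labels:
        app: csi-example-controller
    spec:
      serviceAccountName: csi-example-controller-sa   # hypothetical; bound to the sidecar RBAC rules
      containers:
        - name: csi-provisioner
          image: registry.k8s.io/sig-storage/csi-provisioner:<version>
          args:
            - --csi-address=/csi/csi.sock
            - --leader-election           # only one replica acts at a time
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: example-driver
          image: example.com/csi-driver:latest        # hypothetical driver image
          args:
            - --endpoint=unix:///csi/csi.sock         # hypothetical, driver-specific flag
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
      volumes:
        - name: socket-dir
          emptyDir: {}                    # holds the shared socket
```

Other sidecars, such as the external-attacher or external-resizer, would be added as further containers that mount the same `socket-dir` volume.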

Controller sidecars use role-based access control (RBAC) rules to govern their interaction with Kubernetes objects. The sidecar repositories provide examples of RBAC configurations that you can incorporate into your RBAC policies.
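To give a sense of what such rules look like, here is an abridged ClusterRole along the lines of the one published for the external-provisioner. The role name is hypothetical and the rule set is deliberately incomplete; treat the RBAC file in the relevant sidecar repository as the authoritative source for the version you deploy.

```yaml
# Abridged sketch of RBAC rules for a provisioner sidecar.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: example-provisioner-runner       # hypothetical name
rules:
  # Create and delete PVs on behalf of claims.
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  # Watch claims and update them once a volume is provisioned.
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  # Read StorageClass parameters.
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  # Report provisioning progress and failures.
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
```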

Per-node Component

The node plugin should be deployed across all nodes in a cluster, via a DaemonSet. It comprises the CSI driver implementing the CSI Node service and the sidecar container that serves as a node-driver registrar.

The node component communicates with the kubelet, which runs on every node and makes the calls to the CSI Node service. These calls mount and unmount storage volumes from the storage system, making them available for the pod to consume. The kubelet calls the CSI driver over a UNIX domain socket that is shared through a hostPath volume on the host. The node-driver-registrar uses a second UNIX domain socket to register the driver with the kubelet.

The node plugin requires direct access to the host in order to mount volumes. To make file system mounts and block devices available to the kubelet, the CSI driver must use bidirectional mount propagation, which lets the kubelet see the mounts created by the driver container.
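The node-side layout can be sketched as a DaemonSet. As with any such outline, the object names, the driver image, and the driver's `--endpoint` flag are hypothetical, the host paths assume the default kubelet directory `/var/lib/kubelet`, and `<version>` stands for a released sidecar tag. The `--csi-address` and `--kubelet-registration-path` flags are options of the node-driver-registrar.

```yaml
# Sketch of a CSI node DaemonSet: registrar sidecar plus privileged driver.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: csi-example-node                 # hypothetical name
spec:
  selector:
    matchLabels:
      app: csi-example-node
  template:
    metadata:
      labels:
        app: csi-example-node
    spec:
      containers:
        - name: node-driver-registrar
          image: registry.k8s.io/sig-storage/csi-node-driver-registrar:<version>
          args:
            - --csi-address=/csi/csi.sock
            # Path of the driver socket as seen by the kubelet on the host.
            - --kubelet-registration-path=/var/lib/kubelet/plugins/csi-driver.example.com/csi.sock
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        - name: example-driver
          image: example.com/csi-driver:latest        # hypothetical driver image
          args:
            - --endpoint=unix:///csi/csi.sock         # hypothetical, driver-specific flag
          securityContext:
            privileged: true             # required for Bidirectional propagation
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: kubelet-dir
              mountPath: /var/lib/kubelet
              # Lets the kubelet see mounts created inside this container.
              mountPropagation: Bidirectional
      volumes:
        - name: plugin-dir               # driver socket, shared with the kubelet
          hostPath:
            path: /var/lib/kubelet/plugins/csi-driver.example.com
            type: DirectoryOrCreate
        - name: registration-dir         # registration socket for the kubelet
          hostPath:
            path: /var/lib/kubelet/plugins_registry
            type: Directory
        - name: kubelet-dir
          hostPath:
            path: /var/lib/kubelet
            type: Directory
```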

Deployment

Kubernetes does not dictate how a CSI volume driver is packaged, but it does provide recommendations for simplifying the deployment of containerized CSI drivers on Kubernetes.

Image Source: Kubernetes


Storage vendors are advised to take the following steps when deploying a containerized CSI volume driver:

- Create a “CSI volume driver” container that implements the behavior of the volume plugin and exposes a gRPC interface through a UNIX domain socket, as defined in the CSI specification (including the Controller, Node and Identity services).

- Group the volume driver container with additional containers provided by the Kubernetes team, such as external-attacher, external-provisioner, cluster-driver-registrar, node-driver-registrar, external-resizer, external-snapshotter and livenessprobe. These containers facilitate the interaction of the driver container with Kubernetes.

- Direct cluster administrators to deploy the relevant DaemonSet and StatefulSet in order to add support for the vendor’s storage system in the Kubernetes cluster.

Another, potentially simpler, option is to place all the components, including the external-provisioner and external-attacher, in a single DaemonSet. However, this strategy consumes more resources and requires the use of a leader election protocol (such as https://git.k8s.io/contrib/election) for the external-attacher and external-provisioner components.

Enabling Privileged Pods

The Kubernetes cluster must allow privileged pods in order to use CSI drivers. To do so, set the --allow-privileged flag to true for the API server and the kubelet. In certain environments, such as kubeadm, GCE and GKE, this is the default. Note that the kubelet flag applies only to older Kubernetes releases; it was removed from the kubelet in v1.15.

The API server and the kubelet must be launched with the following flags:

$ ./kube-apiserver ... --allow-privileged=true ...

$ ./kubelet ... --allow-privileged=true ...

Enabling Mount Propagation

The Container Storage Interface requires a mount propagation feature that enables mounted volumes to be shared between containers within the same node or pod. The Docker daemon on each cluster node must allow shared mounts for mount propagation to work.

Kubernetes CSI with NetApp Cloud Volumes ONTAP

NetApp Cloud Volumes ONTAP, the leading enterprise-grade storage management solution, delivers secure, proven storage management services on AWS, Azure and Google Cloud. Cloud Volumes ONTAP capacity can scale into the petabytes, and it supports various use cases such as file services, databases, DevOps or any other enterprise workload, with a strong set of features including high availability, data protection, storage efficiencies, Kubernetes integration, and more.

In particular, Cloud Volumes ONTAP supports Kubernetes Persistent Volume provisioning and management requirements of containerized workloads.

Learn more about Kubernetes NFS Provisioning File Services with Cloud Volumes ONTAP and Trident.

Learn more about how Cloud Volumes ONTAP helps to address the challenges of containerized applications in these Kubernetes Workloads with Cloud Volumes ONTAP Case Studies.