January 23, 2018

Topics: Cloud Volumes ONTAP, Azure, Data Migration, Advanced. 8 minute read.

Microsoft Azure continues to grow its presence in the public cloud market. But familiar as many administrators have become with the service, not everyone is aware of the way that its storage solution is structured. As the software-defined cloud-based storage solution for Azure, Microsoft Azure Storage supports Azure deployments, but what exactly is going on underneath its hood?

It turns out that there is a lot more to storage than meets the eye. In this article, we will cover the various components that make up the Microsoft Azure Storage layer, detail how those components interact with each other, and show how Microsoft achieves the redundancy and high availability of its cloud service.

This is part of an extensive series of guides about cloud storage.

Azure Storage Services

Before we can begin the discussion, it is important to be familiar with the storage services that Microsoft offers:

- Blob

- Table

- Queue

- File

- Disk Storage

Object storage, or Blob storage, holds blobs: files such as images, virtual hard disks (VHDs), logs, and database backups. Blobs are stored in containers, which are similar to folders in a Windows file system, and can be accessed from anywhere using URIs, REST APIs, and the Azure SDK client libraries.

Table storage is a NoSQL service from Microsoft in which data is stored in tabular format. Each row represents an entity, and the columns represent the entity’s different properties.

Queue storage is used for message storage and retrieval. This service features RESTful APIs that allow you to build flexible applications and separate functions for better durability across large workloads.

For file storage, Azure offers Azure File Storage. This is a fully managed file share service in which files are accessed over the Server Message Block (SMB) protocol and shares can be mounted by cloud or on-premises systems running Windows, Linux, or macOS.

Disk storage on Azure is offered by Azure Disk Storage, which provides two options: unmanaged and managed. Unmanaged disks are created by tenants under their own storage accounts and are maintained by the tenant. With Azure Managed Disks, the storage accounts are created and maintained by Azure. Disks also come in two performance tiers: Standard (backed by HDDs, for low-IOPS workloads) and Premium (backed by SSDs, for high-IOPS requirements).

Azure Storage also provides several redundancy options based on geography and accessibility.

The Azure Storage Architecture

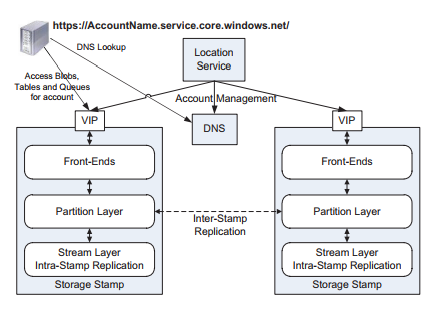

The two main components are storage stamps and location services.

Each storage stamp is a cluster of storage node racks. The number of racks can vary, and each rack sits in a fault domain of its own. A storage stamp is allowed to fill to about 70% utilization, after which the location service moves data to a different stamp using inter-stamp replication.

Location services (LS) themselves are geographically distributed services responsible for the management of the storage stamps. This includes mapping a storage account to a storage stamp, managing the namespace of these storage stamps, and managing the addition of new storage stamps (even for new regions).

When a new Azure Storage account is created, LS maps the storage account to a primary storage stamp (based on the region chosen by the end user), stores the account metadata on the storage stamp (after which the storage stamp starts servicing traffic to that storage account), and updates the DNS to point to the various VIPs (Virtual IPs) exposed by the storage stamp. The endpoint format (URIs) for this is https://accountname.service.core.windows.net/.

For example, a storage account by the name “netapptest7” would have endpoints such as https://netapptest7.blob.core.windows.net/ (which is a blob endpoint) and https://netapptest7.file.core.windows.net/ (which is a file endpoint). These would, in turn, point to different VIPs as updated by the location service.
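The endpoint pattern above can be sketched in a few lines of Python. This is only an illustration of the naming scheme: the helper name `endpoint` and the `SERVICES` tuple are made up for this example, and the code does no DNS lookup or network access.

```python
# Hypothetical helper illustrating the endpoint naming scheme
# https://accountname.service.core.windows.net/ described above.
SERVICES = ("blob", "file", "queue", "table")


def endpoint(account_name: str, service: str) -> str:
    """Build the public endpoint URI for one service of a storage account."""
    if service not in SERVICES:
        raise ValueError(f"unknown service: {service!r}")
    return f"https://{account_name}.{service}.core.windows.net/"


if __name__ == "__main__":
    # Print one endpoint per service for the example account.
    for service in SERVICES:
        print(endpoint("netapptest7", service))
```

Each of these host names is resolved through DNS to a VIP on the storage stamp that the location service assigned to the account.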

Now let’s delve a bit deeper into the storage stamps as they are responsible for the actual storage transactions. A storage stamp has three layers, namely:

- The front-end layer

- The partition layer

- The stream or distributed file system (DFS) layer

Front-End (FE) Layer

The front-end layer comprises a set of stateless servers that serve incoming requests: each server looks up the AccountName, authenticates and authorizes the request, and then forwards it to the relevant partition server in the partition layer. The partition server is chosen according to the partition that the requested object belongs to.

This mapping of partitions is cached by the FE layer in a partition map (map of partition names and servers) object. Some other tasks that the FE layer assists in are caching data that is frequently accessed and streaming large objects from the DFS layer directly.

Partition Layer

The partition layer manages the abstractions mentioned at the beginning of this article (i.e., Blob, Table, or Queue). The partition layer is responsible for tracking storage objects in the object table (OT).

The OT is broken down into contiguous ranges of rows called RangePartitions, which are then spread across several partition servers. Examples of OTs are the account table (which keeps track of the storage account metadata and configuration assigned to that stamp), the schema table (which holds the schema for all OTs), and the partition map table (which keeps track of all the RangePartitions and their mapping to the partition servers). Individual objects within a partition are identified by the ObjectName.

There are three components of the partition layer: The partition manager, the partition servers, and the lock service.

- The partition manager (PM) is responsible for splitting the OT into RangePartitions and keeping track of these in the partition map table.

- The partition servers (PS) are responsible for serving requests to the RangePartitions as dictated by the PM.

- The lock service chooses the PM and assigns a lease to the PS for serving a specific RangePartition.
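To make the idea of RangePartitions concrete, here is a minimal sketch of a partition map lookup. It is not Azure's implementation: the class name `PartitionMap`, the plain string keys, and the server names are assumptions chosen for illustration. It only shows how a sorted list of range boundaries lets a front-end server find the partition server responsible for a key.

```python
from bisect import bisect_right


class PartitionMap:
    """Toy partition map: contiguous key ranges mapped to partition servers."""

    def __init__(self, boundaries, servers):
        # boundaries[i] is the first key NOT served by servers[i];
        # the last server handles every key at or after the final boundary.
        if len(servers) != len(boundaries) + 1:
            raise ValueError("need exactly one more server than boundaries")
        if list(boundaries) != sorted(boundaries):
            raise ValueError("boundaries must be sorted")
        self._boundaries = list(boundaries)
        self._servers = list(servers)

    def lookup(self, object_key):
        """Return the server whose key range contains object_key."""
        return self._servers[bisect_right(self._boundaries, object_key)]


if __name__ == "__main__":
    # Three hypothetical RangePartitions: [.., "g"), ["g", "p"), ["p", ..]
    pmap = PartitionMap(["g", "p"], ["ps-1", "ps-2", "ps-3"])
    for key in ("apple", "grape", "zebra"):
        print(key, "->", pmap.lookup(key))
```

In this picture, splitting a RangePartition amounts to inserting a new boundary and server, which is the kind of bookkeeping the partition manager records in the partition map table.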

Stream Layer

The stream layer is responsible for storing the data bits on the disk and provides an internal interface for the partition layer. The data is distributed for load balancing and replicated for redundancy across servers inside the storage stamp.

The stream layer consists of two main components: Extent nodes (EN) and the stream manager (SM).

The extent nodes (EN) are a set of nodes that contain extent replicas and their blocks. ENs do not understand the abstraction of streams; they keep track of extents (each extent is treated as a file), their data blocks, and an index that maps extent offsets to blocks and their file locations. Each EN also keeps track of the peer replicas for the extents it holds.

The stream manager (SM) is a standard Paxos cluster that keeps track of the stream namespace, the mapping of extents to streams, and the distribution of extents across extent nodes. The SM polls the ENs and checks that each extent complies with the replication policy (three replicas by default).

If the SM detects that an extent is stored on fewer ENs than the policy requires, it starts a lazy re-replication to ENs spread across different fault domains. This is how the stream layer works: Extents are the smallest units of replication in the stream layer and are made up of a sequence of blocks. Blocks are the smallest unit of data for reading and writing, and they are appended to extents.
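The compliance check can be illustrated with a small sketch. The function below is hypothetical (its name, arguments, and the EN and rack identifiers are all invented for this example) and greatly simplified: given the ENs that already hold an extent, it picks additional ENs in fault domains that are not yet used until the replication factor is met.

```python
def pick_replication_targets(current, fault_domain_of, replication_factor=3):
    """Return ENs to copy an under-replicated extent to.

    current: list of EN ids that already hold a replica.
    fault_domain_of: dict mapping every known EN id to its fault domain.
    """
    missing = replication_factor - len(current)
    if missing <= 0:
        return []  # already compliant with the policy
    used_domains = {fault_domain_of[en] for en in current}
    targets = []
    for en in sorted(fault_domain_of):  # sorted only for deterministic output
        if len(targets) == missing:
            break
        domain = fault_domain_of[en]
        if en in current or domain in used_domains:
            continue  # never place two replicas in the same fault domain
        targets.append(en)
        used_domains.add(domain)
    return targets


if __name__ == "__main__":
    domains = {"en1": "rackA", "en2": "rackA", "en3": "rackB",
               "en4": "rackC", "en5": "rackC"}
    print(pick_replication_targets(["en1"], domains))
```

If there are not enough free fault domains, this sketch simply returns fewer targets than requested; a real system would need a policy for that case.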

The partition layer is responsible for appending blocks, tracking extents and controlling the size of each block; it’s the job of the stream layer to store and replicate all of those files as streams, which are lists that point to extents. The streams are maintained by the stream manager and are presented to the partition layer in the form of files.
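The relationship between blocks, extents, and streams can be sketched as a small append-only data structure. This is a toy model, not Azure's code: the class names, the tiny `max_extent_bytes` limit, and the rule of sealing an extent once it is full are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Extent:
    """An append-only sequence of blocks."""
    blocks: list = field(default_factory=list)
    sealed: bool = False

    def size(self) -> int:
        return sum(len(block) for block in self.blocks)


@dataclass
class Stream:
    """An ordered list of extents; only the last extent accepts appends."""
    name: str
    max_extent_bytes: int = 8  # tiny illustrative limit (assumption)
    extents: list = field(default_factory=lambda: [Extent()])

    def append(self, block: bytes):
        """Append a block and return its (extent index, block index)."""
        last = self.extents[-1]
        if last.blocks and last.size() + len(block) > self.max_extent_bytes:
            last.sealed = True          # a full extent becomes immutable
            last = Extent()
            self.extents.append(last)   # the stream grows by one extent
        last.blocks.append(block)
        return len(self.extents) - 1, len(last.blocks) - 1

    def read_all(self) -> bytes:
        """Present the stream as one contiguous file."""
        return b"".join(b for extent in self.extents for b in extent.blocks)


if __name__ == "__main__":
    stream = Stream("demo")
    for block in (b"abcd", b"efgh", b"ij"):
        print(stream.append(block))
    print(stream.read_all())
```

The `read_all` method mirrors the point made above: although the data lives in separate extents, the partition layer sees the stream as a single file.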

Replication in Azure Storage

There are two types of replication: Intra-stamp replication and inter-stamp replication. Both of these are used for specific purposes.

- Intra-stamp replication: This type of synchronous replication is handled by the stream layer and replicates data within a storage stamp. Because the data is spread across separate fault and update domains, redundancy is maintained in case of disk, server, or rack failures. The unit of replication is the blocks that make up a storage object.

- Inter-stamp replication: This type of asynchronous replication is handled by the partition layer and provides geo-redundancy in case of a disaster. Either the whole object or its delta changes are replicated to a different stamp, which aids both disaster recovery and the migration of data between storage stamps. This enables redundancy options such as GRS and ZRS.
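The difference between the two replication types is mainly about when a write is acknowledged. The following sketch, with invented class and method names, models that timing only: a write is copied to every replica inside the primary stamp before it is acknowledged, while the copy to a secondary stamp is queued and shipped later.

```python
from collections import deque


class Stamp:
    """Toy storage stamp holding a fixed number of in-memory replicas."""

    def __init__(self, name, replica_count=3):
        self.name = name
        self.replicas = [dict() for _ in range(replica_count)]

    def write(self, key, value):
        # Intra-stamp: every replica is updated before write() returns.
        for replica in self.replicas:
            replica[key] = value


class GeoReplicatedAccount:
    """Toy account with a primary stamp and an asynchronously fed secondary."""

    def __init__(self, primary, secondary):
        self.primary = primary
        self.secondary = secondary
        self.pending = deque()  # changes not yet shipped to the secondary

    def put(self, key, value):
        self.primary.write(key, value)      # synchronous, inside the stamp
        self.pending.append((key, value))   # asynchronous, across stamps
        return "ack"

    def drain(self):
        """Ship all queued changes to the secondary; return how many."""
        shipped = 0
        while self.pending:
            key, value = self.pending.popleft()
            self.secondary.write(key, value)
            shipped += 1
        return shipped


if __name__ == "__main__":
    account = GeoReplicatedAccount(Stamp("primary"), Stamp("secondary"))
    print(account.put("blob-1", b"data"))
    print("secondary has it yet?", "blob-1" in account.secondary.replicas[0])
    account.drain()
    print("after drain:", "blob-1" in account.secondary.replicas[0])
```

Between `put` and `drain`, the secondary lags behind the primary, which is the trade-off asynchronous geo-replication accepts in exchange for low write latency.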

Azure Storage components and replication (Source).

Final Note

In addition to the different components that make up the Azure Storage layer, there are several other factors that help Microsoft guarantee a 99.9% SLA. Some of these factors are:

- Spindle anti-starvation (an IO scheduling mechanism where new IO is not scheduled on spindle disks that have more than 100ms of pending IO).

- Journaling (which reserves the SSD disk for journaling the data written to the EN).

- Read load-balancing (EN determines if the read can be completed within a deadline and this helps the SM to choose a different extent if a deadline cannot be met).
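As an illustration of the first item, spindle anti-starvation boils down to a threshold check before scheduling new IO. The function and disk names below are hypothetical; only the 100ms limit comes from the description above.

```python
PENDING_IO_LIMIT_MS = 100  # threshold quoted in the list above


def eligible_spindles(pending_ms_by_disk, limit_ms=PENDING_IO_LIMIT_MS):
    """Return the disks that may still accept new IO.

    pending_ms_by_disk: dict mapping a disk id to its pending IO in ms.
    Disks with more than limit_ms of pending IO are skipped.
    """
    return sorted(
        disk for disk, pending_ms in pending_ms_by_disk.items()
        if pending_ms <= limit_ms
    )


if __name__ == "__main__":
    pending = {"disk0": 20, "disk1": 150, "disk2": 100}
    print(eligible_spindles(pending))
```

Here `disk1` is skipped because it already has more than 100ms of pending IO, so new requests go to the less loaded disks instead.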

With all these moving parts, it’s clear that there is a lot going on behind the scenes. Companies migrating to Azure have a lot to figure out, and hopefully the outline given above does a little bit to make this part of Microsoft’s public cloud service more understandable.

For users who still want to know more about Azure and how it works, NetApp can help you leverage Azure storage as your cloud provider, both with its new NFS services for Azure and with its premier data management solution, Cloud Volumes ONTAP (formerly ONTAP Cloud) for Azure.

Want to learn more about NetApp’s fully managed file service for Azure? Read this quick breakdown on the benefits and learn more at Azure NetApp Files.
