January 26, 2020

Topics: Azure NetApp Files, Advanced | 5 minute read

Editor's note: This blog, originally published in early 2019, has been updated with new (and improved!) performance data to reflect Azure NetApp Files' improved performance.

Enterprises are moving to the cloud (or rather, they’re continuing to move), and Azure is happy to have them. Some companies are selective in their migration, moving only applications that meet specific criteria, such as those without legal restrictions, while others have an all-in mandate. The task of transformation from custom-built data centers to general-purpose cloud is not easy, especially for applications that weren’t born in the cloud. From the retail firm with its enterprise-class databases demanding gigabytes per second of bandwidth, to the financial firm running I/O-hungry Monte Carlo simulations requiring a single namespace, to the genomics firm running highly demanding scale-out HPC workloads, the stakes are high. But is the cloud ready? Microsoft Azure certainly is, thanks in large part to Azure NetApp Files!

Industry-leading NetApp® ONTAP® data management software is the foundation of Microsoft’s new native first-party file protocol service, Azure NetApp Files. Placed in the Azure data center for a consistent low-latency experience regardless of region, Azure NetApp Files (a NoOps service) is built to provide an on-premises NFS and (new!) SMB protocol experience. Now you can give your born-in-the-cloud applications access to large amounts of I/O with sub-millisecond latency or a large amount of bandwidth for scale-up or scale-out environments.

Azure NetApp Files is a fully managed service built for simplicity, Azure performance, and scalability that will take your business, its applications, and its workflows to the cloud faster than ever before.

Exploring Performance

It’s often easiest to understand the capabilities of a system by way of an example. The rest of this post explores the capabilities of Azure NetApp Files by using a theoretical application, Acme AppX.

Acme AppX is a home-grown Linux-based application built for the cloud. This app is designed to scale linearly by adding virtual machines as the need for compute power increases. Data access is the name of the game for Acme AppX; rapid accessibility of the data lake is critical, and shared storage is the best option. The I/O patterns of this application are sometimes random and sometimes sequential. When random, low latency is needed for its large amounts of I/O, and when sequential, large amounts of bandwidth are desirable. The random component of the application leads the application admin to rule out object storage from consideration. The team has tried to build their own NFS server farm, but they’re frustrated by the complexity of having to manage the environment. Most shared file services are ruled out as well: they are self-managed (and therefore undesirable), they don’t scale far enough (offering a few tens of thousands of operations per second at best), or both.

Azure claims that the newly launched Azure NetApp Files service is different—fully managed and scalable enough to meet the demands of most applications. But what does that mean? Let’s find out.

For more in-depth performance information than is provided here, please download the paper “Azure NetApp Files: Getting the Most Out of Your Cloud Storage”.

The Workload Generator

The results documented below come from fio summary files. Fio is a command-line utility that was created to help engineers and customers generate disk I/O workloads for validating storage performance. We ran the tool in a client-server configuration, with a single virtual machine acting as both master and client and many dedicated client virtual machines, which makes this a scale-out test.
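To make the workload generator a little more concrete, here is a minimal Python sketch that assembles fio command lines for the block sizes and access patterns this post describes (64KiB sequential and 4KiB random, reads and writes). It only builds and prints the commands; nothing is executed. The mount point, queue depth, job count, file size, and runtime are assumptions chosen for illustration, not the settings used in the tests reported here.

```python
import shlex

# Hypothetical NFS mount point for the volume under test (an assumption).
MOUNT_POINT = "/mnt/acmeappx"

# Access patterns and block sizes named in the post; the job names are made up.
WORKLOADS = {
    "seq-read-64k": ("read", "64k"),
    "seq-write-64k": ("write", "64k"),
    "rand-read-4k": ("randread", "4k"),
    "rand-write-4k": ("randwrite", "4k"),
}


def fio_command(name, rw, block_size, directory, *,
                runtime_s=60, iodepth=16, numjobs=4, size="4g"):
    """Build an fio argument list for one workload. Nothing is executed here.

    The tuning values (runtime, iodepth, numjobs, size) are illustrative
    defaults, not the values behind the published results.
    """
    return [
        "fio",
        f"--name={name}",
        f"--rw={rw}",               # read, write, randread, or randwrite
        f"--bs={block_size}",       # operation size
        f"--directory={directory}",
        f"--size={size}",           # per-job file size
        "--ioengine=libaio",
        "--direct=1",               # bypass the client page cache
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",        # one summary per job group
    ]


if __name__ == "__main__":
    for job_name, (rw, bs) in WORKLOADS.items():
        print(shlex.join(fio_command(job_name, rw, bs, MOUNT_POINT)))
```

The sketch covers a single client only. Fio also has a `--server` and `--client` mode for driving many machines from one controller, which is the style of setup described above, but that orchestration is not reproduced here.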

The Work

The tests were designed to identify the limits that the hypothetical Acme AppX may experience, as well as expose the response time curves up to those limits. We ran the following test scenarios to identify the limits:

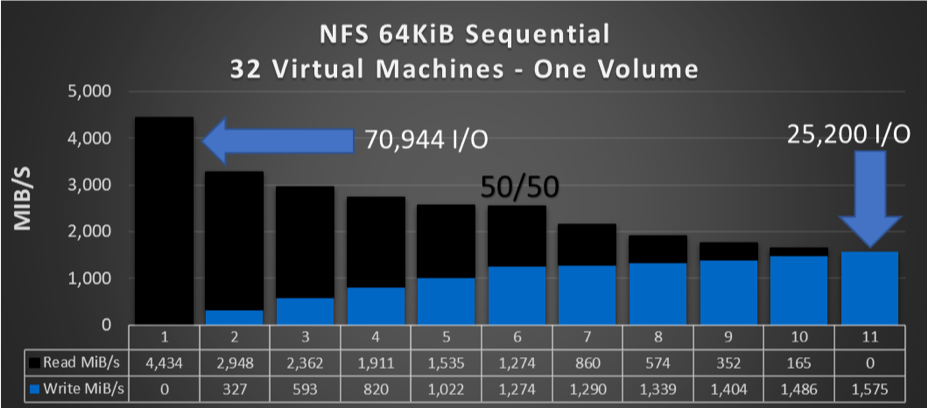

The first graph below represents a 64 kibibyte (KiB) sequential workload and the second a 4KiB random workload. As shown, a single volume is capable of handling between ~4,500MiB/s for sequential reads and ~1,600MiB/s for sequential writes. Equally, a volume can achieve anywhere from ~470,000 IOPS for random reads to ~135,000 IOPS for random writes.
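Because the sequential results are quoted in MiB/s and the random results in operations per second, it can help to convert between the two. The short Python sketch below does that arithmetic using only the figures quoted above; the function names are made up for illustration.

```python
KIB_PER_MIB = 1024


def iops_to_mib_per_s(iops, op_size_kib):
    """Bandwidth implied by a given operation rate and operation size."""
    return iops * op_size_kib / KIB_PER_MIB


def mib_per_s_to_iops(mib_per_s, op_size_kib):
    """Operation rate implied by a given bandwidth and operation size."""
    return mib_per_s * KIB_PER_MIB / op_size_kib


if __name__ == "__main__":
    # 4KiB random results expressed as bandwidth.
    print(iops_to_mib_per_s(470_000, 4))  # random reads
    print(iops_to_mib_per_s(135_000, 4))  # random writes
    # 64KiB sequential results expressed as operations per second.
    print(mib_per_s_to_iops(4_500, 64))   # sequential reads
    print(mib_per_s_to_iops(1_600, 64))   # sequential writes
```

In other words, ~470,000 random 4KiB reads per second move roughly 1,836MiB/s, while ~4,500MiB/s of 64KiB sequential reads corresponds to about 72,000 operations per second. The two workloads stress the volume in quite different ways.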

Volume-Level Performance Expectations

Service Levels and Quotas

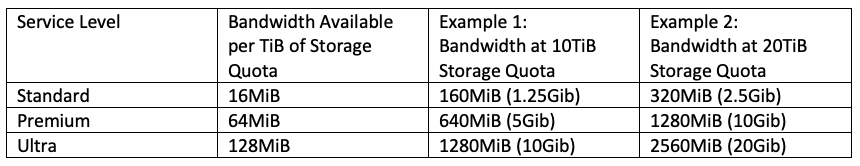

Individual volume bandwidth is provisioned based on a combination of service level and quota. There are three service levels: Standard, Premium, and Ultra. Each service level allocates a different amount of bandwidth per TiB of provisioned capacity. The provisioned capacity is known in the Azure NetApp Files portal as the storage quota.

Note: Service levels are designed to answer business needs. The Standard service level is intended for situations where capacity is the principal need, while the Ultra service level is intended for use where bandwidth is the principal need. The Premium service level strikes a balance between the two.

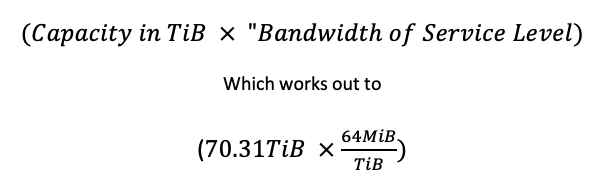

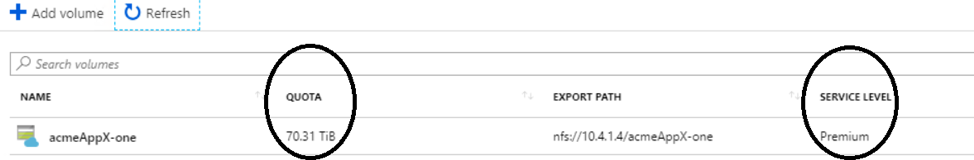

The volume acmeAppX-one, shown below, has 4,500MiB/s of provisioned bandwidth, the highest throughput attained by any of the workloads driven against a single volume. To see that 4,500MiB/s of bandwidth has been made available, use the following formula:
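As a rough illustration of that relationship, provisioned bandwidth is the per-TiB rate of the service level multiplied by the volume quota in TiB. The Python sketch below works through it. The per-TiB rates (16, 64, and 128MiB/s for Standard, Premium, and Ultra) are taken from Azure's public service-level documentation rather than from this post, so treat them as an assumption and check the current documentation. The post does not state the quota of acmeAppX-one, so the quotas computed here are simply what the arithmetic implies.

```python
# Assumed per-TiB bandwidth for each service level, in MiB/s per TiB of quota.
# These values come from Azure's public documentation, not from this post.
MIB_PER_S_PER_TIB = {"Standard": 16, "Premium": 64, "Ultra": 128}


def provisioned_bandwidth_mib_s(service_level, quota_tib):
    """Provisioned bandwidth = per-TiB rate of the service level x quota in TiB."""
    return MIB_PER_S_PER_TIB[service_level] * quota_tib


def quota_for_bandwidth_tib(service_level, target_mib_s):
    """Quota (TiB) at which a service level provisions the target bandwidth."""
    return target_mib_s / MIB_PER_S_PER_TIB[service_level]


if __name__ == "__main__":
    target = 4_500  # MiB/s, the single-volume figure quoted in the post
    for level in MIB_PER_S_PER_TIB:
        quota = quota_for_bandwidth_tib(level, target)
        print(f"{level}: {quota:.2f} TiB of quota for {target} MiB/s")
```

The inverse function is included because sizing usually runs in that direction: start from the bandwidth the application needs, then work out how much quota a given service level requires to provide it. Whether a quota of that size is actually allowed for a single volume is a separate question this sketch does not check.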

Throughput-Intensive Workloads (NFS)

Using fio and a combination of 32 virtual machines, the following throughput numbers were achieved against the acmeAppX-one volume.

I/O-Intensive Workloads

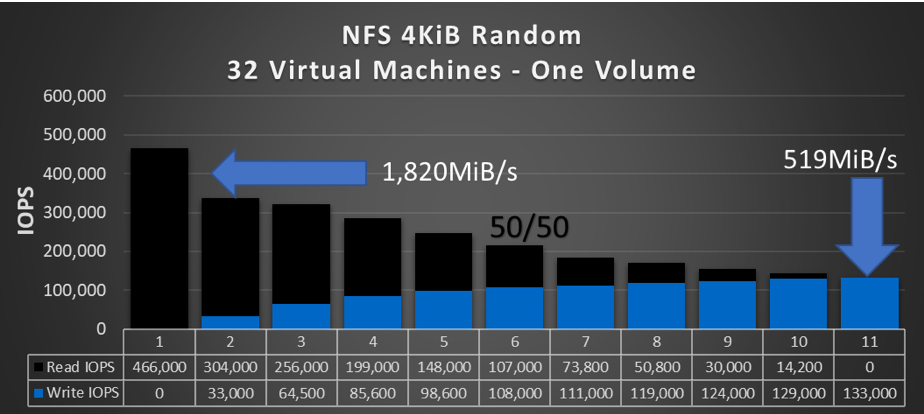

Again, using fio and a combination of 32 storage virtual machines, the following I/O numbers were achieved against the acmeAppX-one volume.

As you can see from the test results, with Azure NetApp Files, you get a sizeable boost in Microsoft Azure performance for your file-based workloads. Your latency-sensitive workloads (think databases) can get sub-millisecond response times (adjacent VMs), driving your transactional performance over 450k IOPS for a single volume, a level previously achievable only in the data center and on dedicated equipment. And for your throughput-sensitive applications (think streaming and imaging applications), you now get about 4,500MiB/s (roughly 4.4GiB/s) of throughput, a level never seen before in the cloud. Reflecting on the results of this Acme AppX example, I can see a whole new possibility for high-performance production applications poised to run on Azure.

Do you want to try this out for yourself? Sign up for your own Azure NetApp Files access and see the performance you can get for your applications. Register today!