More about Kubernetes on AWS

- EKS Back Up: How to Back Up and Restore EKS with Velero

- How to Provide Persistent Storage for AWS EKS with Cloud Volumes ONTAP

- AWS Prometheus Service: Getting to Know the New Amazon Managed Service for Prometheus

- How to Build a Multicloud Kubernetes Cluster in AWS and Azure Step by Step

- AWS EKS: 12 Key Features and 4 Deployment Options

- AWS Container Features and 3 AWS Container Services

- AWS ECS in Depth: Architecture and Deployment Options

- EKS vs AKS: Head-to-Head

- AWS ECS vs EKS: 6 Key Differences

- Kubernetes on AWS: 3 Container Orchestration Options

- AWS EKS Architecture: Clusters, Nodes, and Networks

- EKS vs GKE: Managed Kubernetes Giants Compared

- AWS ECS vs Kubernetes: An Unfair Comparison?

- AWS Kubernetes Cluster: Quick Setup with EC2 and EKS

May 13, 2021

Topics: Cloud Volumes ONTAP, AWS, Kubernetes | Elementary | 5 minute read

What is AWS EKS?

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed AWS Kubernetes service that scales, manages, and deploys containerized applications. It typically runs in the Amazon public cloud, but can also be deployed on premises. The Kubernetes management infrastructure of Amazon EKS runs across multiple Availability Zones (AZ). AWS EKS is certified Kubernetes-conformant, which means you can integrate EKS with your existing tools.

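In this article, you will learn:

- How Amazon EKS works

- AWS EKS components: clusters, nodes, and networking

- AWS EKS storage with Cloud Volumes ONTAP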

This is part of our series of articles about Kubernetes on AWS.

How Does Amazon EKS Work?

EKS clusters are composed of two main components: a control plane and worker nodes. The control plane of each cluster runs in its own Virtual Private Cloud (VPC), which is fully managed by AWS.

The control plane is composed of three master nodes, each running in a different AZ to ensure high availability. Incoming traffic directed to the Kubernetes API passes through an AWS Network Load Balancer (NLB).

Worker nodes run on Amazon EC2 instances located in a VPC that is not managed by AWS. You can control and configure the VPC allocated for worker nodes. You can use SSH to give your existing automation access to worker nodes, or to provision them.

There are two main deployment options. You can deploy one cluster for each environment or application. Alternatively, you can define IAM security policies and Kubernetes namespaces to deploy one cluster for multiple applications.
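
As a rough sketch of the shared-cluster option, the snippet below builds one Kubernetes Namespace manifest per application using only the Python standard library. The application names, the `team` label, and the helper `namespace_manifest` are hypothetical and chosen purely for illustration. IAM policies are not covered here.

```python
import json


def namespace_manifest(app_name: str, team: str) -> dict:
    """Return a Namespace manifest (as a dict) that isolates one application."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": app_name,
            # Hypothetical label used to group namespaces by owning team.
            "labels": {"team": team},
        },
    }


# Hypothetical applications that share a single cluster.
apps = {"billing": "finance", "storefront": "web"}
manifests = [namespace_manifest(app, team) for app, team in apps.items()]

# A v1 List wraps several objects in one document.
namespace_list = {"apiVersion": "v1", "kind": "List", "items": manifests}
print(json.dumps(namespace_list, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON manifests as well as YAML.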

To restrict traffic between the control plane and a cluster, EKS provides Amazon VPC network policies. Only authorized clusters and accounts, defined by Kubernetes role-based access control (RBAC), can view or communicate with control plane components.

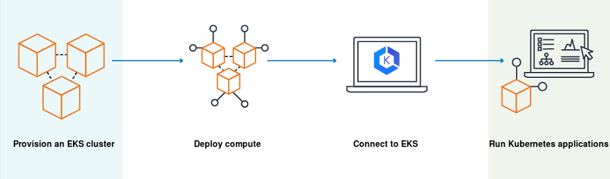

The following diagram illustrates the process of deploying a cluster on EKS - you instruct EKS to provision a cluster, cloud resources are provisioned in the background, and you can then connect to the Kubernetes cluster and run your workloads.

Image Source: AWS

Image Source: AWS

AWS EKS Components

The AWS EKS architecture is composed of the following main components: clusters, nodes, and networking.

Amazon EKS Clusters

Clusters are made up of a control plane and EKS nodes.

EKS control plane

The control plane runs on a dedicated set of EC2 instances in an Amazon-managed AWS account, and provides an API endpoint that can be accessed by your applications. It runs in single-tenant mode, and is responsible for running Kubernetes control plane components, such as the API server and etcd.

Data on etcd is encrypted using AWS Key Management Service (KMS). Kubernetes master nodes are distributed across several AWS Availability Zones (AZs), and traffic is managed by Elastic Load Balancing (ELB).

EKS nodes

Kubernetes worker nodes run on EC2 instances in your organization’s AWS account. They use the API endpoint to connect to the control plane, via a certificate file. A unique certificate is used for each cluster.
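
To make the pairing of API endpoint and cluster certificate more concrete, here is a minimal sketch of a client-side kubeconfig built as a Python dict. It shows how a client such as kubectl is typically pointed at a cluster; node bootstrapping on EKS uses its own mechanism, which is not shown. The cluster name, endpoint URL, certificate bytes, and the helper `build_kubeconfig` are placeholders, not values from a real cluster.

```python
import base64
import json


def build_kubeconfig(cluster_name: str, endpoint: str, ca_pem: bytes) -> dict:
    """Assemble a kubeconfig dict that ties one API endpoint to one CA certificate."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{
            "name": cluster_name,
            "cluster": {
                "server": endpoint,
                # kubeconfig stores the CA certificate base64-encoded.
                "certificate-authority-data": base64.b64encode(ca_pem).decode("ascii"),
            },
        }],
        "users": [{
            "name": cluster_name,
            "user": {
                # Delegates authentication to the AWS CLI's `aws eks get-token`.
                "exec": {
                    "apiVersion": "client.authentication.k8s.io/v1beta1",
                    "command": "aws",
                    "args": ["eks", "get-token", "--cluster-name", cluster_name],
                },
            },
        }],
        "contexts": [{
            "name": cluster_name,
            "context": {"cluster": cluster_name, "user": cluster_name},
        }],
        "current-context": cluster_name,
    }


# Placeholder values; a real cluster supplies its own endpoint and certificate.
PLACEHOLDER_CA = b"-----BEGIN CERTIFICATE-----\nPLACEHOLDER\n-----END CERTIFICATE-----\n"
cfg = build_kubeconfig("demo-cluster", "https://EXAMPLE.eks.amazonaws.com", PLACEHOLDER_CA)
print(json.dumps(cfg, indent=2))
```

Because each cluster has its own certificate, a kubeconfig that covers several clusters carries one `clusters` entry per cluster.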

Related content: AWS Kubernetes Cluster: Quick Setup with EC2 and EKS

Amazon EKS Nodes

Amazon EKS clusters can schedule pods using three primary methods.

Self-Managed Nodes

A “node” in EKS is an Amazon EC2 instance that Kubernetes pods can be scheduled on. Nodes connect to the EKS cluster’s API endpoint. Nodes are organized into node groups. All the EC2 instances in a node group must have the same:

- Amazon instance type

- Amazon Machine Image (AMI)

- IAM role

You can have several node groups in a cluster, each representing a different type of instance or instances with a different role.
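
The uniformity rule for node groups can be sketched as a small check. The `Instance` class, the instance IDs, AMI IDs, and role name below are hypothetical stand-ins, not real AWS objects or API calls.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Instance:
    """Minimal stand-in for an EC2 instance in a node group (hypothetical)."""
    instance_id: str
    instance_type: str
    ami_id: str
    iam_role: str


def is_uniform_node_group(instances: Sequence[Instance]) -> bool:
    """True when every instance shares one instance type, AMI, and IAM role."""
    signatures = {(i.instance_type, i.ami_id, i.iam_role) for i in instances}
    return len(signatures) <= 1


web_group = [
    Instance("i-0001", "m5.large", "ami-aaaa", "eks-node-role"),
    Instance("i-0002", "m5.large", "ami-aaaa", "eks-node-role"),
]
# Adding an instance of a different type breaks the rule for this group.
mixed_group = web_group + [Instance("i-0003", "c5.xlarge", "ami-aaaa", "eks-node-role")]

print(is_uniform_node_group(web_group))    # True
print(is_uniform_node_group(mixed_group))  # False
```

In practice the `c5.xlarge` instance would belong in a second node group of its own.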

Managed Node Groups

Amazon EKS provides managed node groups with automated lifecycle management. This lets you automatically create, update, or shut down nodes with one operation. EKS uses Amazon’s latest Linux AMIs optimized for use with EKS. When you terminate nodes, EKS gracefully drains them to make sure there is no interruption of service. You can easily apply Kubernetes labels to an entire node group for management purposes.

Managed nodes are operated using EC2 Auto Scaling groups that are managed by the Amazon EKS service. You can define in which availability zones the groups should run. There are several ways to launch managed node groups, including the EKS console, eksctl, the AWS CLI, the AWS API, or AWS automation tools such as CloudFormation.
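
As an illustration of the eksctl route, the sketch below assembles a cluster config with one managed node group and sanity-checks its scaling bounds. The field names follow the eksctl `ClusterConfig` schema as commonly documented, but should be verified against your eksctl version. The cluster name, region, zones, sizes, label, and the helper `validate_scaling` are assumptions made for this example.

```python
import json

cluster_config = {
    "apiVersion": "eksctl.io/v1alpha5",
    "kind": "ClusterConfig",
    "metadata": {"name": "demo-cluster", "region": "us-east-1"},
    "managedNodeGroups": [
        {
            "name": "general-purpose",
            "instanceType": "m5.large",
            "minSize": 2,
            "desiredCapacity": 3,
            "maxSize": 5,
            # Availability zones in which this group's nodes should run.
            "availabilityZones": ["us-east-1a", "us-east-1b"],
            # Kubernetes labels applied to every node in the group.
            "labels": {"workload": "general"},
        }
    ],
}


def validate_scaling(group: dict) -> None:
    """Raise ValueError unless minSize <= desiredCapacity <= maxSize."""
    if not group["minSize"] <= group["desiredCapacity"] <= group["maxSize"]:
        raise ValueError(f"inconsistent scaling bounds in node group {group['name']!r}")


for node_group in cluster_config["managedNodeGroups"]:
    validate_scaling(node_group)

print(json.dumps(cluster_config, indent=2))
```

JSON is valid YAML, so the output should be usable where a YAML config file is expected, for example with `eksctl create cluster -f`, though this is worth confirming before relying on it.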

AWS Fargate

You can use AWS Fargate, a serverless container service, to run pods without managing the underlying server infrastructure. Fargate bills you only for the vCPU and memory resources provisioned for your pods, rather than for whole EC2 instances. It provisions computing resources according to what your pods actually need.
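
The billing model can be approximated with simple arithmetic: cost scales with vCPUs, memory, and running time. The function `fargate_cost` and the two hourly rates below are hypothetical, used only to show the shape of the calculation; they are not actual AWS prices.

```python
def fargate_cost(vcpu: float, memory_gb: float, hours: float,
                 vcpu_hour_rate: float, gb_hour_rate: float) -> float:
    """Estimate cost as hours * (vCPU charge per hour + memory charge per hour)."""
    return hours * (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate)


# Hypothetical rates, NOT real AWS pricing.
VCPU_HOUR_RATE = 0.04
GB_HOUR_RATE = 0.004

# A pod with 0.5 vCPU and 1 GB of memory running for 10 hours.
estimate = fargate_cost(0.5, 1.0, 10.0, VCPU_HOUR_RATE, GB_HOUR_RATE)
print(f"Estimated cost: ${estimate:.2f}")
```

With this model, a pod that runs for half as long costs half as much, which is the main contrast with paying for an always-on EC2 instance.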

Amazon EKS Networking

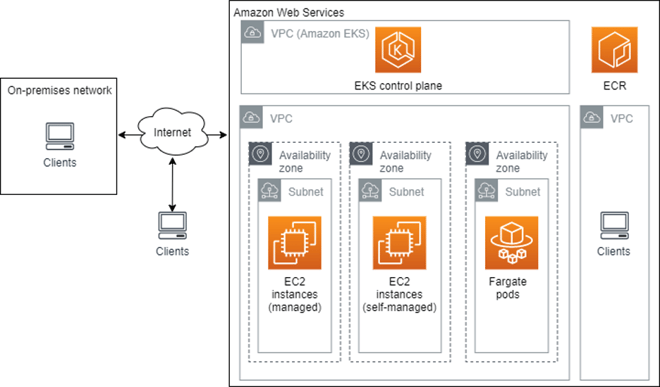

The following diagram shows the network architecture of an EKS cluster:

Image Source: AWS

Image Source: AWS

An Amazon EKS cluster operates in a Virtual Private Cloud (VPC), a secure private network within an Amazon data center. EKS deploys all resources to existing subnets in a VPC you select, in one AWS Region.

Network interfaces used by the EKS control plane

The EKS control plane runs in an Amazon-managed VPC. It creates and manages network interfaces in your account related to each EKS cluster you create. EC2 and Fargate instances use these network interfaces to connect to the EKS control plane.

By default, EKS exposes a public endpoint. If you want additional security for your cluster, you can enable a private endpoint, and/or limit access to specific IP addresses. You can configure connectivity between on-premises networks or other VPCs and the VPC used by your EKS cluster.
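
The endpoint options can be illustrated with a small sketch. The three keys in `endpoint_access` mirror the endpoint settings in the EKS cluster VPC configuration. The CIDR range and client addresses come from IP ranges reserved for documentation, and `is_client_allowed` is a hypothetical helper that imitates the allowlist check locally; it does not call AWS.

```python
import ipaddress
from typing import Iterable

# Public and private endpoints both enabled, with public access
# limited to one documentation-range CIDR block (example value).
endpoint_access = {
    "endpointPublicAccess": True,
    "endpointPrivateAccess": True,
    "publicAccessCidrs": ["203.0.113.0/24"],
}


def is_client_allowed(client_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Return True when client_ip falls inside any of the allowed CIDR blocks."""
    address = ipaddress.ip_address(client_ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


print(is_client_allowed("203.0.113.7", endpoint_access["publicAccessCidrs"]))   # True
print(is_client_allowed("198.51.100.9", endpoint_access["publicAccessCidrs"]))  # False
```

Setting `endpointPublicAccess` to `False` while keeping the private endpoint enabled would restrict API access to traffic from within the VPC or connected networks.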

Networking for EKS nodes

Each EC2 instance used by the EKS cluster exists in one subnet. You have two options for defining networking:

- Use AWS CloudFormation templates to create subnets. In this case, nodes in public subnets are given a public IP, and a private IP from the CIDR block used by the subnet.

- Use custom networking via the Container Network Interface (CNI). This lets you assign IP addresses to pods from any subnet, even if the EC2 instance is not part of that subnet. You must enable custom networking when you launch the nodes.
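
To show how subnet CIDR blocks determine private IP addresses, the sketch below splits a VPC range into four subnets and looks up which subnet an address belongs to. The VPC range, the subnet names, the public/private split, and the helper `find_subnet` are assumptions made for this example.

```python
import ipaddress
from typing import Optional

# Hypothetical VPC range, divided into four equal /18 blocks.
vpc = ipaddress.ip_network("10.0.0.0/16")
blocks = list(vpc.subnets(new_prefix=18))

# Illustrative layout: two public and two private subnets.
subnets = {
    "public-a": blocks[0],   # 10.0.0.0/18
    "public-b": blocks[1],   # 10.0.64.0/18
    "private-a": blocks[2],  # 10.0.128.0/18
    "private-b": blocks[3],  # 10.0.192.0/18
}


def find_subnet(ip: str) -> Optional[str]:
    """Return the name of the subnet containing ip, or None if outside the VPC."""
    address = ipaddress.ip_address(ip)
    for name, network in subnets.items():
        if address in network:
            return name
    return None


print(find_subnet("10.0.70.15"))   # public-b
print(find_subnet("10.0.200.1"))   # private-b
print(find_subnet("192.168.1.1"))  # None
```

With custom networking, a pod address could come from a different subnet than the one its node lives in, so a lookup like this would be run against the pod's subnet rather than the node's.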

AWS EKS Storage with Cloud Volumes ONTAP

NetApp Cloud Volumes ONTAP, the leading enterprise-grade storage management solution, delivers secure, proven storage management services on AWS, Azure, and Google Cloud. Cloud Volumes ONTAP supports a capacity of up to 368 TB and a range of use cases such as file services, databases, DevOps, or any other enterprise workload. Its features include high availability, data protection, storage efficiencies, Kubernetes integration, and more.

In particular, Cloud Volumes ONTAP supports Kubernetes Persistent Volume provisioning and management requirements of containerized workloads.

Learn more about how Cloud Volumes ONTAP helps to address the challenges of containerized applications in these Kubernetes Workloads with Cloud Volumes ONTAP Case Studies.