February 21, 2022

Topics: Azure NetApp Files, Astra, Advanced, Kubernetes Protection | 11 minute read

Introduction

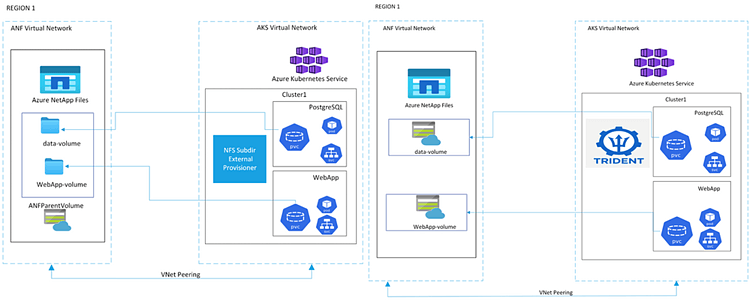

When it comes to accessing Azure NetApp Files volumes for Kubernetes applications, there are a few ways to achieve it:

- Static creation of volumes, handled outside Kubernetes. ANF volumes are created and then exposed to Kubernetes apps by providing connectivity info on a per-volume basis.

- Using an automatic provisioner, such as the NFS Subdir External Provisioner.

- Using Astra Trident, NetApp’s preferred solution for on-demand, Kubernetes-native ANF storage.

This blog walks you through the options, as well as factors to consider when selecting a persistent volume provisioner.

Terms to Remember

Before jumping into the specifics, here are some key concepts to remember:

- Azure NetApp Files (ANF) is a shared, first-party Azure file-storage service designed for performance-intensive workloads on Azure. ANF volumes are created in capacity pools, each with a service level that sets the throughput available per TiB of capacity (16, 64, or 128 MB/sec/TiB). Every volume is attached to an Azure virtual network (VNet) and a delegated subnet through which it is accessed. Export policies further define access rules for clients in the virtual network. Volumes can be accessed over NFS, SMB, or both; this blog focuses on NFS.

- A Persistent Volume (PV) is “... a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using Storage Classes.” A PersistentVolume represents a logical unit of storage that maps back to an ANF volume.

- StorageClasses define multiple tiers of storage that can be requested dynamically through Kubernetes. Kubernetes administrators define the storage classes, which are then referenced by PersistentVolumeClaims.

- A PersistentVolumeClaim (PVC) is a “... request for storage by a user”.
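
To put the service-level figures above into numbers, here is a minimal sketch. It assumes the three per-TiB figures map to service levels named Standard, Premium, and Ultra, and that the throughput ceiling scales linearly with provisioned size; the function and dictionary names are illustrative only.

```python
# Throughput per TiB of provisioned capacity, in MB/s, taken from the text above.
# Assumption: the figures correspond to these three service-level names.
THROUGHPUT_PER_TIB = {"Standard": 16, "Premium": 64, "Ultra": 128}


def throughput_limit_mb_s(service_level: str, size_tib: float) -> float:
    """Throughput ceiling, assuming it scales linearly with provisioned size."""
    return THROUGHPUT_PER_TIB[service_level] * size_tib


if __name__ == "__main__":
    # Compare what a 4 TiB volume would get at each service level.
    for level in THROUGHPUT_PER_TIB:
        limit = throughput_limit_mb_s(level, 4)
        print(f"{level:8s} 4 TiB -> {limit:.0f} MB/s")
```

Under these assumptions, a small volume at a high service level can match a larger volume at a lower one, which matters later when comparing a shared parent volume with per-PVC volumes.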

The NFS Subdir External Provisioner: What you need to know

Unless you are looking to create a handful of PVs, a solution that is automated and invoked directly through the Kubernetes plane is what you want. External provisioners such as the NFS Subdir External Provisioner provide Kubernetes and storage admins with the ability to:

- request storage using StorageClasses and PersistentVolumeClaims. Users deploy Kubernetes apps with YAML manifests and ask for storage the same way.

- configure the connection to the NFS server (server IP and root path). This is where volumes, that is, subdirectories, will be created.

So how does it work? Here’s a step-by-step guide on setting it all up. I am working on a 3-node Azure Kubernetes Service (AKS) cluster installed in South Central US.

Step 1: Basics about the NFS Subdir External Provisioner + How to deploy

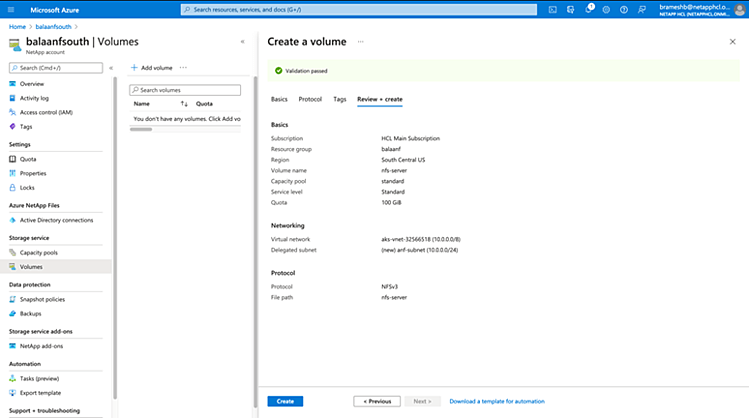

The NFS Subdir External Provisioner works by creating subdirectories within an NFS server, each of which represents a PV. It requires an “existing and already configured NFS server”. In my case, I am going to:

- Create an ANF volume. This will be the NFS server that will have a subdirectory created for every PV. Keep in mind that the properties of this volume (size, virtual network, delegated subnet, export policy) will determine how PVs are created within it.

- Deploy the provisioner. The GitHub repo provides you with all the instructions needed to do this. I am also sharing the steps below.

This isn’t the only way to go: other external provisioners exist, and you can also choose to write your own.

Deploy the external provisioner by cloning the GitHub repo and following the instructions provided. I am creating a dedicated namespace called “external-provisioner”.

mkdir external-provisioner

cd external-provisioner/

git clone https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner.git

cd nfs-subdir-external-provisioner/

kubectl create ns external-provisioner

NS=$(kubectl config get-contexts|grep -e "^\*" |awk '{print $5}')

NAMESPACE=${NS:-external-provisioner}

sed -i'' "s/namespace:.*/namespace: $NAMESPACE/g" ./deploy/rbac.yaml ./deploy/deployment.yaml

kubectl create -f deploy/rbac.yaml

Edit the deployment.yaml file to add the NFS server IP as well as the path under which PVs will be created. Before this step, let us create an ANF volume that will be used as the NFS server. You can do this using the CLI or the Azure portal.

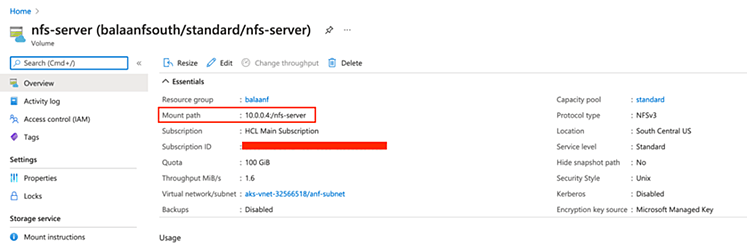

Once the ANF volume has been created, obtain the NFS server IP.

You are now ready to deploy the external provisioner. Edit the deploy/deployment.yaml file and make the following changes:

- NFS_SERVER is set to the mount IP of the ANF volume (10.0.0.4)

- NFS_PATH is set to the mount path of the ANF volume (/nfs-server)

- “nfs-client-root” is a volume that takes the same server IP and mount path (10.0.0.4 and /nfs-server)

cat deploy/deployment.yaml | grep "NFS_SERVER" -A 10

- name: NFS_SERVER

value: 10.0.0.4

- name: NFS_PATH

value: /nfs-server

volumes:

- name: nfs-client-root

nfs:

server: 10.0.0.4

path: /nfs-server

kubectl create -f deploy/deployment.yaml
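
The server IP and path appear twice in the manifest above: once as environment variables and once in the nfs-client-root volume, and the two should normally agree. A minimal sketch of a pre-deployment check, assuming both fragments have already been loaded into plain dictionaries (the function name and dictionary shapes are hypothetical and not part of the provisioner):

```python
def nfs_settings_consistent(env: dict, nfs_volume: dict) -> bool:
    """Return True when the env vars and the NFS volume point at the same export."""
    return (
        env.get("NFS_SERVER") == nfs_volume.get("server")
        and env.get("NFS_PATH") == nfs_volume.get("path")
    )


if __name__ == "__main__":
    # Values mirror the deployment.yaml excerpt shown above.
    env = {"NFS_SERVER": "10.0.0.4", "NFS_PATH": "/nfs-server"}
    nfs_volume = {"server": "10.0.0.4", "path": "/nfs-server"}
    print("consistent:", nfs_settings_consistent(env, nfs_volume))
```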

Step 2: Creating a storageClass + installing an app

The last thing to do before deploying applications and creating PVCs is to define a storageClass.

cat deploy/class.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: external-anf-storage

provisioner: k8s-sigs.io/nfs-subdir-external-provisioner

parameters:

onDelete: "retain"

archiveOnDelete: "false"

kubectl create -f deploy/class.yaml
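
With the storage class created, any PersistentVolumeClaim that names it will be served by the provisioner. The Helm chart used next creates its own claim, but a standalone claim can be sketched as follows. The claim name `demo-claim` and the 1Gi size are placeholders chosen for illustration; only the storage class name comes from this walkthrough.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str,
                 access_mode: str = "ReadWriteOnce") -> dict:
    """Build a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # "demo-claim" and "1Gi" are hypothetical values.
    manifest = pvc_manifest("demo-claim", "external-anf-storage", "1Gi")
    print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed manifest could be piped to `kubectl apply -f -` in a namespace of your choice.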

With all the pieces in place, it is time to deploy an application and create a PVC. In this example, PostgreSQL is installed using the Helm chart from the azure-marketplace repository.

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

kubectl create ns postgresql

helm repo add azure-marketplace https://marketplace.azurecr.io/helm/v1/repo

helm install postgresql --set global.storageClass=external-anf-storage azure-marketplace/postgresql -n postgresql

kubectl get pvc,pods -n postgresql

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/data-postgresql-postgresql-0 Bound pvc-500aa086-9461-411a-92aa-3c74a4efae49 8Gi RWO external-anf-storage 3m6s

NAME READY STATUS RESTARTS AGE

pod/postgresql-postgresql-0 1/1 Running 0 3m6s

And there you have it. A running instance of PostgreSQL that writes to a volume created by the external provisioner.
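
To see where that data lands on the ANF volume, the sketch below derives the backing directory for the claim shown above. It assumes the provisioner's default directory naming of namespace, PVC name, and PV name joined by hyphens, as described in the project README; check this against the version you deploy. The function name is illustrative.

```python
import posixpath


def pv_subdirectory(nfs_path: str, namespace: str, pvc_name: str, pv_name: str) -> str:
    """Directory assumed to back one PV on the NFS export (default naming)."""
    return posixpath.join(nfs_path, f"{namespace}-{pvc_name}-{pv_name}")


if __name__ == "__main__":
    # Values taken from the kubectl output above and the NFS_PATH set earlier.
    print(
        pv_subdirectory(
            "/nfs-server",
            "postgresql",
            "data-postgresql-postgresql-0",
            "pvc-500aa086-9461-411a-92aa-3c74a4efae49",
        )
    )
```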

Taking a closer look

Let’s take a deeper look at how it all works:

- It is a core requirement to create a source ANF volume. All PVs created by the external provisioner are subdirectories created within the ANF volume. If you had access to the ANF volume, mounting it and reading its contents would reveal all subdirectories created within it. This can be a security risk if access to the ANF volume isn’t controlled properly.

- The parent ANF volume must be at least as large as the combined size of the volumes it contains. It must be monitored continuously and resized before additional volumes are created by the external provisioner.

- This method lets you keep the Azure RBAC roles exposed through Kubernetes strict. Volumes are subdirectories, so creating them requires no specific Azure permissions.

- The size of the PV requested by a K8s application is not enforced by the provisioner. Consequently, a rogue application can exceed the size of the PV and fill up the entire parent ANF volume, regardless of the provisioned size.

- Storage resize/expansion operations are not supported.

- Protecting volumes individually becomes complicated. While the ANF volume can have snapshots, they cover the whole volume and cannot be applied to individual subdirectories.

- Provided the container image supports it, users can potentially fetch the server IP and mount path of the parent volume and try to look at its contents. Remember: the parent volume can be mounted by nodes in the AKS VNet, so mounting it on a bastion node in the same VNet can expose the contents of all subdirectories. To prevent this, client apps should not be able to fetch mount information for volumes created by the external provisioner.

- Each volume is a subdirectory; mount IP is the same for all volumes. This helps you keep network interfaces in the ANF subnet to a theoretical minimum of 1.

- Applications with bursty I/O patterns can take advantage of the entire throughput capacity of the parent ANF volume.
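
Since PV sizes are not enforced and the parent volume has to be sized by hand, it helps to track how much of the parent has already been promised. A minimal sketch, assuming sizes are given as whole numbers with a Ki, Mi, Gi, or Ti suffix (other Kubernetes quantity formats are not handled); the function names are illustrative.

```python
from typing import Iterable

# Binary suffixes handled by this sketch.
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '8Gi' to bytes."""
    number, suffix = quantity[:-2], quantity[-2:]
    return int(number) * _UNITS[suffix]


def headroom_bytes(parent_size: str, pvc_requests: Iterable[str]) -> int:
    """Parent capacity minus the sum of requested PV sizes (negative if oversubscribed)."""
    return to_bytes(parent_size) - sum(to_bytes(q) for q in pvc_requests)


if __name__ == "__main__":
    spare = headroom_bytes("100Gi", ["8Gi", "8Gi", "50Gi"])
    print(f"Unrequested capacity on the parent: {spare / 2**30:.0f} GiB")
```

Positive headroom is only a planning figure: because requests are not enforced, a single application can still consume more than it asked for.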

Astra Trident: Dynamic, on-demand, Kubernetes-native

Astra Trident is a dynamic, CSI-compliant storage provisioner for Kubernetes with a rich set of features. It is a storage orchestrator purpose-built for Kubernetes. With 10 CSI-compliant storage drivers, it provides a one-stop integration point for storage across NetApp platforms on-premises and in the cloud. For this discussion, we will keep it specific to ANF.

Let’s install it and see how it works!

Step 1: Install Trident + Configure ANF backends

Installing Trident is easy. You can choose from multiple options. I am using the Trident operator.

wget https://github.com/NetApp/trident/releases/download/v21.10.1/trident-installer-21.10.1.tar.gz

tar xzvf trident-installer-21.10.1.tar.gz

kubectl create ns trident

kubectl create -f trident-installer/deploy/bundle.yaml -n trident

kubectl create -f trident-installer/deploy/crds/tridentorchestrator_cr.yaml

To check that it’s installed:

kubectl get pods -n trident

NAME READY STATUS RESTARTS AGE

trident-csi-6g6mc 2/2 Running 0 61s

trident-csi-7dfb84875-kvr82 6/6 Running 0 61s

trident-csi-8s7f6 2/2 Running 0 61s

trident-csi-rjkmm 2/2 Running 0 61s

trident-operator-68fdbccdb7-gjph7 1/1 Running 0 91s

trident-installer/tridentctl version -n trident

+----------------+----------------+

| SERVER VERSION | CLIENT VERSION |

+----------------+----------------+

| 21.10.1 | 21.10.1 |

+----------------+----------------+

Trident is up and running! Next, we provide the details of the Azure subscription to utilize. Refer to the documentation for detailed instructions.

cat backend-anf.json

{

"version": 1,

"storageDriverName": "azure-netapp-files",

"backendName": "anf-standard",

"location": "southcentralus",

"subscriptionID": "xxxxxxxxxx-xxxx-xxx",

"tenantID": "xxxxxxxxxxxxxxxxxxx",

"clientID": " xxxxxxxxxxxxxxxxxxx ",

"clientSecret": " xxxxxxxxxxxxxxxxxxx ",

"serviceLevel": "Standard",

"virtualNetwork": "aks-vnet-32566518",

"subnet": "anf-subnet"

}

tridentctl create backend -f backend-anf.json -n trident

+--------------+--------------------+--------------------------------------+--------+---------+

| NAME | STORAGE DRIVER | UUID | STATE | VOLUMES |

+--------------+--------------------+--------------------------------------+--------+---------+

| anf-standard | azure-netapp-files | ca468b15-7a7a-4b13-ba03-b90cb1c5cd45 | online | 0 |

+--------------+--------------------+--------------------------------------+--------+---------+
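
The backend file contains a client secret, so you may prefer to generate it at deploy time rather than keep it on disk or in version control. A minimal sketch under that assumption: the environment variable names below are hypothetical, while the JSON keys and the non-secret values are the ones shown in backend-anf.json above.

```python
import json
import os
from typing import Mapping


def anf_backend_config(env: Mapping[str, str] = os.environ) -> dict:
    """Assemble the backend definition, reading credentials from a mapping."""
    return {
        "version": 1,
        "storageDriverName": "azure-netapp-files",
        "backendName": "anf-standard",
        "location": "southcentralus",
        # Hypothetical variable names; use whatever your pipeline provides.
        "subscriptionID": env["AZURE_SUBSCRIPTION_ID"],
        "tenantID": env["AZURE_TENANT_ID"],
        "clientID": env["AZURE_CLIENT_ID"],
        "clientSecret": env["AZURE_CLIENT_SECRET"],
        "serviceLevel": "Standard",
        "virtualNetwork": "aks-vnet-32566518",
        "subnet": "anf-subnet",
    }


if __name__ == "__main__":
    # Placeholder credentials so the sketch runs without real secrets.
    sample = {
        "AZURE_SUBSCRIPTION_ID": "<subscription-id>",
        "AZURE_TENANT_ID": "<tenant-id>",
        "AZURE_CLIENT_ID": "<client-id>",
        "AZURE_CLIENT_SECRET": "<client-secret>",
    }
    print(json.dumps(anf_backend_config(sample), indent=2))
```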

Step 2: Creating a storageClass + Installing an app

We are almost ready to start using Trident. The last step is to create a StorageClass as shown below.

cat storage-class-anf.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: trident-anf-standard

provisioner: csi.trident.netapp.io

parameters:

backendType: "azure-netapp-files"

fsType: "nfs"

kubectl create -f storage-class-anf.yaml

With that in place, let us install PostgreSQL in a different namespace using Astra Trident.

kubectl create ns postgresql-trident

helm install postgresql-trident --set global.storageClass=trident-anf-standard azure-marketplace/postgresql -n postgresql-trident

kubectl get pods,pvc -n postgresql-trident

NAME READY STATUS RESTARTS AGE

pod/postgresql-trident-postgresql-0 1/1 Running 0 58s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/data-postgresql-trident-postgresql-0 Bound pvc-4789e5ee-a5d4-406a-9ed0-051a60737790 100Gi RWO trident-anf-standard 58s

Appreciating the finer details

There are notable differences between Astra Trident and the external subdir provisioner:

- Each PVC corresponds to a unique ANF volume for Astra Trident. As PVCs are created in Kubernetes, Astra Trident responds by creating a dedicated ANF volume.

- There is no source ANF volume required. The chicken and egg problem introduced by the external NFS provisioner does not apply here.

- Each volume is a distinct entity. Users cannot peek into volumes other than their own.

- Each volume has a throughput limit determined by the service level of the capacity pool.

- Volumes can be resized through Kubernetes. A volume expansion transparently resizes both the ANF volume and the file system seen by the application.

- Snapshotting and cloning volumes are supported as Kubernetes-native features providing a robust foundation for supporting application data management functionality including local application data protection, disaster recovery, and mobility.

- Astra Trident requires an app registration to communicate with Azure and handle ANF volumes throughout their provisioning cycle.
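
The kubectl output above shows the effect of one volume per PVC: the same chart that received an 8Gi claim from the subdir provisioner received a 100Gi claim here. A minimal sketch of that arithmetic, assuming requests below the 100 GiB minimum listed in the comparison table are rounded up to it and that throughput scales linearly with size at 16 MB/s per TiB for the Standard level; the function names are illustrative.

```python
# Assumption: 100 GiB minimum volume size, per the comparison table in this post.
MIN_ANF_VOLUME_GIB = 100


def provisioned_size_gib(requested_gib: float) -> float:
    """Size of the dedicated ANF volume, assuming small requests are rounded up."""
    return max(requested_gib, MIN_ANF_VOLUME_GIB)


def volume_throughput_mb_s(requested_gib: float, mb_s_per_tib: float = 16) -> float:
    """Per-volume throughput ceiling, assuming linear scaling with size."""
    return provisioned_size_gib(requested_gib) / 1024 * mb_s_per_tib


if __name__ == "__main__":
    size = provisioned_size_gib(8)
    limit = volume_throughput_mb_s(8)
    print(f"8 GiB request -> {size:.0f} GiB volume, about {limit:.2f} MB/s")
```

This is the flip side of the shared parent volume: each Trident volume gets its own limit, but a small volume on a low service level gets a small one.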

Data management: The missing piece in the puzzle

Key to every organization is a data fabric. Applications and their associated data must be protected and easily transferable between clusters. For Kubernetes, it boils down to:

- Volume snapshots and clones.

- Application backups and migrations, whether it be scheduled or on-demand.

- Application-aware snapshots that can quiesce existing data streams.

Astra Control Service is a fully managed solution that is hosted on Azure by NetApp. It enables Kubernetes admins to:

- Automatically manage persistent storage.

- Create application-aware, on-demand snapshots and backups.

- Automate policy-driven snapshot and backup operations.

- Migrate applications and data from one Kubernetes cluster to another.

- Easily clone an application from production to staging.

Astra Trident works in conjunction with Astra Control Service to offer comprehensive data management through a unified control plane.

Which one should I choose?

Here is a table that summarizes the key differentiators.

| Factor | NFS Subdir Provisioner | Astra Trident |
| --- | --- | --- |
| Volume snapshots and clones | Not supported | Supported |
| Volume expansions | Not supported | Supported |
| Data management (app backup/DR/migration) | Not supportable | With Astra Control Service |
| Minimum volume size | 1 MiB | 100 GiB |
| Number of used IPs in a VNet | 1 | Number of volumes created |
| Number of volumes per capacity pool | N/A | 500 [adjustable upon request] |
| Throughput limit | Depends on parent volume size. Shared by constituent volumes | Depends on volume size. Independent for each volume |
| Testing and maintenance | Community supported | Open-source and supported by NetApp |

Astra Trident provides a richer set of features and is foundational for application data protection and mobility use cases for business-critical workloads when used with Astra Control Service. The NFS subdir external provisioner is a good fit for basic Kubernetes environments that don’t have strict requirements for secure multi-tenancy, data protection, and data mobility. Starting out with the NFS subdir external provisioner for ANF may seem quick and easy, but the limitations can quickly outweigh the benefits.
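
To make the resource rows of the table concrete, the sketch below estimates the Azure-side footprint for a given number of PVCs. It takes the table's figures at face value (one IP and one parent volume for the subdir provisioner; one volume and up to one IP per PVC, with 500 volumes per capacity pool, for Trident). The function name and the provisioner labels are hypothetical.

```python
import math


def footprint(pvc_count: int, provisioner: str, volumes_per_pool: int = 500) -> dict:
    """Rough ANF resource usage for a number of PVCs, per the table above."""
    if provisioner == "nfs-subdir":
        # Every PV is a subdirectory of a single parent volume.
        return {"anf_volumes": 1, "vnet_ips": 1, "capacity_pools": 1}
    if provisioner == "trident":
        # One dedicated ANF volume per PVC; pool count is a lower bound.
        return {
            "anf_volumes": pvc_count,
            "vnet_ips": pvc_count,
            "capacity_pools": math.ceil(pvc_count / volumes_per_pool),
        }
    raise ValueError(f"unknown provisioner: {provisioner}")


if __name__ == "__main__":
    for name in ("nfs-subdir", "trident"):
        print(name, footprint(1200, name))
```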

Resources

Here are some helpful links: