July 7, 2022

Topics: Cloud Volumes ONTAP, Performance | Advanced | 9 minute read

Legacy storage systems relied on magnetic tapes and hard disk drives (HDDs), with data access and exchange handled by serial protocols such as SATA and SAS. The arrival of SSDs quickly transformed the storage landscape and laid the foundation for fast non-volatile memory (NVM) capable of massive, enterprise-grade I/O. Early on, however, the serial transfer protocols used to interface with SSDs proved unsuitable for modern workloads that require high bandwidth and sub-millisecond latencies. Storage protocols have since evolved to support parallel I/O, reduced latency, and accelerated command queuing.

In this article, we compare NVMe vs NVMe-oF (NVMe over Fabrics) as modern storage access protocols that support distributed, cloud-native storage through dynamic storage disaggregation and abstraction.

Here’s what we’ll cover in this article:

- What Is NVMe (Non-Volatile Memory Express)?

- Features of NVMe Storage

- Benefits of NVMe

- What Is NVMe-oF (Non-Volatile Memory Express over Fabrics)?

- Features of NVMe-oF-Based Storage

- Benefits of NVMe-oF

- NVMe vs NVMe-oF: Comparison Snapshot

What Is NVMe (Non-Volatile Memory Express)?

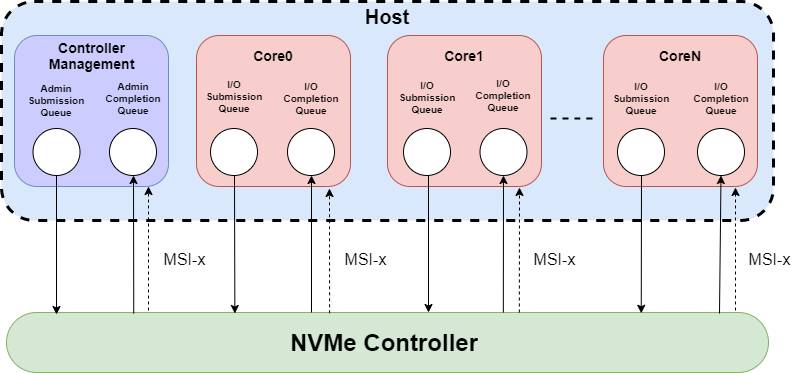

NVMe is a modern storage protocol developed to accelerate data access by performing parallel I/O operations on multicore processors, which overcomes CPU bottlenecks and enables higher throughput. The specification defines a host controller interface designed to connect flash-based devices to the CPU over the Peripheral Component Interconnect Express (PCIe) bus. Although it is most commonly used with SSDs, the NVMe specification applies to all non-volatile memory (NVM) devices that interface with the CPU through PCIe.

Components of NVMe (Source: MayaData)

The NVMe host interface uses paired submission and completion queues for I/O operations. Its command set is deliberately small: only 13 commands are required, 10 for system administration and three for NVM I/O. To speed up command queuing and manage data access requests more efficiently, NVMe also breaks large tasks into smaller I/O tasks that run in parallel.
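The submission-and-completion mechanism can be pictured with a small in-memory model. This is only a conceptual sketch: the class names, the string opcodes, and the `run_demo` helper are made up for illustration and do not correspond to any real driver API or to the actual NVMe queue entry formats.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Command:
    command_id: int
    opcode: str  # illustrative label such as "read" or "write", not a real opcode
    lba: int = 0


@dataclass
class Completion:
    command_id: int
    status: str


class QueuePair:
    """One submission queue paired with one completion queue (toy model)."""

    def __init__(self, depth):
        self.depth = depth
        self.submission = deque()
        self.completion = deque()

    def submit(self, cmd):
        # The host can enqueue only while the submission queue has room.
        if len(self.submission) >= self.depth:
            return False
        self.submission.append(cmd)
        return True

    def process(self):
        # The controller drains the submission queue and posts one
        # completion entry for every command it has handled.
        while self.submission:
            cmd = self.submission.popleft()
            self.completion.append(Completion(cmd.command_id, "success"))

    def reap(self):
        # The host collects the posted completions.
        done = list(self.completion)
        self.completion.clear()
        return done


def run_demo():
    qp = QueuePair(depth=4)
    for i, op in enumerate(["write", "read", "flush"]):
        qp.submit(Command(command_id=i, opcode=op, lba=i * 8))
    qp.process()
    return [c.command_id for c in qp.reap()]
```

In a real system each CPU core would typically own its own queue pair, which is what lets I/O proceed in parallel without cores contending for a shared queue.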

Features of NVMe Storage

NVMe is purpose-built to support efficient data transfer between a server and its storage systems. This is achieved through a number of features built into the NVMe architecture. These include:

I/O Multipath Support

The NVMe standard allows the same NVM I/O request or response to travel along multiple paths. When one path is busy or unavailable, data can be accessed over an alternate path. This is useful in modern networks because it enables high availability, load balancing, and data redundancy.
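The failover side of multipathing can be illustrated with a few lines of code. This is a conceptual sketch only: `send_io`, `healthy_path`, and `broken_path` are hypothetical names, and in practice path selection is handled by the operating system's NVMe driver rather than by application code.

```python
def send_io(paths, request):
    """Try each (name, transport) pair in order; return the first success."""
    for name, transport in paths:
        try:
            return name, transport(request)
        except ConnectionError:
            # This path is unavailable, so fall through to the next one.
            continue
    raise ConnectionError("all paths failed")


def healthy_path(request):
    # Stand-in for a working path to the namespace.
    return f"ok:{request}"


def broken_path(request):
    # Stand-in for a path whose link is down.
    raise ConnectionError("link down")
```

Calling `send_io([("path-a", broken_path), ("path-b", healthy_path)], "read lba 0")` skips the failed path and returns the response from `path-b`.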

Multi-stream Write Support

Flash memory can endure only a finite number of program/erase cycles, so SSDs have a limited lifetime. NVMe's multi-stream write feature helps place related data in adjacent locations on the SSD, which reduces write amplification. Fewer internal writes are then needed for each host write, so the drive can absorb more host I/O over its lifetime.
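Write amplification is commonly expressed as the ratio of data physically written to flash to data written by the host. The sketch below computes that ratio; the byte counts in the usage note are invented purely to show the arithmetic and are not measurements from any device.

```python
def write_amplification_factor(host_bytes_written, nand_bytes_written):
    """Ratio of physical flash writes to host writes (1.0 is the ideal)."""
    if host_bytes_written <= 0:
        raise ValueError("host_bytes_written must be positive")
    return nand_bytes_written / host_bytes_written
```

For example, if a host writes 100 GB and the drive internally writes 300 GB, the factor is 3.0. If better data placement brings the internal writes down to 120 GB for the same host workload, the factor drops to 1.2, and the same flash endures correspondingly more host writes.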

Zoned Namespaces for Aligned Data Placement

A zoned namespace divides a namespace's logical block addresses into zones, enabling logical isolation of data and applications. Data within each zone must be written sequentially but can be read randomly. Managing data through zones minimizes write amplification and storage over-provisioning, resulting in better performance, write protection for sensitive data, and lower cost.
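The "sequential write, random read" rule can be modeled with a write pointer per zone. The `Zone` class below is a hypothetical toy model for illustration, not the interface exposed by any real zoned device or library.

```python
class Zone:
    """Toy model of a zone: sequential writes, random reads."""

    def __init__(self, start_lba, capacity):
        self.start_lba = start_lba
        self.capacity = capacity
        self.write_pointer = start_lba  # next LBA that may be written
        self.blocks = {}

    def write(self, lba, data):
        if self.write_pointer >= self.start_lba + self.capacity:
            raise ValueError("zone is full")
        if lba != self.write_pointer:
            raise ValueError("writes must land at the write pointer")
        self.blocks[lba] = data
        self.write_pointer += 1

    def read(self, lba):
        # Any block that has already been written can be read, in any order.
        return self.blocks[lba]

    def reset(self):
        # Resetting a zone discards its data and rewinds the write pointer.
        self.blocks.clear()
        self.write_pointer = self.start_lba
```

Because the device always knows where the next write in a zone will land, it has far less need to relocate data internally, which is where the reduction in write amplification comes from.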

Supports Multiple Queues

While older standards such as SATA had one command queue holding up to 32 commands, NVMe is designed to handle up to 65,535 queues, each with a queue depth of up to 65,536 commands. This allows the host controller to execute a far greater number of commands simultaneously.
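To put those limits side by side, the short calculation below multiplies queue count by queue depth using the figures quoted above. The result is a theoretical ceiling from the specification limits; real devices usually expose far fewer queues.

```python
SATA_QUEUES, SATA_DEPTH = 1, 32
NVME_QUEUES, NVME_DEPTH = 65_535, 65_536


def max_outstanding_commands(queues, depth):
    """Upper bound on commands that can be queued at once."""
    return queues * depth


sata_limit = max_outstanding_commands(SATA_QUEUES, SATA_DEPTH)
nvme_limit = max_outstanding_commands(NVME_QUEUES, NVME_DEPTH)
print(f"SATA: {sata_limit:,} commands")
print(f"NVMe: {nvme_limit:,} commands")
```

The NVMe ceiling works out to roughly 4.29 billion queued commands, compared with 32 for SATA.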

Supports I/O Virtualization Techniques

NVMe uses host software to abstract physical storage from upper-layer protocols, allowing for hyper-converged, hyper-scale server-network access for enterprise-grade Storage Area Networks (SAN). NVMe also supports Single Root I/O Virtualization (SR-IOV), which lets multiple VMs on a server share a single PCIe interface.

Benefits of NVMe

Though reduced latency and enhanced IOPS are two of the most common benefits, there are some additional benefits of the NVMe storage model:

Faster Throughput

NVMe supports multiple queues with large queue depths, which reduces I/O latency and increases the number of requests that can be processed concurrently.

High I/O Consistency

By leveraging the host software’s implementation of a unified virtual environment for I/O operations, NVMe offers optimized performance across workloads without having to partition them.

Better Compatibility

The NVMe host interface directly communicates with the CPU and is compatible with all available SSD form factors, enabling seamless connectivity with storage controllers.

Offers Higher Bandwidth

NVMe SSDs on a four-lane PCIe 4.0 link have a theoretical bandwidth of up to about 7.9 GB/s, roughly double the 3.9 GB/s available on a four-lane PCIe 3.0 link.
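The 7.9 GB/s and 3.9 GB/s figures line up with the theoretical bandwidth of a four-lane PCIe 4.0 and PCIe 3.0 link respectively. A quick check, assuming 16 GT/s and 8 GT/s per lane with 128b/130b encoding (the function name is just for illustration):

```python
def pcie_bandwidth_gb_per_s(gigatransfers_per_s, lanes, encoding=128 / 130):
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    # Each transfer carries one bit per lane; the encoding factor removes
    # the line-code overhead, and dividing by 8 converts bits to bytes.
    return gigatransfers_per_s * lanes * encoding / 8


gen3_x4 = pcie_bandwidth_gb_per_s(8, lanes=4)
gen4_x4 = pcie_bandwidth_gb_per_s(16, lanes=4)
print(f"PCIe 3.0 x4: {gen3_x4:.1f} GB/s")
print(f"PCIe 4.0 x4: {gen4_x4:.1f} GB/s")
```

These are link ceilings; the throughput a given SSD actually sustains depends on the drive and workload and will be lower.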

Compatible with All Major OSs Regardless of Form Factor

Instead of relying on custom device drivers, the NVMe specification leverages out-of-the-box support from all modern hypervisors and operating systems. A standardized host interface lets SSDs from multiple vendors, in multiple form factors, work with a single device driver. Form factors supported by the NVMe specification include M.2, U.2, U.3, and PCIe add-in cards.

Supports Tunneling for Data Privacy

Organizations can extend NVMe over TCP on a Time-Sensitive Network (TSN) to build a PCIe tunnel that keeps communication between NVMe SSDs and hosts private. Security specifications from industry bodies such as the Trusted Computing Group (TCG) help administer robust security controls, including purge-level erase, simple access controls, and protection for data at rest.

What Is NVMe-oF (Non-Volatile Memory Express over Fabrics)?

NVMe-oF is an augmented storage protocol developed to extend the performance benefits of NVMe over Fibre Channel and Ethernet networks. The protocol carries NVMe commands across a network fabric, connecting hosts to arrays of storage controllers. These fabrics are built to enable faster and more efficient communication between server and storage while reducing CPU utilization on host servers. There are a few different types of NVMe-oF transport:

NVMe over TCP/IP

NVMe over TCP/IP uses the Transmission Control Protocol (TCP) to carry NVMe commands and data over standard Ethernet networks. Considered one of the most promising next-generation protocols for mainstream enterprise storage, NVMe over TCP doesn’t rely on specialized hardware, which makes it the cheapest and most flexible end-to-end network storage option within the NVMe-oF family.
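On the wire, NVMe over TCP frames each message as a protocol data unit (PDU) that starts with a small common header. The sketch below packs and parses such a header. The field layout (type, flags, header length, data offset, total length) and the command capsule type value are written from memory of the NVMe/TCP transport specification and should be treated as assumptions to verify; digests and padding are ignored, and the helper names are hypothetical.

```python
import struct

# Assumed common header layout: type, flags, hlen, pdo (1 byte each),
# then plen as a 32-bit little-endian integer.
COMMON_HEADER = struct.Struct("<BBBBI")
PDU_TYPE_COMMAND_CAPSULE = 0x04  # assumed value, check against the spec
SQE_SIZE = 64                    # size of an NVMe submission queue entry


def build_command_pdu(sqe, data=b""):
    """Wrap a 64-byte submission queue entry (plus optional data) in a PDU."""
    if len(sqe) != SQE_SIZE:
        raise ValueError("submission queue entry must be 64 bytes")
    hlen = COMMON_HEADER.size + SQE_SIZE
    pdo = hlen if data else 0        # offset of the data within the PDU
    plen = hlen + len(data)          # total PDU length
    header = COMMON_HEADER.pack(PDU_TYPE_COMMAND_CAPSULE, 0, hlen, pdo, plen)
    return header + sqe + data


def parse_common_header(pdu):
    """Decode the first 8 bytes of a PDU into named fields."""
    pdu_type, flags, hlen, pdo, plen = COMMON_HEADER.unpack_from(pdu)
    return {"type": pdu_type, "flags": flags, "hlen": hlen, "pdo": pdo, "plen": plen}
```

Because the framing is plain bytes on an ordinary TCP connection, it can run on any Ethernet network without specialized adapters, which is the source of the cost and flexibility advantage.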

NVMe over Fibre Channel (FC-NVMe)

FC-NVMe is a robust protocol that encapsulates NVMe I/O commands inside Fibre Channel (FC) frames to transfer data between servers and storage arrays. FC-NVMe is considered more efficient than legacy storage protocols and is compatible with the standard FC protocol, but it is also known for sub-optimal performance when interpreting and translating FC frames into NVMe commands.

NVMe over RDMA

NVMe over RDMA transfers messages between servers and storage arrays using Remote Direct Memory Access (RDMA). RDMA lets two hosts exchange data directly between their main memories without involving the operating system, processor, or cache of either machine.

Features of NVMe-oF-Based Storage

Being transport-agnostic, NVMe-oF brings the features of NVMe to a number of network protocols, improving the performance of networked storage. Some of these features include:

Supports Multiple Transport Protocols

NVMe-oF enables enterprises to build Storage Area Networks using a wide range of transport protocols. These include RDMA over Converged Ethernet (RoCE), Fibre Channel, and TCP, among others.

Uses a Scatter-Gather List for Data Transfer

NVMe-oF describes the memory involved in a data transfer using a Scatter-Gather List (SGL). The SGL is a data structure made up of descriptors, each of which defines a contiguous region of memory that acts as the source or target of the transfer.
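A scatter-gather list can be sketched as a list of (address, length) descriptors over a block of memory. The names `SglDescriptor`, `gather`, and `scatter` are illustrative only, and the "address" here is simply an offset into a Python byte buffer rather than a real physical address or the descriptor format defined by the specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SglDescriptor:
    address: int  # offset of a contiguous region within the buffer
    length: int   # size of that region in bytes


def gather(memory, sgl):
    """Collect the regions named by the SGL into one contiguous payload."""
    return b"".join(bytes(memory[d.address:d.address + d.length]) for d in sgl)


def scatter(memory, sgl, payload):
    """Spread a contiguous payload across the regions named by the SGL."""
    if sum(d.length for d in sgl) != len(payload):
        raise ValueError("payload size does not match the SGL")
    offset = 0
    for d in sgl:
        memory[d.address:d.address + d.length] = payload[offset:offset + d.length]
        offset += d.length
```

The benefit is that data does not have to sit in one contiguous buffer: a single transfer can read from, or land in, several separate regions without an intermediate copy.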

Credit-based Flow Control

The sender waits for credits from the recipient, which indicate that buffers are available, before transmitting a message. This creates a self-throttling connection that guarantees delivery at the physical layer without having to drop frames due to congestion.
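The credit mechanism can be modeled in a few lines: each credit stands for one free receive buffer, sending consumes a credit, and the receiver returns a credit whenever it frees a buffer. `CreditedLink` is a hypothetical toy model, not the flow-control implementation of any particular fabric.

```python
class CreditedLink:
    """Toy model of credit-based flow control between a sender and receiver."""

    def __init__(self, receiver_buffers):
        self.credits = receiver_buffers  # one credit per free receive buffer
        self.in_flight = []              # frames occupying receive buffers
        self.delivered = []              # frames consumed by the receiver

    def send(self, frame):
        # Without a credit the sender must wait; nothing is ever dropped.
        if self.credits == 0:
            return False
        self.credits -= 1
        self.in_flight.append(frame)
        return True

    def receiver_drain(self, count=1):
        # Consuming a frame frees its buffer and returns a credit.
        for _ in range(min(count, len(self.in_flight))):
            self.delivered.append(self.in_flight.pop(0))
            self.credits += 1
```

With two buffers, a third `send` returns `False` until the receiver drains at least one frame, which is the self-throttling behavior described above.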

Scales up to Thousands of Devices

The fabric can scale up to tens of thousands of devices or even more depending on the resources available and workload requirements.

Low Network Latency

NVMe-oF uses low-latency fabrics for increased performance. Ideally, the fabric should add no more than about 10 microseconds of end-to-end latency.

Benefits of NVMe-oF

As an extension of the NVMe storage protocol, NVMe-oF offers several benefits over and above those of NVMe, such as:

Increased Overall Storage Performance

The fabric supports the parallel exchange of data between multiple hosts and storage arrays, further accelerating I/O request processing for optimum performance.

Reduces the Size of Server-Side OS Storage Stacks

NVMe-oF’s improved I/O capabilities allow multiple machines to share a storage array without requiring a different storage interface for each machine.

Enables Different Implementation Types for Different Use Cases

Whether the goal is higher throughput or support for larger workloads, storage administrators can configure customized NVMe-oF networks using transports that meet specific resource requirements.

Supports Additional Parallel Requests

Larger network fabrics build upon the innate parallelism of NVMe I/O queues and can be used to break down requests further for streamlined data access and exchange.

NVMe vs NVMe-oF: Comparison Snapshot

As NVMe-oF was built to extend NVMe, both protocols share approximately 90% of their features. There are, however, fundamental differences in their use-cases and applications. Some key differences include:

| Feature | NVMe | NVMe-oF |
| --- | --- | --- |
| Complexity | Requires simple installation steps by directly connecting the SSD to the CPU. | Installation is more complex and typically varies depending on the size of the storage array and the number of hosts. |
| Setup cost | Moderately cheaper, as the setup requires only the purchase of an SSD in a suitable form factor. | Involves setting up a channel for the network fabric, with moderate-to-high costs depending on the fabric size. |
| Transport protocol | Communicates with the compute element through the PCIe interface. | Supports multiple transport protocols such as Fibre Channel, TCP over Ethernet, and RDMA (InfiniBand, RoCE). |
| Parallelism | Supports up to 64K queues. | Supports up to 64K queues for each host in the fabric. |
| Use cases | High-speed SSD data access. | High-speed Storage Area Network access for high-performance, low-latency enterprise workloads. |

Conclusion

Flash-based memory devices enable high-speed data exchange between CPU and storage. The large-scale adoption of SSDs drove the creation of storage protocols that match their low-latency, high-throughput capabilities. When comparing NVMe vs NVMe-oF, NVMe achieves this by connecting SSD storage to the CPU directly through the PCIe interface, while NVMe-oF extends the same command set across a network fabric. Both protocols offer several advantages over legacy protocols, including wider compatibility, larger bandwidth, and enhanced security management.

NetApp’s Cloud Volumes ONTAP leverages NVMe intelligent caching to help reduce storage latency on prominent public cloud services, including AWS, GCP, and Azure. ONTAP integrates with your private or public cloud while offering a seamless upgrade path to the NVMe-over-TCP storage protocol. NVMe-over-TCP will be supported in Cloud Volumes ONTAP and in FSx for ONTAP in future releases.