

March 17, 2020

Topics: Azure NetApp Files, Advanced (5-minute read)

Semiconductor (chip) design firms care most about time to market (TTM), and TTM often hinges on how long workloads such as chip design validation and pre-foundry work like tape-out take to complete. As a result, the more bandwidth available to the server farm, the better. Azure NetApp Files (ANF) is an ideal solution for meeting both the high-bandwidth and low-latency storage needs of this demanding industry. Read on to discover why you should consider this HPC on Azure solution.

More bandwidth means more jobs can run in parallel, more checks can be performed simultaneously, and so on, all without adding time. ANF's quality of service offering allows scaling out to 200, 500, or 1,000 concurrent jobs, as various Azure customers have done, without affecting run time.

The sections below describe the options available to the semiconductor industry when running its environments in Azure on top of Azure NetApp Files.


All test scenarios documented in this paper are the result of a standard industry benchmark we ran for electronic design automation (EDA) on Azure NetApp Files.

Configurations

| Scenario | Volumes | Clients (SLES 15, D16s_v3) |
|---|---|---|
| One | 1 | 1 |
| Two | 6 | 24 |
| Three | 12 | 24 |

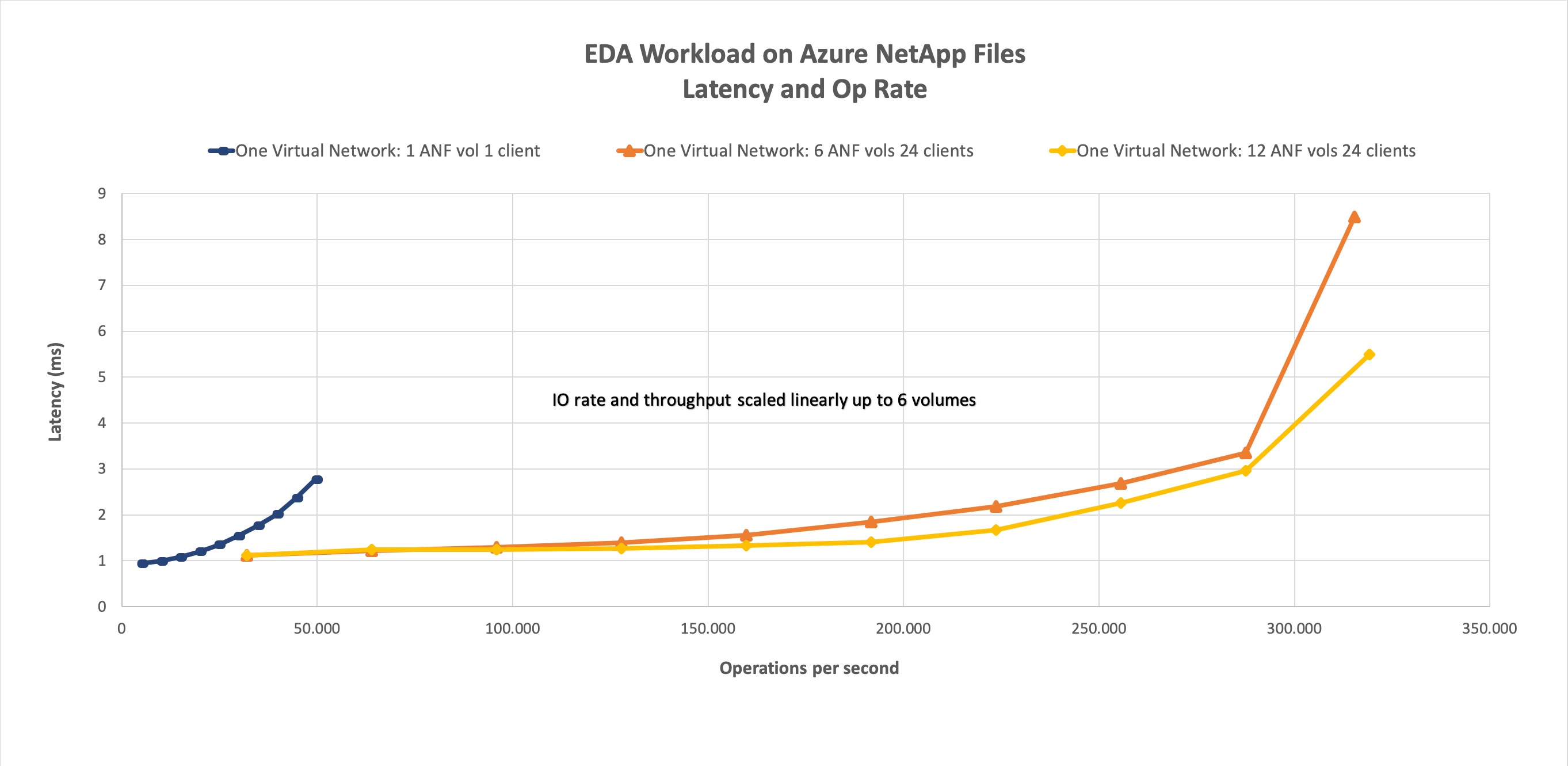

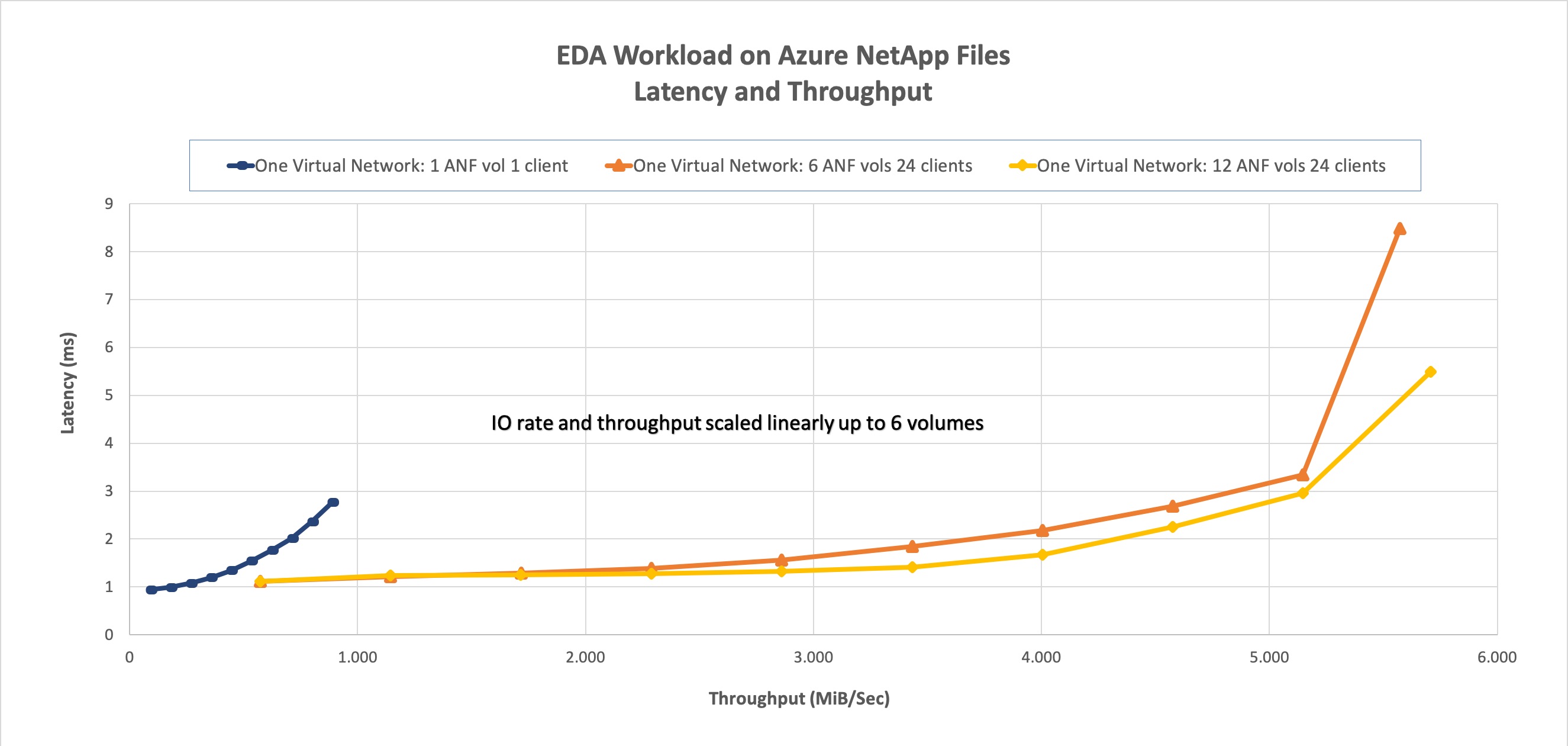

Scenario One answers the most basic question: how far can a single volume be driven? We ran Scenarios Two and Three to evaluate the limits of a single Azure NetApp Files endpoint, looking for potential benefits in terms of upper I/O limits and/or latency.

Scenario Results

| Scenario | I/O rate at 2 ms latency (ops/s) | I/O rate at the edge (ops/s) | Throughput at 2 ms latency | Throughput at the edge |
|---|---|---|---|---|
| 1 volume | 39,601 | 49,502 | 692 MiB/s | 866 MiB/s |
| 6 volumes | 255,613 | 317,000 | 4,577 MiB/s | 5,568 MiB/s |
| 12 volumes | 256,612 | 319,196 | 4,577 MiB/s | 5,709 MiB/s |

Scenario Results Explained

- Single volume: This represents the most basic application configuration; as such, it is the baseline scenario for follow-on test scenarios.

- 6 volumes: This scenario demonstrates a roughly linear increase, at about six times (600% of) the single-volume workload. In most cases, all volumes within a single virtual network are accessed over a single IP address, and that was the case here.

- 12 volumes: This scenario demonstrates a general decrease in latency compared with the 6-volume scenario, but without a corresponding increase in achievable throughput.
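The scaling described above can be recomputed directly from the Scenario Results table. The sketch below is a small illustrative Python helper (the names `RESULTS` and `scaling_vs_baseline` are hypothetical, not part of any tool) that divides each metric by the single-volume baseline.

```python
"""Recompute scaling ratios from the Scenario Results table (illustrative helper)."""

# Figures copied from the Scenario Results table: I/O rates in ops/s,
# throughput in MiB/s, measured at 2 ms latency and at the edge.
RESULTS = {
    "1 volume": {"io_2ms": 39_601, "io_edge": 49_502, "mibs_2ms": 692, "mibs_edge": 866},
    "6 volumes": {"io_2ms": 255_613, "io_edge": 317_000, "mibs_2ms": 4_577, "mibs_edge": 5_568},
    "12 volumes": {"io_2ms": 256_612, "io_edge": 319_196, "mibs_2ms": 4_577, "mibs_edge": 5_709},
}


def scaling_vs_baseline(results: dict, baseline: str = "1 volume") -> dict:
    """Return each scenario's metrics divided by the baseline scenario's metrics."""
    base = results[baseline]
    return {
        scenario: {metric: value / base[metric] for metric, value in metrics.items()}
        for scenario, metrics in results.items()
    }


if __name__ == "__main__":
    for scenario, ratios in scaling_vs_baseline(RESULTS).items():
        summary = ", ".join(f"{metric}={ratio:.2f}x" for metric, ratio in ratios.items())
        print(f"{scenario:>10}: {summary}")
```

With the published figures, the 6-volume scenario comes out at roughly 6.4 to 6.6 times the baseline on every metric, while the 12-volume scenario lands within a few percent of the 6-volume numbers.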

Pictures are Worth a Thousand Words:

The Layout of the Tests

| Test scenario | Total number of directories | Total number of files |
|---|---|---|
| Single volume | 88,000 | 880,000 |
| 6 volumes | 568,000 | 5,680,000 |
| 12 volumes | 568,000 | 5,680,000 |

The complete workload is a mixture of concurrently running functional and physical phases and, as such, overall represents a typical flow from one set of EDA tools to another.

The functional phase consists of initial specifications and logical design. The physical phase converts the logical design into a physical chip. During the sign-off and tape-out phases, final checks are completed and the design is delivered to a foundry for manufacturing. Each of these phases presents differently when it comes to storage, as described below.

The functional phases are metadata intensive (think file stat and access calls), though they also include a mixture of sequential and random read and write I/O. Although metadata operations carry effectively no data payload, the read and write operations range from less than 1K to 16K; the majority of reads are between 4K and 16K, and most writes are 4K or less. The physical phases, on the other hand, consist entirely of sequential read and write operations using a mixture of 32K and 64K op sizes.
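One way to make this I/O profile concrete, for example when tagging operations in an NFS trace or configuring a synthetic load generator, is to encode the size rules from the paragraph above as a simple rule-based classifier. This is a hypothetical Python sketch: the function name, the operation labels, and the treatment of 32K/64K as exact physical-phase sizes are assumptions drawn only from the description here, not from the benchmark itself. Because the functional phases also issue some sequential I/O, the sketch keys on operation type and size rather than access pattern.

```python
"""Hypothetical rule-based tagging of storage operations by EDA phase."""

KIB = 1024

# Metadata calls named in the text (assumed labels, e.g. from a trace).
METADATA_OPS = {"stat", "access"}

# Functional phases: reads and writes from under 1K up to 16K.
FUNCTIONAL_MAX_SIZE = 16 * KIB

# Physical phases: sequential reads and writes of 32K and 64K.
PHYSICAL_SIZES = {32 * KIB, 64 * KIB}


def classify_op(op: str, size: int) -> str:
    """Return the phase whose described I/O profile matches this operation."""
    if op in METADATA_OPS:
        return "functional"  # metadata-intensive phase, no data payload
    if op in {"read", "write"}:
        if size in PHYSICAL_SIZES:
            return "physical"  # 32K/64K sequential transfers
        if 0 < size <= FUNCTIONAL_MAX_SIZE:
            return "functional"  # small random or sequential I/O
    return "unclassified"  # outside the profile described in the text
```

For example, `classify_op("read", 64 * KIB)` returns `"physical"` and `classify_op("write", 2 * KIB)` returns `"functional"`.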

Most of the throughput shown in the graphs above comes from the sequential physical phases of the workload, whereas most of the I/O operations come from the small random and metadata-intensive functional phases; both run in parallel.
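This split can be sanity-checked with simple arithmetic: dividing throughput by I/O rate gives the average amount of data moved per operation. The Python sketch below applies that to each row of the Scenario Results table. The helper name is illustrative, and it assumes that MiB/s means 2^20 bytes per second and that the I/O rate counts every operation, metadata calls included.

```python
"""Average data moved per operation, derived from the Scenario Results table."""

KIB_PER_MIB = 1024  # assumes MiB/s in the table means 2**20 bytes per second


def avg_kib_per_op(throughput_mibs: float, io_rate: float) -> float:
    """Throughput divided by I/O rate, in KiB per operation.

    Metadata calls count toward the I/O rate but move essentially no data,
    so they pull this average down.
    """
    return throughput_mibs * KIB_PER_MIB / io_rate


# (scenario, measurement point) -> (throughput in MiB/s, I/O rate in ops/s)
MEASUREMENTS = {
    ("1 volume", "2 ms"): (692, 39_601),
    ("1 volume", "edge"): (866, 49_502),
    ("6 volumes", "2 ms"): (4_577, 255_613),
    ("6 volumes", "edge"): (5_568, 317_000),
    ("12 volumes", "2 ms"): (4_577, 256_612),
    ("12 volumes", "edge"): (5_709, 319_196),
}

if __name__ == "__main__":
    for (scenario, point), (mibs, ops) in MEASUREMENTS.items():
        print(f"{scenario:>10} @ {point:>4}: {avg_kib_per_op(mibs, ops):.1f} KiB/op")
```

Every row works out to roughly 18 KiB per operation. That sits between the functional phases' sub-16K operations (plus data-free metadata calls) and the physical phases' 32K and 64K transfers, which is what a blend of the two concurrent phases would be expected to produce; the average alone cannot say how much each phase contributes.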

In conclusion, pair Azure Compute with Azure NetApp Files for EDA workloads to get bandwidth delivered at scale. After all, bandwidth drives business success.

Learn More About Running EDA on ANF

Read the eBook on HPC workloads, especially EDA, or get started running EDA on Azure NetApp Files today.

More HPC on Azure content:

- HPC on Azure: Meet the Critical Needs of your Environment

- Why Azure NetApp Files is a Game Changer for the Energy Sector

- Cloud Architects: Supercharge Your HPC Workloads in Azure

- How Azure NetApp Files Supports HPC Workloads in Azure

- How to Solve Challenges with Azure HPC Environments

- Subscribe to Azure NetApp Files today