November 18, 2019

Topics: Cloud Volumes ONTAP, Data Migration, Google Cloud | Advanced | 5 minute read

Whether you are migrating from on-premises to the cloud or between cloud providers, the most tedious part of the process is almost always the same: transferring data from one storage repository to another. This holds for any AWS, Azure, or Google Cloud migration.

Depending on the volume of data, this transfer can take anywhere from several hours to several days. The traditional approach involves deploying servers, establishing connectivity, and writing custom scripts. However, if you want to transfer data to Google Cloud Storage from a server or from another cloud repository such as Amazon S3 or Azure Blob storage, there is a simpler, managed way to do it.

In this post we’ll take a close look at how you can use the Google Cloud Storage Transfer Service to move data from any server, whether on-premises or with another cloud provider, to Google Cloud. This can be a big help to users who have decided to migrate to Google Cloud, or who use Cloud Volumes ONTAP on Google Cloud.

What Is the Google Cloud Storage Transfer Service?

Google Cloud offers several data transfer solutions and services. One of them is the Google Cloud Storage Transfer Service, a built-in feature of Google Cloud Storage, GCP’s object storage service. It lets you create and run transfer operations into Cloud Storage in a fully managed, serverless way, without custom code or infrastructure, and it supports both one-time and recurring scheduled transfers.

This service can make migrating to Google Cloud Storage faster and reduce operational overhead. Because it supports multiple data sources, including Amazon S3, Azure Blob storage, HTTP/HTTPS locations, and other Google Cloud Storage buckets, it offers a lot of flexibility. For users evaluating AWS, Azure, and Google Cloud hands-on, it is a convenient way to bring data into Google Cloud.

How to Transfer Data to Google Cloud Storage Using the Cloud Storage Transfer Service

To get started with the Google Cloud Storage Transfer Service, we need a couple of things in place. Besides a Linux server that holds the files we want to transfer, we need to make those files available over HTTP/HTTPS so that Storage Transfer Service can fetch them.

We’ll also need a Google Cloud Storage bucket to serve as the destination for the files. If you don’t have one yet, you can learn how to create a GCP bucket here.

Make the Source Files Available via HTTP/HTTPS

The first step is to make the source files available over the internet.

1. On your Linux server, make sure curl, OpenSSL, and Python 3 are installed.

2. Create a file named generate.sh in the directory where your data is located. The script generates a file named index.tsv that lists the files to be transferred, along with each file’s size and MD5 hash. Your generate.sh file should contain the following:

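```bash
#!/bin/bash
# Generate index.tsv in the URL-list format used by Storage Transfer Service.
# Assumes GNU coreutils (stat -c) and file names that are URL-safe.
set -euo pipefail

# Public IP address of this server, as seen from the internet
IP=$(curl -s http://ipecho.net/plain)

{
  echo "TsvHttpData-1.0"
  for f in *; do
    # Skip directories, this script and the index file itself
    [ -f "$f" ] || continue
    case "$f" in generate.sh|index.tsv) continue ;; esac
    size=$(stat -c%s "$f")
    # Base64-encoded binary MD5 digest of the file
    md5=$(openssl md5 -binary "$f" | openssl enc -base64)
    printf 'http://%s/%s\t%s\t%s\n' "$IP" "$f" "$size" "$md5"
  done
} > index.tsv

# Output: the URL to give to the transfer job, then the index contents
echo "http://$IP/index.tsv"
echo "-----"
cat index.tsv
```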

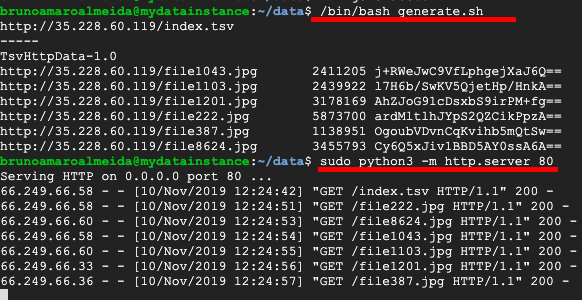

3. Customize the script if needed. When ready, run it with /bin/bash generate.sh. It creates index.tsv and prints the URL of the index file followed by its contents.

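4. Start a web server in the directory where your files are located with the command sudo python3 -m http.server 80. Binding to port 80 requires root privileges, and the server’s firewall must allow inbound HTTP traffic on port 80 so that Storage Transfer Service can reach the files.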

Generate the TSV index file and start a web server in the current directory.
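Before pointing a transfer job at index.tsv, it can help to sanity-check the file. The sketch below checks that the first line is the TsvHttpData-1.0 header and that every other line has the three tab-separated fields generate.sh writes: an http:// or https:// URL, a size in bytes, and a base64-encoded MD5 (24 characters for a 16-byte digest). The function name validate_index is hypothetical, and the checks reflect the output of generate.sh rather than the complete TSV specification, which may treat some fields as optional.

```bash
#!/bin/bash
# Hypothetical helper: sanity-check an index.tsv produced by generate.sh.
# Prints one message per problem; returns non-zero if any problem is found.
validate_index() {
  local file="$1" line_no=0 errors=0 url size md5 extra
  while IFS=$'\t' read -r url size md5 extra || [ -n "$url" ]; do
    line_no=$((line_no + 1))
    if [ "$line_no" -eq 1 ]; then
      # The first line must be the format header
      if [ "$url" != "TsvHttpData-1.0" ]; then
        echo "line 1: expected TsvHttpData-1.0 header"; errors=$((errors + 1))
      fi
      continue
    fi
    # Ignore blank lines
    if [ -z "$url" ]; then continue; fi
    case "$url" in
      http://*|https://*) ;;
      *) echo "line $line_no: URL must start with http:// or https://"; errors=$((errors + 1)) ;;
    esac
    if ! [[ "$size" =~ ^[0-9]+$ ]]; then
      echo "line $line_no: size is not a whole number of bytes"; errors=$((errors + 1))
    fi
    # A 16-byte MD5 digest is 22 base64 characters followed by "=="
    if ! [[ "$md5" =~ ^[A-Za-z0-9+/]{22}==$ ]]; then
      echo "line $line_no: MD5 is not a base64-encoded 16-byte digest"; errors=$((errors + 1))
    fi
    if [ -n "$extra" ]; then
      echo "line $line_no: unexpected extra fields"; errors=$((errors + 1))
    fi
  done < "$file"
  [ "$errors" -eq 0 ]
}
```

After sourcing the function, validate_index index.tsv && echo OK prints OK only when every line passes.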

Create a New Google Storage Transfer Operation

Now we need to transfer the data to Google Cloud Storage.

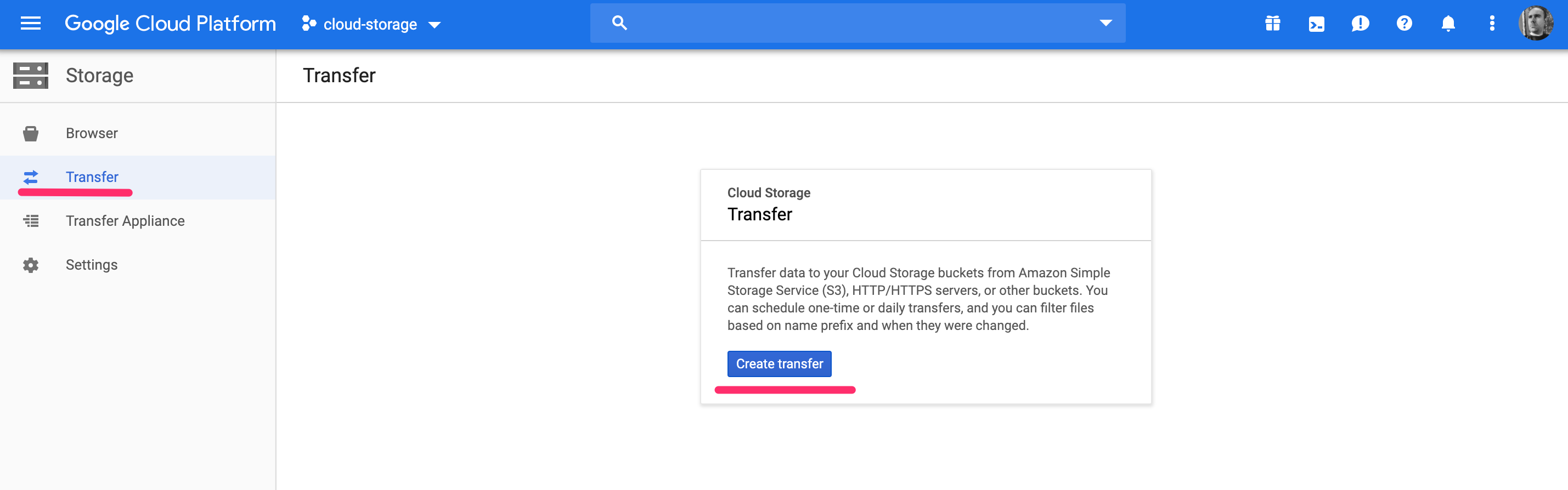

1. Start by navigating to the Storage dashboard in the Google Cloud Platform Console. In the left-hand panel, select the option “Transfer,” and initiate the process by clicking the “Create transfer” button.

The first Transfer screen in Google Cloud Platform Storage.

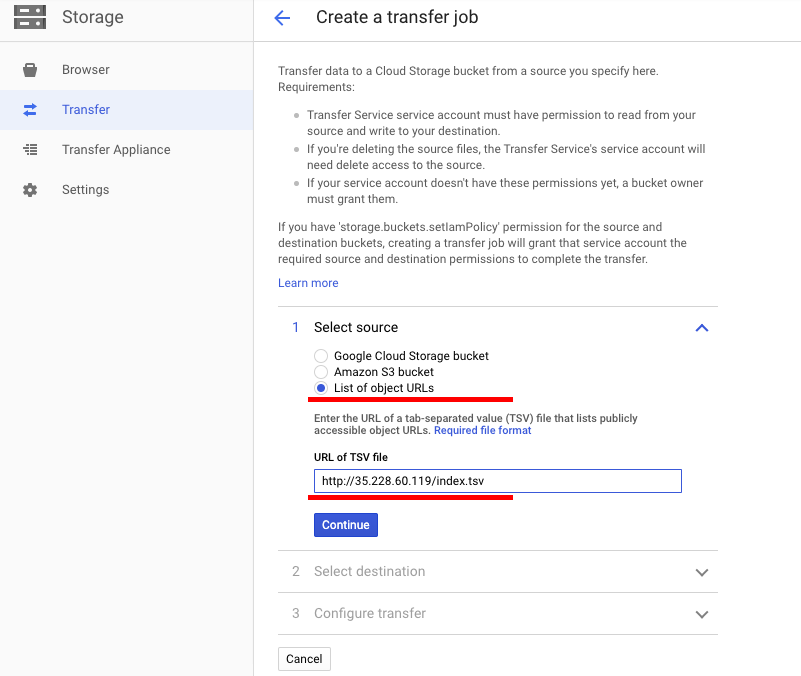

2. Now select the source of our data. In this case, we will choose “List of object URLs.”

Enter the URL of the TSV file printed by generate.sh. In our example, that is http://35.228.60.119/index.tsv. Click the “Continue” button to move forward.

The initial step of creating a transfer is to define the data source.

3. With the source defined and saved, select the Google Cloud Storage bucket to use as the destination, then set the schedule for the transfer operation. The schedule can be either a one-time or a recurring operation. Keep the default setting of “Run now” and click “Save” to create the transfer job and start the operation.

Selecting the destination and schedule for the transfer.

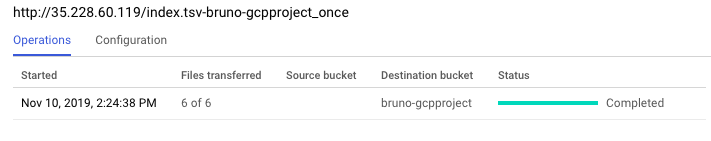

4. Because the job is set to “Run now,” the transfer operation starts immediately. The screen shows all transfer operations and their current status. If all goes well, the status changes to “Completed” when the transfer finishes.

Here you can see the progress status of the transfer operation.
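If an operation reports failed objects, one thing worth checking is whether any files changed after index.tsv was generated: Storage Transfer Service can use the size and MD5 listed in the index to verify each downloaded object, so a stale entry can cause that object to fail. The sketch below, built around a hypothetical check_index function, recomputes each file’s size and MD5 the same way generate.sh does and reports entries that are missing or changed. It assumes the flat URL layout generate.sh produces (http://IP/file name), so the local file name is taken from the last path segment of each URL.

```bash
#!/bin/bash
# Hypothetical helper: compare index.tsv entries with the files on disk.
# Usage: check_index INDEX [DIR]; returns non-zero if any entry is stale.
check_index() {
  local index="$1" dir="${2:-.}" stale=0 url size md5 name path
  # tail -n +2 skips the TsvHttpData-1.0 header line
  while IFS=$'\t' read -r url size md5; do
    [ -n "$url" ] || continue
    name="${url##*/}"   # last path segment of the URL
    path="$dir/$name"
    if [ ! -f "$path" ]; then
      echo "missing: $name"; stale=$((stale + 1)); continue
    fi
    # Recompute size and base64 MD5 exactly as generate.sh does
    if [ "$(stat -c%s "$path")" != "$size" ] ||
       [ "$(openssl md5 -binary "$path" | openssl enc -base64)" != "$md5" ]; then
      echo "changed: $name"; stale=$((stale + 1))
    fi
  done < <(tail -n +2 "$index")
  [ "$stale" -eq 0 ]
}
```

Running check_index index.tsv in the data directory prints nothing when the index is current; if it reports changed files, rerunning generate.sh before starting a new transfer keeps the index and the served files in sync.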

Verifying the Transfer to Google Cloud Storage

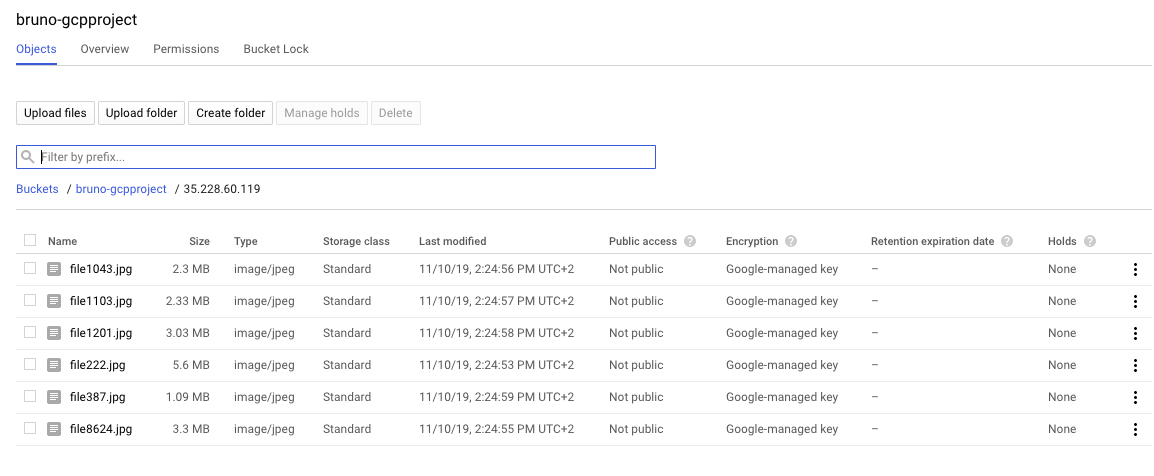

Once the transfer has completed, open the Bucket Browser from the left-hand panel menu to see the contents of the bucket. All the files listed in index.tsv should now be visible in your Cloud Storage bucket.

Under the bucket details we can view and verify the transferred files.
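To double-check from the command line instead of the Bucket Browser, you can compare the file names in index.tsv with a listing of the bucket, for example one saved with gsutil ls "gs://BUCKET/**" > listing.txt, where BUCKET is a placeholder for your bucket name. The hypothetical find_missing function below prints every indexed file that does not appear in the listing. It matches on base names only, an assumption that fits the flat directory generate.sh indexes and avoids depending on the exact prefix the transfer adds to object names.

```bash
#!/bin/bash
# Hypothetical helper: list files from index.tsv that are absent from a
# bucket listing (one gs:// URL per line). Matching is by base name only.
find_missing() {
  local index="$1" listing="$2"
  # Use the C locale so sort and comm agree on ordering
  LC_ALL=C comm -23 \
    <(tail -n +2 "$index" | cut -f1 | sed 's#.*/##' | grep -v '^$' | LC_ALL=C sort -u) \
    <(sed 's#.*/##' "$listing" | grep -v '^$' | LC_ALL=C sort -u)
}
```

An empty result means every indexed file has a matching object name in the bucket; it does not compare file contents.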

Conclusion

Using the Google Cloud Storage Transfer Service to automate data transfers from any server, whether on-premises or in another public cloud such as Azure Blob storage or Amazon S3, to a Google Cloud Storage bucket is a useful, time-saving capability. As demonstrated, it works out of the box without custom infrastructure, which significantly reduces operational overhead and can accelerate your Google Cloud migration.

For more on using Google Cloud, check out our articles on how to use the gsutil tool, how to switch between Google Cloud Storage classes, and the different storage offerings on Google Cloud.

Since you are learning about Google’s storage services and built-in capabilities, you might also be interested in premium services from NetApp that can be an integral part of a Google Cloud deployment. To move data from any repository to the cloud, there is the Cloud Sync data migration service, and for a complete data management platform there is Cloud Volumes ONTAP. The storage management capabilities widely used in Cloud Volumes ONTAP for AWS and Azure, such as storage efficiency, data protection, and cloning, are now available for Google Cloud.