

September 16, 2020

Topics: Cloud Volumes ONTAP, Azure, High Availability, Advanced | 7 minute read

In large-scale Azure cloud deployments, selecting the right Azure regions and availability zones plays an important role in managing latency. Both play key parts in Azure HA (high availability).

Each Azure region that supports availability zones has at least three interconnected zones, with each zone consisting of one or more data centers.

Deployments within Azure regions and availability zones help in managing the latency between components. However, as the Azure footprint within a region grows, the number of data centers in use increases. Your application components could be placed in any one of these data centers spread across the region. This placement spreads out your deployment, increasing the physical distance between your application components and, with it, your network latency. That higher latency may have a negative impact on your application’s performance. To address this performance concern for latency-sensitive, business-critical use cases, Azure offers a resource type called proximity placement groups.

This blog will explore the features of Azure proximity placement groups and how customers can leverage the solution in NetApp Cloud Volumes ONTAP to address Azure latency concerns.

What Is a Proximity Placement Group?

In basic terms, a proximity placement group is a logical grouping of Azure resources within the same Azure data center in order to reduce latency.

The cloud abstracts the process of deploying virtual machines and underlying hardware from the customer. However, the physical placement of application components still plays an important role in shaping the user experience. For example, consider an application with VMs deployed in the same region. Since the data centers that host VMs are automatically selected by Azure, those VMs could wind up being spread out across multiple, physically distant data centers. Such deployments will experience more latency when compared to VMs that are placed within the same data center. This latency could just be a few milliseconds, but for latency-sensitive workloads, even these few milliseconds can prove to be detrimental.

Azure proximity placement groups help in achieving co-location of resources in the IaaS deployment model. When resources are deployed in proximity placement groups, they are located inside the same Azure data center, thereby reducing the network latency between application components.

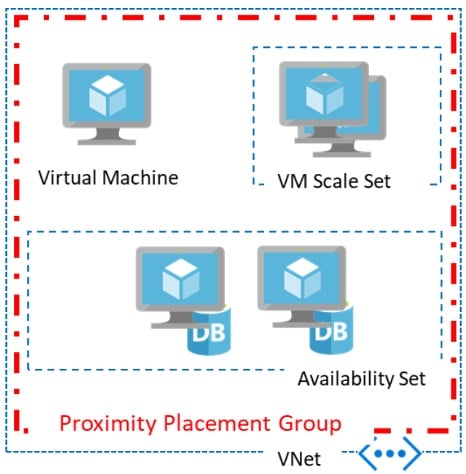

A proximity placement group is a logical construct for physical proximity of your IaaS resources, as depicted in the picture below:

Image courtesy: Microsoft

Image courtesy: Microsoft

In general, proximity placement groups can be used in any scenario where low latency is desirable. It could be as simple as two standalone VMs that need to communicate with each other. It could also be a complex multi-tier application with VMs deployed in multiple availability sets or in different scale sets.

For example, consider a multi-tier application with web, application, and database tiers deployed on standalone VMs, VM scale sets, or VMs in availability sets. Using the same proximity placement group ensures that all the machines in all these tiers are deployed in the same data center. That means the network latency for your app tier to read from or write to your database is considerably reduced, which delivers a better user experience. Note that these VMs could all still use different configurations or SKUs as mandated by specific tier performance requirements.

A proximity placement group is created as an Azure resource. You can choose the proximity placement group to be used when creating availability sets, VMs, or VM scale sets. While using availability sets and VM scale sets, the proximity placement group is configured at the resource level as opposed to the VM level.
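As a rough illustration of the paragraph above, the following Python sketch assembles an ARM template fragment in which an availability set references a proximity placement group at the resource level. The resource types and the `proximityPlacementGroup` property are the ones ARM uses for these resources; the API version, the resource names, the region, and the helper function names are assumptions chosen for this example, so check them against the current ARM reference before using the output.

```python
import json

# Assumption: an API version picked for illustration only.
API_VERSION = "2021-03-01"
PPG_TYPE = "Microsoft.Compute/proximityPlacementGroups"


def ppg_resource(name, location):
    """Return the ARM resource definition for a proximity placement group."""
    return {
        "type": PPG_TYPE,
        "apiVersion": API_VERSION,
        "name": name,
        "location": location,
        "properties": {"proximityPlacementGroupType": "Standard"},
    }


def ppg_reference(ppg_name):
    """Return the sub-resource reference that other resources use to join the group."""
    return {"id": f"[resourceId('{PPG_TYPE}', '{ppg_name}')]"}


def availability_set_resource(name, location, ppg_name):
    """Return an availability set that is placed in the given proximity placement group."""
    ref = ppg_reference(ppg_name)
    return {
        "type": "Microsoft.Compute/availabilitySets",
        "apiVersion": API_VERSION,
        "name": name,
        "location": location,
        # The group must exist before the availability set is created.
        "dependsOn": [ref["id"]],
        "sku": {"name": "Aligned"},
        "properties": {
            "platformFaultDomainCount": 2,
            "platformUpdateDomainCount": 5,
            # The group is set on the availability set itself.
            "proximityPlacementGroup": ref,
        },
    }


def build_template(ppg_name, avset_name, location):
    """Combine both resources into a single deployable template document."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            ppg_resource(ppg_name, location),
            availability_set_resource(avset_name, location, ppg_name),
        ],
    }


if __name__ == "__main__":
    # Hypothetical names and region, used only to show the generated JSON.
    print(json.dumps(build_template("ppg-demo", "avset-demo", "eastus"), indent=2))
```

A standalone VM would carry the same `proximityPlacementGroup` reference in its own `properties` block.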

Existing VMs, availability sets, and scale sets can also be moved into proximity placement groups. This requires the affected VMs to be stopped and redeployed into the proximity placement group so that the co-location requirements are met.

Best Practices with Proximity Placement Groups

Let’s take a look at some of the recommended best practices for using proximity placement groups.

- Accelerated networking: Proximity placement groups, combined with accelerated networking, help achieve the lowest latencies for applications. Accelerated networking uses single-root I/O virtualization (SR-IOV), which greatly improves VM networking performance and reduces jitter while lowering overall CPU utilization.

- Template deployment: Though proximity placement groups help in addressing network latency issues, they add a deployment constraint, since all the components in the group need to be allocated from the same data center. Because the data center is selected during the first deployment, any subsequent deployment could fail if a specific SKU is not supported by that data center’s hardware. To avoid this, it’s recommended to use a single ARM template for deployments that involve multiple VM SKUs. The ARM template automates the process while defining deployment dependencies that increase the deployment’s success rate.

- Reuse and redeployment: If you ever need to redeploy a placement group, make sure all the VMs that were previously deployed in that placement group have been deleted. If a deployment fails because a SKU is unavailable, changing the sequence during redeployment so that the failed SKUs are deployed first may help resolve the issue, as the deployment would then be initiated in a data center where those SKUs are available.

- Usage with availability zones: Proximity placement groups can’t span availability zones, but they can be used in application architectures that rely on availability zones to reduce latency between components within each zone. For example, in active-standby deployment architectures where VMs are deployed in different zones, a proximity placement group can be used within each zone.
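The ordering advice under "Reuse and redeployment" can be illustrated with a toy model. The sketch below is not how Azure's allocator actually works: it simply assumes that the first SKU deployed pins the group to the first data center that can host it, and that every later SKU must then be available in that same data center. The data center names and their SKU inventories are made up for the example.

```python
# Hypothetical inventory: which VM SKUs each (made-up) data center can host.
DATACENTERS = {
    "dc-a": {"Standard_D4s_v3", "Standard_E8s_v3"},
    "dc-b": {"Standard_D4s_v3", "Standard_E8s_v3", "Standard_M64s"},
}


def deploy_in_order(skus, datacenters=DATACENTERS):
    """Simulate deploying SKUs, in order, into one proximity placement group.

    Returns (pinned_datacenter, failed_skus). Assumption of this toy model:
    the first SKU pins the group to the first data center that supports it.
    """
    if not skus:
        raise ValueError("at least one SKU is required")

    first = skus[0]
    pinned = next(
        (name for name, supported in datacenters.items() if first in supported),
        None,
    )
    if pinned is None:
        # No data center can host the first SKU, so nothing can be placed.
        return None, list(skus)

    # Every later SKU must be available in the pinned data center.
    failed = [sku for sku in skus[1:] if sku not in datacenters[pinned]]
    return pinned, failed


if __name__ == "__main__":
    # Common SKU first: the group may be pinned where the rarer SKU is missing.
    print(deploy_in_order(["Standard_D4s_v3", "Standard_M64s"]))
    # Rarer SKU first: the group is pinned where both SKUs are available.
    print(deploy_in_order(["Standard_M64s", "Standard_D4s_v3"]))
```

In this model, deploying the common SKU first pins the group to `dc-a` and the rarer SKU then fails, while deploying the rarer SKU first pins the group to `dc-b`, where both fit.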

Cloud Volumes ONTAP and Proximity Placement Groups

NetApp Cloud Volumes ONTAP is an enterprise-class data management solution for Azure, AWS, and Google Cloud, based on the trusted ONTAP data management platform.

High availability, low-latency data access, and data resilience are key goals for applications managed by Cloud Volumes ONTAP, and proximity placement groups can have a big impact on how they’re achieved.

By using proximity placement groups, customers can design the Cloud Volumes ONTAP environment to deliver the lowest possible latencies, thereby enhancing performance.

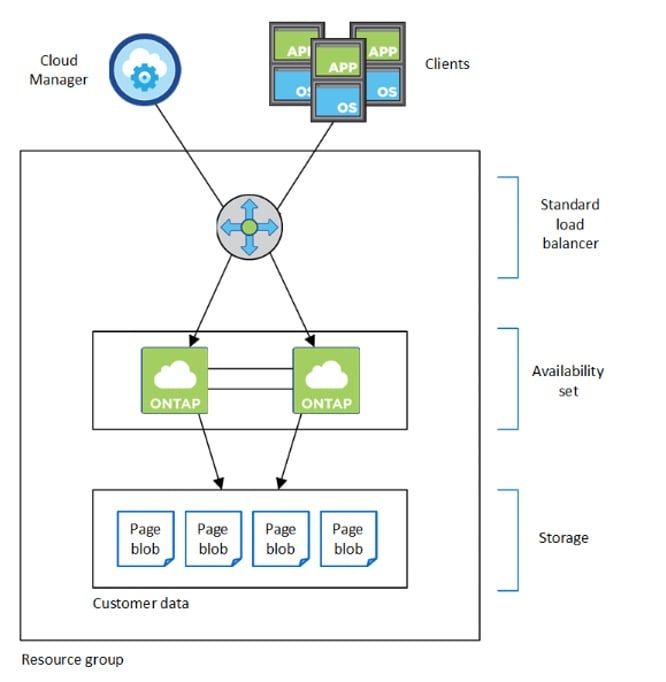

A highly available Cloud Volumes ONTAP deployment consists of two Cloud Volumes ONTAP instances deployed in availability sets, with traffic paths managed by a load balancer, as shown in the architecture below:

Learn more about Azure High Availability Architecture with Cloud Volumes ONTAP.

By adding the availability sets to proximity placement groups, the network latencies are considerably reduced, thereby improving the overall performance. Alternatively, you can choose to place the individual VMs in separate proximity placement groups in a primary/secondary deployment architecture if the goal is improved resiliency.

Customers can also choose to deploy single-node Cloud Volumes ONTAP instances and replicate Cloud Volumes ONTAP volumes across them for disaster recovery (DR), alongside highly available configurations in the primary region. If the deployments are in different availability zones for high availability, the overall round-trip latency will be around 2 ms, as found in Azure latency tests. This is less than the latency between Azure regions, but it can be brought down even further by grouping Cloud Volumes ONTAP instances together in an Azure proximity placement group within a zone.
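To see why a round trip of about 2 ms can matter, consider an operation that needs many sequential round trips between components. The sketch below is simple arithmetic: the 2 ms figure is the cross-zone value quoted above, while the function name and the count of 50 round trips are hypothetical and chosen only for illustration. Substitute a round-trip time measured in your own environment to estimate the effect inside a proximity placement group.

```python
# Cross-zone round-trip time quoted in the article, in milliseconds.
CROSS_ZONE_RTT_MS = 2.0


def network_wait_ms(sequential_round_trips, rtt_ms):
    """Return the total time spent waiting on the network for a chatty operation."""
    if sequential_round_trips < 0 or rtt_ms < 0:
        raise ValueError("inputs must be non-negative")
    return sequential_round_trips * rtt_ms


if __name__ == "__main__":
    # Hypothetical operation that makes 50 sequential calls across zones.
    print(network_wait_ms(50, CROSS_ZONE_RTT_MS))
```

With these example numbers, the operation spends 100 ms waiting on the network alone, which is why shaving the per-trip latency pays off for chatty workloads.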

Conclusion

Azure proximity placement groups help organizations gain better control over how their compute resources are deployed in Azure and over the associated network latencies. Combining Cloud Volumes ONTAP with proximity placement groups helps fine-tune this process by ensuring high availability of data without impacting performance and latency.

The benefits of Cloud Volumes ONTAP deployments in proximity placement groups are clear:

- Increase performance when coupled with an availability set due to proximity of resources.

- Achieve higher resiliency by creating several proximity placement groups and decoupling resources (primary-secondary single-node deployments).

- Lower latencies both in single-node and high availability configurations.

High availability is just the start of the benefits of a Cloud Volumes ONTAP deployment. The service spans hybrid and multicloud deployments, delivering a unified plane of data management without compromising on Azure backup data protection, security, or storage economy on Azure.

Cloud Volumes ONTAP simplifies data management with tools and features such as seamless data replication with SnapMirror®, space-efficient and instant data volume cloning with FlexClone®, and incremental, automatic NetApp Snapshot™ copies.