
August 2, 2022

Topics: Azure NetApp Files, Astra, Kubernetes Protection (Advanced, 25 minute read)

Introduction

NetApp® Astra™ Control is a solution that makes it easier to manage, protect, and move data-rich Kubernetes workloads within and across public clouds and on-premises. Astra Control provides persistent container storage that leverages NetApp’s proven and expansive storage portfolio in the public cloud and on-premises, and it also supports Azure managed disks as a storage backend.

Astra Control also offers a rich set of application-aware data management functions (such as snapshot and restore, backup and restore, activity logs, and active cloning) for the local data protection, disaster recovery, data audit, and mobility use cases of your modern apps. Astra Control provides complete protection of stateful Kubernetes applications by saving both the data and the metadata that constitute an application in Kubernetes, such as deployments, config maps, services, and secrets. Astra Control can be managed through its user interface, from any web browser, or through its powerful REST API.

Astra Control has two variants:

- Astra Control Service (ACS) – A fully managed application-aware data management service that supports Azure Kubernetes Service (AKS), Azure Disk Storage, and Azure NetApp Files (ANF).

- Astra Control Center (ACC) – Application-aware data management for on-premises Kubernetes clusters, delivered as a customer-managed Kubernetes application from NetApp.

To demonstrate Astra Control’s backup and recovery capabilities in AKS, we use CloudBees CI, a solution that provides continuous integration (CI) based on Jenkins instances. CloudBees Software Delivery Automation CI is a fully featured, cloud-native capability that can be hosted on-premises or in the public cloud and is used to deliver CI at scale. It provides a shared, centrally managed, self-service experience for all of your development teams running Jenkins. On modern cloud platforms, CloudBees CI is designed to run on Kubernetes. In this case, we deploy CloudBees CI on Azure Kubernetes Service (AKS).

The Kubernetes resources created by CloudBees CI are the following:

- 1 Load Balancer with a public IP address, which the main domain name (resolvable by DNS) normally points to, e.g., my-cloudbees.com

- 1 StatefulSet + 1 PVC + 1 Service + 1 Ingress for the CloudBees Jenkins Operations Center (CJOC): my-cloudbees.com/cjoc

- 1 StatefulSet + 1 PVC + 1 Service + 1 Ingress for each controller (that is, each Jenkins instance) created inside CloudBees: my-cloudbees.com/my-cool-cicd-1

- All Ingresses share the same public IP address: the address of the Load Balancer for the main domain name.

- With newer Kubernetes versions, CloudBees uses an ingress class of type nginx, deployed by its Helm chart, instead of the default ingress controller.

- The PVCs are backed by Azure NetApp Files and dynamically provisioned by Astra Trident, NetApp’s CSI-compliant Kubernetes storage orchestrator.

Thanks to the latest improvements in Astra Control Service, we can now use snapshots and backups to protect not only the data Jenkins stores in its PVCs but also ALL of the namespaced Kubernetes resources above.

Scenario

In the following scenario, we will demonstrate how Astra Control Service can protect all resources in a CloudBees CI deployment on an AKS cluster in a European region and recover the full application in a different AKS cluster in a US region.

Deploying CloudBees CI - Jenkins

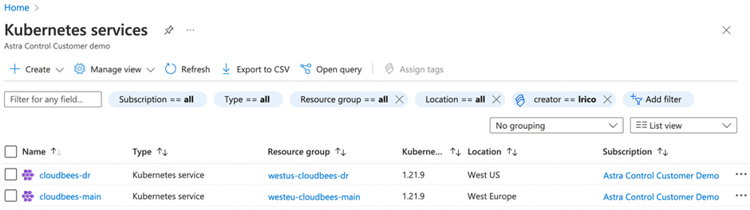

First, we create two AKS clusters: our main "production" cluster, called cloudbees-main, in the West Europe region, and a disaster recovery cluster, called cloudbees-dr, in the West US region.

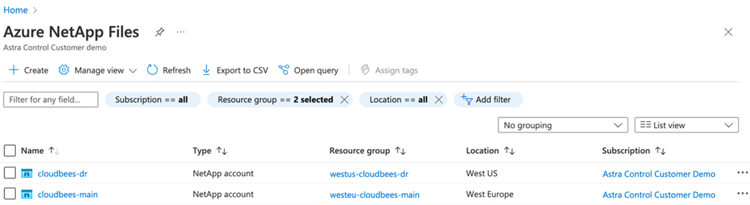

Then, we create Azure NetApp Files (ANF) accounts and capacity pools in both regions. We also have to connect each AKS cluster to the ANF account in the same region through a virtual network (VNet). Below, we can see the two ANF accounts, named after the AKS clusters and placed in the same resource groups and regions.
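The Azure side of this preparation can also be scripted. The following is a minimal sketch using the Azure CLI, not the exact commands used for this demo. The function name, VNet name, subnet name, address prefix, and pool name are all hypothetical. Connecting the AKS nodes to that VNet (through a shared or peered VNet) depends on your network design and is not shown. The Premium service level was chosen to match the netapp-anf-perf-premium storage class used later.

```shell
# Hypothetical helper: prepares one region (AKS cluster + ANF account + capacity pool).
# Everything except the Azure CLI commands themselves is a placeholder.
create_region_env() {
  rg=$1      # resource group shared by the cluster and the ANF account
  region=$2  # e.g. westeurope or westus
  name=$3    # cluster name, reused as the ANF account name (e.g. cloudbees-main)
  vnet=$4    # existing VNet reachable from the AKS nodes (assumption)

  # AKS cluster with three nodes, as in this demo
  az aks create --resource-group "$rg" --name "$name" --location "$region" \
    --node-count 3 --generate-ssh-keys || return 1

  # ANF volumes need a subnet delegated to Microsoft.NetApp/volumes
  az network vnet subnet create --resource-group "$rg" --vnet-name "$vnet" \
    --name anf-subnet --address-prefixes 10.20.0.0/24 \
    --delegations Microsoft.NetApp/volumes || return 1

  # NetApp account plus a Premium capacity pool (--size is in TiB)
  az netappfiles account create --resource-group "$rg" --name "$name" \
    --location "$region" || return 1
  az netappfiles pool create --resource-group "$rg" --account-name "$name" \
    --name pool-premium --location "$region" --size 4 --service-level Premium
}
```

For example, it would be called once as `create_region_env my-rg westeurope cloudbees-main my-vnet` and again with westus and cloudbees-dr. Here my-rg and my-vnet are placeholders.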

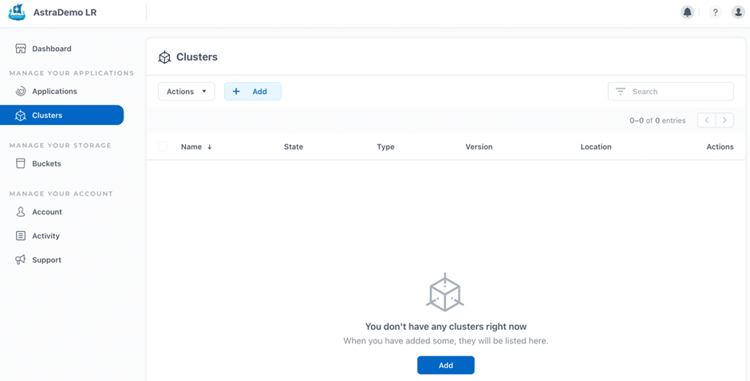

After that, we log in to our Astra Control Service (ACS) account and add both clusters so that they are registered with, and protected by, ACS.

Because our ACS console doesn't manage any Kubernetes clusters yet, we click the Add button in the Clusters section:

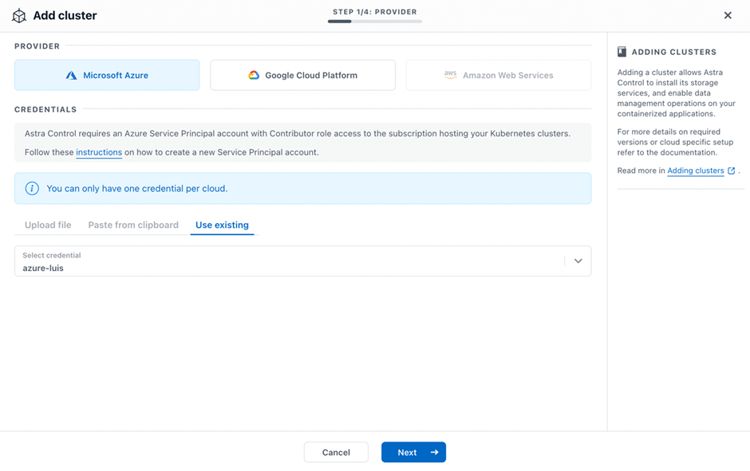

At the beginning of the wizard, we select Microsoft Azure and provide the appropriate credentials, so that ACS can use them to discover which AKS clusters are available to register.
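The Azure credential the wizard asks for is typically a service principal. Below is a minimal sketch using the Azure CLI. It assumes that granting the Contributor role on the subscription is acceptable in your environment. The function name, service principal name, and output file are hypothetical. The Astra Control Service documentation remains the reference for the exact role and JSON fields the wizard expects.

```shell
# Hypothetical helper: creates a service principal and saves its JSON credentials.
# $1 = service principal name, $2 = output file (both placeholders)
make_acs_credentials() {
  # ID of the subscription currently selected in the Azure CLI
  sub_id=$(az account show --query id --output tsv) || return 1
  # Contributor on the whole subscription, so AKS and ANF resources are visible
  az ad sp create-for-rbac --name "$1" --role contributor \
    --scopes "/subscriptions/$sub_id" > "$2"
}
```

For example: `make_acs_credentials sp-astra-cloudbees azure-sp.json`. The resulting file contains a client secret, so it should be stored accordingly.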

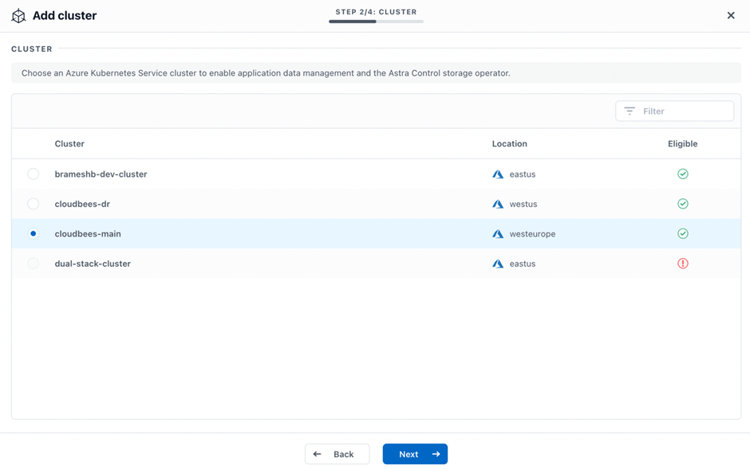

Next, we select the cloudbees-main cluster for registration. ACS checks that Azure NetApp Files is available to the cluster through the Astra Trident CSI provisioner, and shows a green OK mark in the Eligible column.

ACS automatically detects the ANF capacity pools reachable from the AKS cluster. It then installs and configures Astra Trident in the trident namespace of the cluster to enable dynamic provisioning of ANF volumes, creating a new StorageClass for ANF.

Note: ACS can also protect applications on AKS clusters that use Azure Disk for persistent storage.

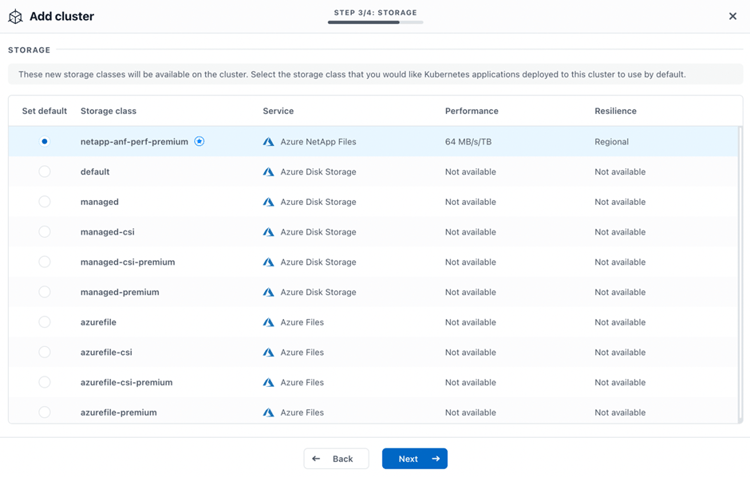

In the next step of the wizard, we select the CSI storage class to set as the default StorageClass for our AKS cluster. In this case, we choose the ANF storage class netapp-anf-perf-premium as the default.
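Once ACS has finished, the result can be checked from the cluster. This is a minimal sketch: `kubectl get pods -n trident` should show the Trident pods. The helper below (a hypothetical name) picks the class that `kubectl get storageclass` marks as `(default)`, which in this setup should be netapp-anf-perf-premium.

```shell
# Hypothetical helper: reads `kubectl get storageclass` output on stdin and
# prints the name of the class marked "(default)".
default_storage_class() {
  awk '$2 == "(default)" { print $1 }'
}

# Usage against the live cluster:
#   kubectl get pods -n trident
#   kubectl get storageclass | default_storage_class
```

Parsing the table keeps the sketch short. The underlying source of the "(default)" marker is the `storageclass.kubernetes.io/is-default-class` annotation, which could be queried with jsonpath instead.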

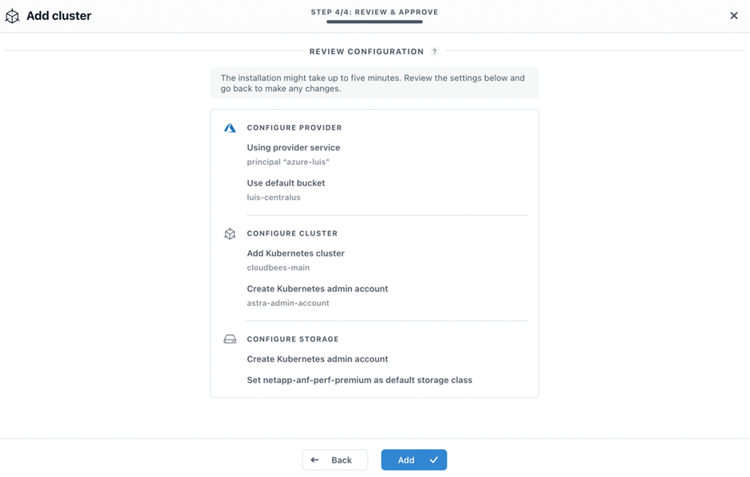

In the last step, a summary of our choices is shown for confirmation.

After we click "Add", ACS installs and configures Astra Trident in the AKS cluster.

We repeat the same steps for our disaster recovery cluster, cloudbees-dr.

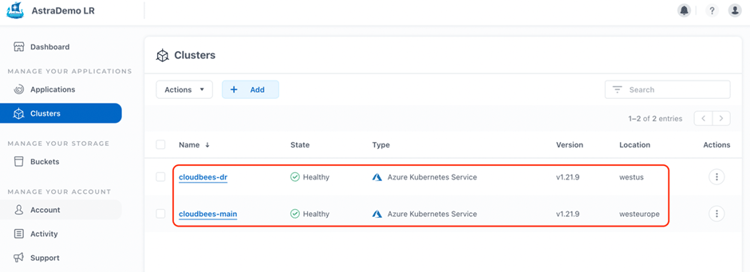

Both AKS clusters are now managed by ACS, and the console shows their health, location, and version (in this case, Kubernetes v1.21.9).

Now, we are ready to deploy CloudBees CI in our cloudbees-main AKS cluster, using ANF as the default persistent storage.

Following the CloudBees instructions for deploying CloudBees CI on modern cloud platforms, we use a Helm chart. We add the CloudBees repository, update it, create a dedicated namespace called cloudbees-core for our deployment, and install CloudBees CI with Helm, enabling the chart's ingress-nginx controller so that nginx is used as the ingress class:

$ kubectl cluster-info
Kubernetes control plane is running at https://cloudbees--westeu-cloudbees-b2896f-19fbb235.hcp.westeurope.azmk8s.io:443
CoreDNS is running at https://cloudbees--westeu-cloudbees-b2896f-19fbb235.hcp.westeurope.azmk8s.io:443/api/v1/namespaces/k...
Metrics-server is running at https://cloudbees--westeu-cloudbees-b2896f-19fbb235.hcp.westeurope.azmk8s.io:443/api/v1/namespaces/k...

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get nodes
NAME                                STATUS   ROLES   AGE   VERSION
aks-nodepool1-17876121-vmss000000   Ready    agent   18h   v1.21.9
aks-nodepool1-17876121-vmss000001   Ready    agent   18h   v1.21.9
aks-nodepool1-17876121-vmss000002   Ready    agent   18h   v1.21.9

$ kubectl get namespaces
NAME              STATUS   AGE
default           Active   18h
kube-node-lease   Active   18h
kube-public       Active   18h
kube-system       Active   18h
trident           Active   15h

$ helm repo add cloudbees https://public-charts.artifacts.cloudbees.com/repository/public/
"cloudbees" has been added to your repositories

$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "cloudbees" chart repository
...Successfully got an update from the "bitnami" chart repository
...Successfully got an update from the "azure-marketplace" chart repository
Update Complete. ⎈Happy Helming!⎈

$ kubectl create namespace cloudbees-core
namespace/cloudbees-core created

$ helm install cloudbees-core \
    cloudbees/cloudbees-core \
    --namespace cloudbees-core \
    --set ingress-nginx.Enabled=true
NAME: cloudbees-core
LAST DEPLOYED: Thu Jun 16 13:08:42 2022
NAMESPACE: cloudbees-core
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Once Operations Center is up and running, get your initial admin user password by running:
  kubectl rollout status sts cjoc --namespace cloudbees-core
  kubectl exec cjoc-0 --namespace cloudbees-core -- cat /var/jenkins_home/secrets/initialAdminPassword
2. Get the OperationsCenter URL to visit by running these commands in the same shell:
  If you have an ingress controller, you can access Operations Center through its external IP or hostname.
  Otherwise you can use the following method:
  export POD_NAME=$(kubectl get pods --namespace cloudbees-core -l "app.kubernetes.io/instance="cloudbees-core",app.kubernetes.io/component=cjoc" -o jsonpath="{.items[0].metadata.name}")
  echo http://127.0.0.1:80
  kubectl port-forward $POD_NAME 80:80
3. Login with the password from step 1.
For more information on running CloudBees Core on Kubernetes, visit:
https://go.cloudbees.com/docs/cloudbees-core/cloud-admin-guide/
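If the installation ever has to be repeated, for example when rebuilding a cluster, the steps above can be wrapped in a single re-runnable function. This is a sketch, not the procedure documented by CloudBees. It replaces `helm install` with `helm upgrade --install` and uses Helm's `--create-namespace` flag instead of the separate `kubectl create namespace`. The function name is hypothetical.

```shell
# Hypothetical helper: installs (or upgrades) CloudBees CI and prints the
# initial admin password, mirroring the commands and chart NOTES shown above.
deploy_cloudbees() {
  ns=${1:-cloudbees-core}
  helm repo add cloudbees https://public-charts.artifacts.cloudbees.com/repository/public/
  helm repo update || return 1
  # upgrade --install installs the release if it is absent, otherwise upgrades it
  helm upgrade --install cloudbees-core cloudbees/cloudbees-core \
    --namespace "$ns" --create-namespace \
    --set ingress-nginx.Enabled=true || return 1
  # Wait for the Operations Center StatefulSet, then read the one-time password
  kubectl rollout status sts cjoc --namespace "$ns" || return 1
  kubectl exec cjoc-0 --namespace "$ns" -- cat /var/jenkins_home/secrets/initialAdminPassword
}
```

Running `deploy_cloudbees` with kubectl pointing at cloudbees-main reproduces the session above in one step.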

After a few minutes, we check that all the resources are deployed and retrieve the temporary admin password for configuring CloudBees CI:

$ kubectl -n cloudbees-core get pvc,all,ing
NAME                                        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS              AGE
persistentvolumeclaim/jenkins-home-cjoc-0   Bound    pvc-8d52d00a-9760-4f58-838a-2a1b889be99e   100Gi      RWO            netapp-anf-perf-premium   63m

NAME                                                          READY   STATUS    RESTARTS   AGE
pod/cjoc-0                                                    1/1     Running   0          63m
pod/cloudbees-core-ingress-nginx-controller-d4784546c-lphd5   1/1     Running   0          63m

NAME                                                        TYPE           CLUSTER-IP     EXTERNAL-IP      PORT(S)                      AGE
service/cjoc                                                ClusterIP      10.0.195.237   <none>           80/TCP,50000/TCP             63m
service/cloudbees-core-ingress-nginx-controller             LoadBalancer   10.0.124.250   20.126.199.140   80:31865/TCP,443:30049/TCP   63m
service/cloudbees-core-ingress-nginx-controller-admission   ClusterIP      10.0.251.134   <none>           443/TCP                      63m

NAME                                                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/cloudbees-core-ingress-nginx-controller   1/1     1            1           63m

NAME                                                                DESIRED   CURRENT   READY   AGE
replicaset.apps/cloudbees-core-ingress-nginx-controller-d4784546c   1         1         1       63m

NAME                    READY   AGE
statefulset.apps/cjoc   1/1     63m

NAME                             CLASS   HOSTS   ADDRESS          PORTS   AGE
ingress.networking.k8s.io/cjoc   nginx   *       20.126.199.140   80      63m

$ kubectl rollout status sts cjoc --namespace cloudbees-core
statefulset rolling update complete 1 pods at revision cjoc-c8b96dfdc...

$ kubectl exec cjoc-0 --namespace cloudbees-core -- cat /var/jenkins_home/secrets/initialAdminPassword
73f27870bb81419081e04ad009819fa7

We can also check that a new cluster-scoped resource, an IngressClass named nginx, has been created:

$ kubectl get ingressclass -o yaml
apiVersion: v1
items:
- apiVersion: networking.k8s.io/v1
  kind: IngressClass
  metadata:
    annotations:
      meta.helm.sh/release-name: cloudbees-core
      meta.helm.sh/release-namespace: cloudbees-core
    creationTimestamp: "2022-06-16T11:08:48Z"
    generation: 1
    labels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: cloudbees-core
      app.kubernetes.io/managed-by: Helm
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/version: 1.1.0
      helm.sh/chart: ingress-nginx-4.0.13
    name: nginx
    resourceVersion: "212469"
    uid: ddaf66b2-339e-48f8-8394-3bb19dfe5688
  spec:
    controller: k8s.io/ingress-nginx
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

To simulate a full disaster recovery, we treat the public IP address of the Load Balancer as if it were resolvable through an external DNS server, but we actually map it in our local /etc/hosts. We call the domain my-cloudbees.com and use it for everything related to our CloudBees CI deployment:

$ cat /etc/hosts

##

# Host Database

#

# localhost is used to configure the loopback interface

# when the system is booting. Do not change this entry.

##

127.0.0.1 localhost

255.255.255.255 broadcasthost

::1 localhost

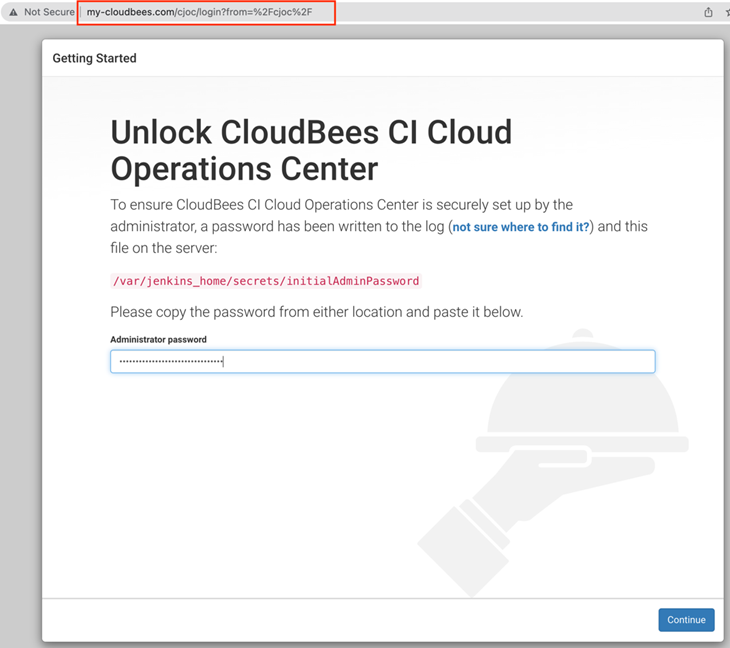

20.126.199.140 my-cloudbees.com Now, we can navigate in our local browser to my-cloudbees.com and complete the configuration of the CloudBees CI.
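Instead of reading the EXTERNAL-IP column by eye, the address for the /etc/hosts entry can be queried directly. This is a minimal sketch. It assumes the service name cloudbees-core-ingress-nginx-controller shown in the earlier listing, and the function name is hypothetical.

```shell
# Hypothetical helper: prints the public IP of the ingress-nginx LoadBalancer
# service (service name as created by the chart in this demo).
ingress_ip() {
  kubectl --namespace "${1:-cloudbees-core}" \
    get service cloudbees-core-ingress-nginx-controller \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Example: print the /etc/hosts line for the demo domain
#   echo "$(ingress_ip) my-cloudbees.com"
```

The same query works unchanged in the cloudbees-dr cluster after the restore, where it returns the new Load Balancer address.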

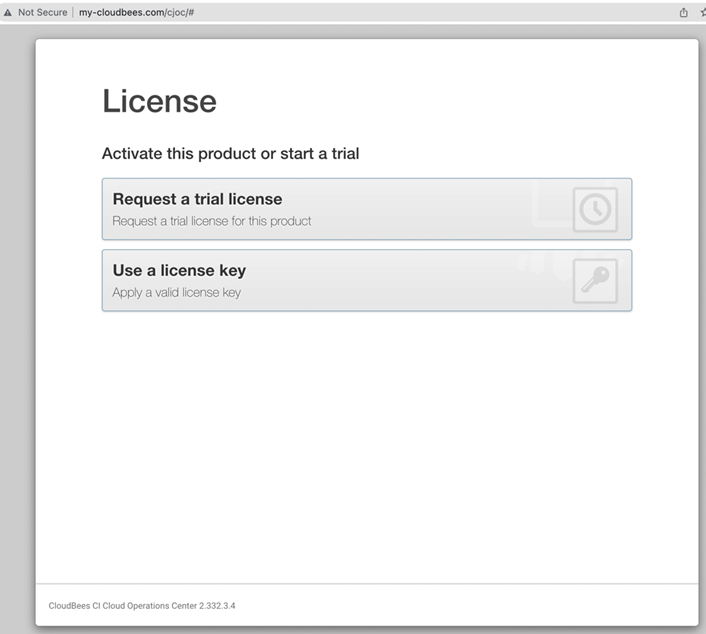

In the next step, we can request a trial license to activate the product, filling in a form with our corporate details.

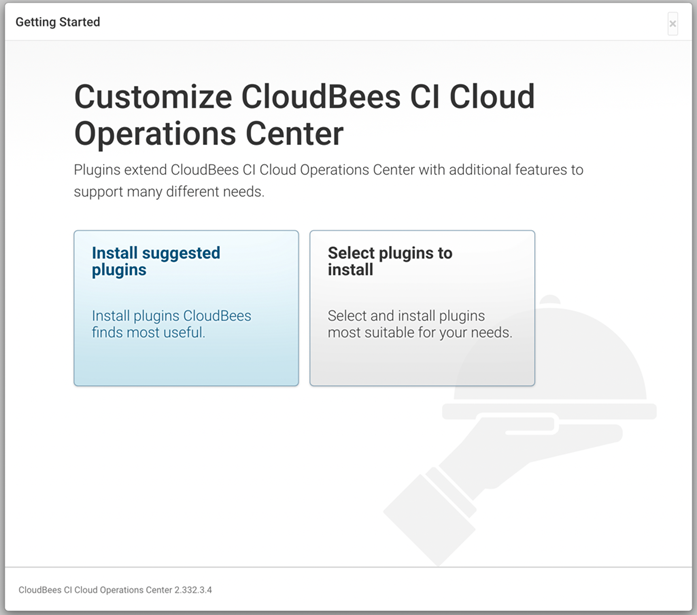

After that, CloudBees CI offers to install a set of recommended plug-ins for general management.

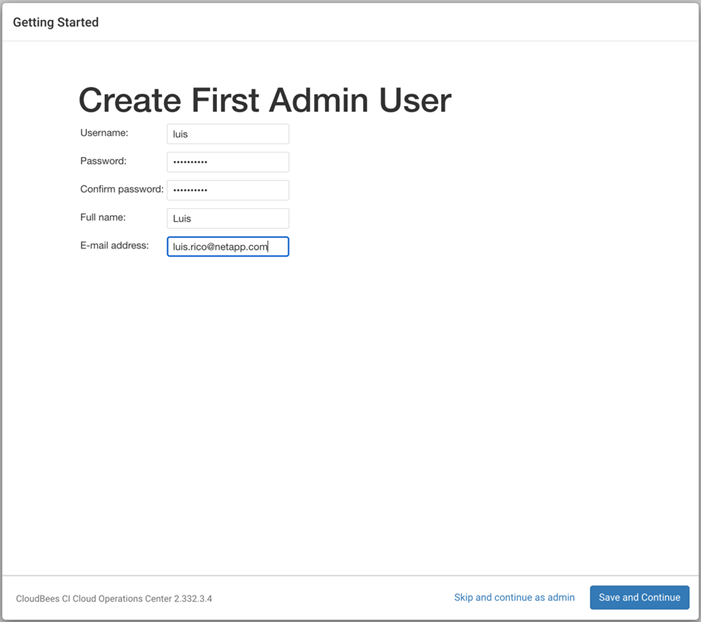

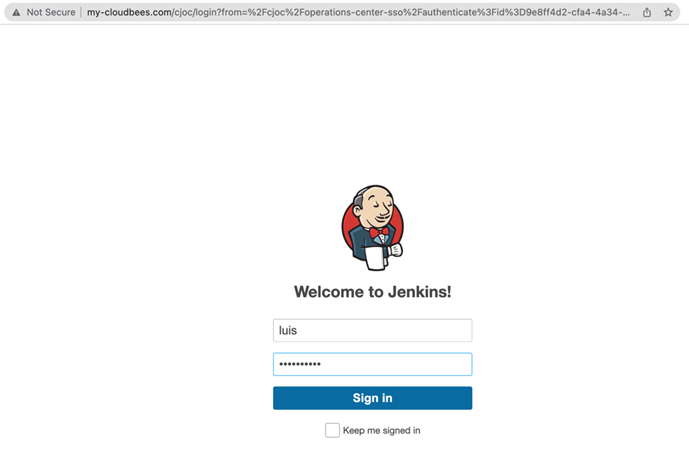

Next, we create our first admin user, luis, so that we no longer need the temporary admin password.

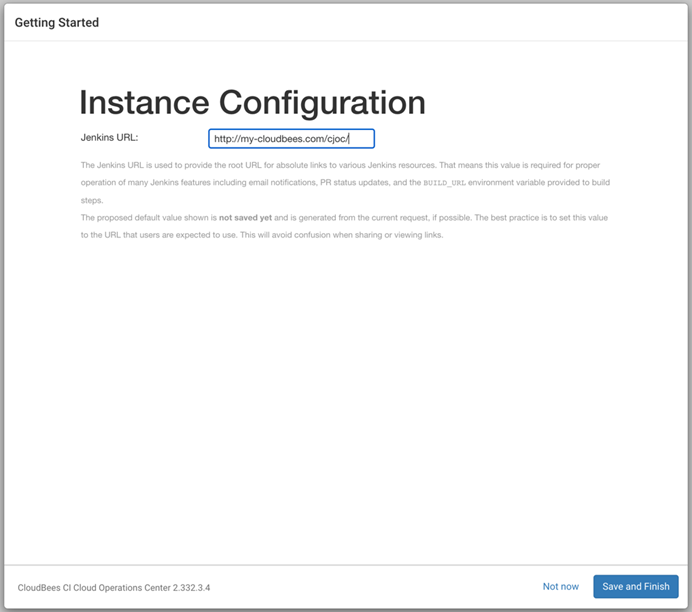

CloudBees CI is then configured with the URL we used in the browser: my-cloudbees.com/cjoc

This matters for disaster recovery. All components are served as paths under the same domain name, and therefore from the same public IP address, so recovery only requires repointing that one name. We update the corporate DNS (in our case, the local /etc/hosts) so that it resolves to the IP address of the recovered CloudBees CI service, which will be the public IP address of the new Load Balancer in our cloudbees-dr AKS cluster. Using a domain name simplifies recovery, because all Jenkins instances remain reachable under that same name after disaster recovery.
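For the /etc/hosts case used in this demo, the DNS change can be sketched as a small shell function. It is a hypothetical helper, not part of CloudBees CI or ACS. It comments out any active entry for the domain, as we do by hand at the end of the recovery, and appends the new address. With a real corporate DNS, the equivalent change would be made in the DNS zone instead.

```shell
# Hypothetical helper: repoint_host FILE DOMAIN NEW_IP
# Comments out active entries for DOMAIN and appends "NEW_IP DOMAIN".
repoint_host() {
  file=$1 domain=$2 ip=$3
  tmp=$(mktemp) || return 1
  # Prefix '#' to non-comment lines that list DOMAIN as one of their hostnames
  awk -v d="$domain" '
    $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == d) { print "#" $0; next } }
    { print }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  printf '%s %s\n' "$ip" "$domain" >> "$tmp"
  # Overwrite in place so the file keeps its original owner and permissions
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Because /etc/hosts is owned by root, the function would be run as root, for example `repoint_host /etc/hosts my-cloudbees.com 20.228.87.215` in a root shell.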

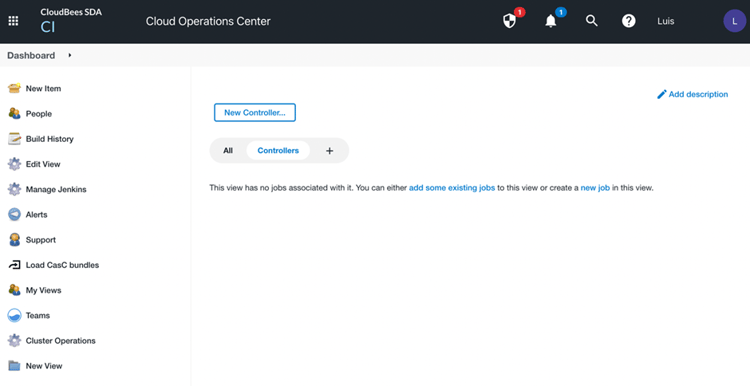

After that, we land on the CJOC (CloudBees Jenkins Operations Center) dashboard.

Configuring CloudBees-CI-Jenkins

To demonstrate how Astra Control Service protects, and provides DR for, a complex and massively scalable Kubernetes deployment such as CloudBees CI, we configure our CloudBees CI deployment to resemble a more realistic environment.

CloudBees CI lets us centrally manage and control the Jenkins instances that provide CI/CD pipelines for developing new applications. Each Jenkins instance is called a controller. As explained before, each controller creates the following Kubernetes resources in the cloudbees-core namespace (controllers can also be created in other namespaces): 1 StatefulSet + 1 PVC + 1 Service + 1 Ingress. The Ingress exposes the controller as a new path under the main domain name, for example: my-cloudbees.com/my-cool-cicd-1
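To see the four resources that belong to one controller, the namespace can be listed by name and filtered. This sketch assumes the naming visible in this deployment: the StatefulSet, Service, and Ingress are named after the controller, and the PVC is named jenkins-home-<controller>-0. The function name is hypothetical.

```shell
# Hypothetical helper: filters `kubectl get ... -o name` output (stdin) down to
# the objects of one controller, e.g. my-cool-cicd1 (but not my-cool-cicd10).
controller_resources() {
  grep -E "/(jenkins-home-)?$1(-0)?\$"
}

# Usage:
#   kubectl -n cloudbees-core get statefulsets,pvc,services,ingresses -o name |
#     controller_resources my-cool-cicd1
```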

Dozens of controllers can be created in a single CloudBees CI deployment, dedicating Jenkins instances to different development teams or applications.

We create four new controllers (Jenkins instances), each containing some pipeline jobs. These are example pipelines. To simulate the persistent storage usage of Jenkins instances, however, one of them has a special pipeline that artificially stores a large file every time it is built.

This is the code of that pipeline, called make-pv-fat, which artificially consumes storage in the PV:

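pipeline {
    agent {
        kubernetes {
            label 'mypodtemplate-v2'
            // sh steps run in the 'jnlp' container; the extra 'ubuntu'
            // container is only used inside container('ubuntu') blocks
            defaultContainer 'jnlp'
            yaml """
apiVersion: v1
kind: Pod
metadata:
  labels:
    some-label: some-label-value
spec:
  containers:
  - name: ubuntu
    image: ubuntu
    command:
    - cat
    tty: true
"""
        }
    }
    stages {
        stage('Inflate') {
            steps {
                sh 'date'
                // 10000 blocks of 1 MiB of random data in a timestamped .tmp file
                sh 'dd if=/dev/urandom of=./$(date +%Y%m%d-%H%M).tmp bs=1M count=10000'
            }
        }
        stage('Archive') {
            steps {
                // Copy the .tmp file into this build's archive, which by default
                // is stored on the controller's JENKINS_HOME PV
                archiveArtifacts artifacts: '*.tmp',
                                 allowEmptyArchive: true,
                                 fingerprint: true,
                                 onlyIfSuccessful: true
            }
        }
    }
}

As we can see, the pipeline simply creates a file of about 10 GB of randomly generated data with dd and stores it in the build archive of the Jenkins instance.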
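The size of each archived file follows from the dd arguments. `bs=1M count=10000` writes 10000 blocks of 1 MiB (1,048,576 bytes) each, that is 10,485,760,000 bytes, about 10.5 GB or 9.77 GiB per build. The helpers below (hypothetical names) reproduce that arithmetic, which explains why two builds account for roughly 20 GB in the controller's PV.

```shell
# Hypothetical helpers reproducing the dd arithmetic of make-pv-fat.
# dd's "1M" block size means 1024 * 1024 bytes.
bytes_per_build() { echo $((1024 * 1024 * 10000)); }

# Bytes archived after N builds (each build archives one new file)
archived_bytes() { echo $(( $1 * $(bytes_per_build) )); }

# Convert bytes to GiB with two decimals
to_gib() { awk -v b="$1" 'BEGIN { printf "%.2f\n", b / 1073741824 }'; }
```

For example, `to_gib "$(archived_bytes 2)"` prints 19.53, which is roughly 20.97 GB in decimal units.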

We won't go through the details and screenshots of how to configure CloudBees CI to create all these controllers and pipelines, but we show some pieces of the final configuration in the CloudBees console. For more details, see the official documentation: https://docs.cloudbees.com/docs/cloudbees-ci/latest/cloud-setup-guide/cloud-setup-guide-intro

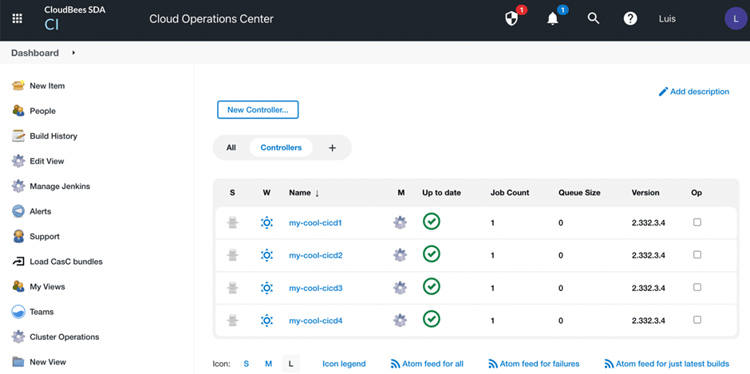

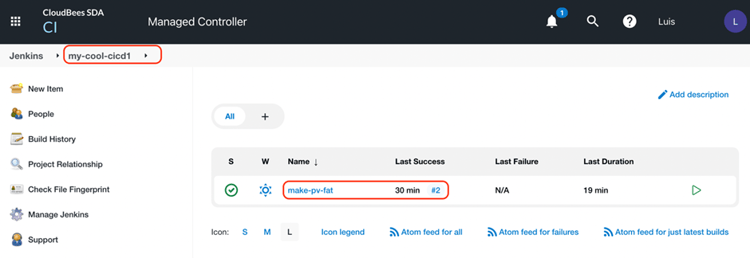

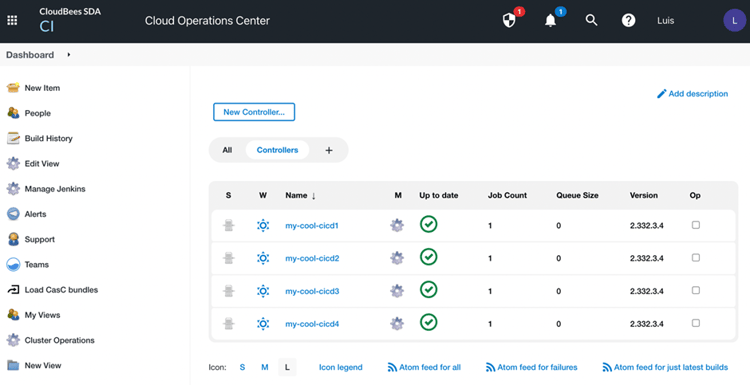

The four controllers, Jenkins instances, up and running:

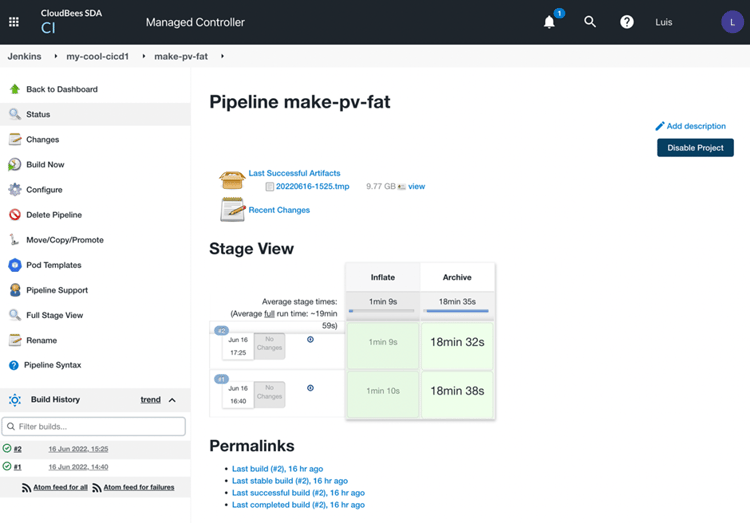

The pipeline job mentioned earlier called make-pv-fat in the first Controller my-cool-cicd1,

with 2 builds already executed:

It's important to remark again that the pipeline and controller, as marked in red, are available at http://my-cloudbees.com/my-cool-cicd1 that being the subdomain for that Jenkins instance, CloudBees controller.

After this configuration of CloudBees CI, the number of Kubernetes resources in our cloudbees-core namespace has grown considerably, making it more complex to protect as an entire application in Kubernetes with a lot of dependencies between them:

$ kubectl -n cloudbees-core get pvc,all,ing
NAME                                                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS              AGE
persistentvolumeclaim/jenkins-home-cjoc-0            Bound    pvc-8d52d00a-9760-4f58-838a-2a1b889be99e   100Gi      RWO            netapp-anf-perf-premium   5h16m   (5)
persistentvolumeclaim/jenkins-home-my-cool-cicd1-0   Bound    pvc-2076e858-4b91-4ee7-9680-58bf3290029e   150Gi      RWO            netapp-anf-perf-premium   143m    (7)
persistentvolumeclaim/jenkins-home-my-cool-cicd2-0   Bound    pvc-452e271b-62b3-44c3-80e0-d54818754454   100Gi      RWO            netapp-anf-perf-premium   135m    (7)
persistentvolumeclaim/jenkins-home-my-cool-cicd3-0   Bound    pvc-dec3a2be-c815-4353-b8c2-74f49457e80f   125Gi      RWO            netapp-anf-perf-premium   114m    (7)
persistentvolumeclaim/jenkins-home-my-cool-cicd4-0   Bound    pvc-37fd5e5d-1477-4379-ab35-a8b4adf36d5b   175Gi      RWO            netapp-anf-perf-premium   103m    (7)

NAME                                                          READY   STATUS    RESTARTS   AGE
pod/cjoc-0                                                    1/1     Running   0          5h16m   (5)
pod/cloudbees-core-ingress-nginx-controller-d4784546c-lphd5   1/1     Running   0          5h16m   (2)
pod/my-cool-cicd1-0                                           1/1     Running   0          143m    (7)
pod/my-cool-cicd2-0                                           1/1     Running   0          135m    (7)
pod/my-cool-cicd3-0                                           1/1     Running   0          114m    (7)
pod/my-cool-cicd4-0                                           1/1     Running   0          103m    (7)

NAME                                                        TYPE           CLUSTER-IP     EXTERNAL-IP      PORT(S)                      AGE
service/cjoc                                                ClusterIP      10.0.195.237   <none>           80/TCP,50000/TCP             5h16m   (7)
service/cloudbees-core-ingress-nginx-controller             LoadBalancer   10.0.124.250   20.126.199.140   80:31865/TCP,443:30049/TCP   5h16m   (1)
service/cloudbees-core-ingress-nginx-controller-admission   ClusterIP      10.0.251.134   <none>           443/TCP                      5h16m
service/my-cool-cicd1                                       ClusterIP      10.0.243.43    <none>           80/TCP,50001/TCP             143m    (6)
service/my-cool-cicd2                                       ClusterIP      10.0.34.177    <none>           80/TCP,50002/TCP             135m    (6)
service/my-cool-cicd3                                       ClusterIP      10.0.13.172    <none>           80/TCP,50003/TCP             114m    (6)
service/my-cool-cicd4                                       ClusterIP      10.0.148.35    <none>           80/TCP,50004/TCP             103m    (6)

NAME                                                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/cloudbees-core-ingress-nginx-controller   1/1     1            1           5h16m   (2)

NAME                                                                DESIRED   CURRENT   READY   AGE
replicaset.apps/cloudbees-core-ingress-nginx-controller-d4784546c   1         1         1       5h16m   (2)

NAME                             READY   AGE
statefulset.apps/cjoc            1/1     5h16m   (5)
statefulset.apps/my-cool-cicd1   1/1     143m    (7)
statefulset.apps/my-cool-cicd2   1/1     135m    (7)
statefulset.apps/my-cool-cicd3   1/1     114m    (7)
statefulset.apps/my-cool-cicd4   1/1     103m    (7)

NAME                                      CLASS   HOSTS   ADDRESS          PORTS   AGE
ingress.networking.k8s.io/cjoc            nginx   *       20.126.199.140   80      5h16m   (3)
ingress.networking.k8s.io/my-cool-cicd1   nginx   *       20.126.199.140   80      143m    (4)
ingress.networking.k8s.io/my-cool-cicd2   nginx   *       20.126.199.140   80      135m    (4)
ingress.networking.k8s.io/my-cool-cicd3   nginx   *       20.126.199.140   80      114m    (4)
ingress.networking.k8s.io/my-cool-cicd4   nginx   *       20.126.199.140   80      103m    (4)

The numbers in parentheses at the end of the lines above mark the following components:

- (1) The Load Balancer service and its public IP address: the main entry point from the outside world, sending traffic to (2).

- (2) The cloudbees-core-ingress-nginx-controller pod, ReplicaSet, and Deployment, which route traffic, based on the URL path, to the following nginx-class Ingresses.

- (3) The Ingress for the CJOC Operations Center, created by default when CloudBees CI is deployed.

- (4) The Ingresses for each controller (Jenkins instance) that has been created.

- (5) The Service handling the traffic from the CJOC Ingress (3), plus the StatefulSet, PVC, and pod for CJOC.

- (6) The Services handling the traffic from the controller Ingresses (4), sending it to (7).

- (7) The pods, StatefulSets, and PVCs of each controller (Jenkins instance).

Protecting CloudBees-CI-Jenkins with Astra Control Service

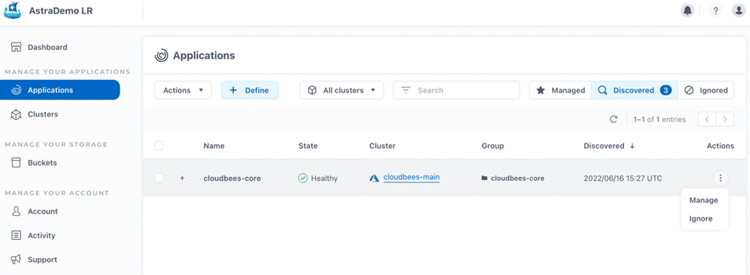

Switching to the ACS UI, we can see that ACS has discovered the CloudBees CI application and the cloudbees-core namespace in the "Discovered" tab of the Applications section, and we can choose to manage the namespace.

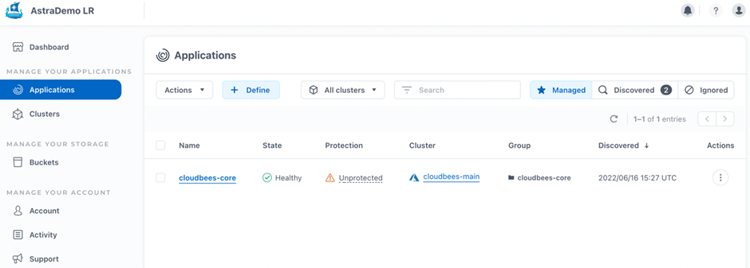

Now that the namespace is visible in the "Managed" tab, a warning indicates that it is "Unprotected".

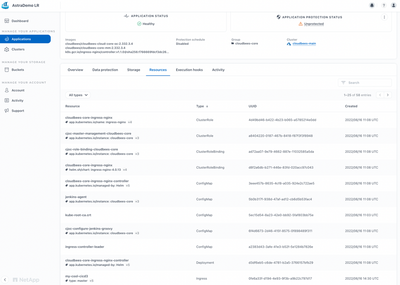

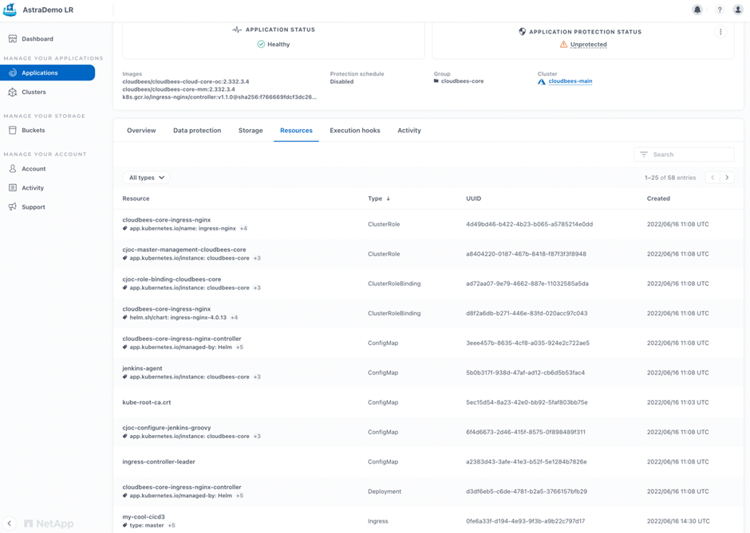

Before we start protecting the application, let's look at the Kubernetes resources, visible in the UI, that we are going to protect.

As we can see, there are 58 Kubernetes resources that Astra will protect. In addition to the Deployments, StatefulSets, Services, ReplicaSets, Pods, PVCs, and Ingresses explained earlier, there are ClusterRole, ClusterRoleBinding, Role, RoleBinding, ConfigMap, Secret, ServiceAccount, and ValidatingWebhookConfiguration objects associated with the cloudbees-core namespace.
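As a rough cross-check from the command line, every listable namespaced object in cloudbees-core can be counted with kubectl. This is a sketch with a hypothetical function name. Its result will not necessarily match the 58 shown by ACS. It counts every listable namespaced type (including, for example, Events and Endpoints) and omits cluster-scoped objects such as ClusterRoles and ClusterRoleBindings.

```shell
# Hypothetical helper: counts every listable namespaced object in a namespace.
count_namespaced_objects() {
  ns=${1:-cloudbees-core}
  # One `kubectl get` per namespaced resource type; missing types are skipped
  kubectl api-resources --verbs=list --namespaced --output name |
    xargs -n 1 kubectl get --namespace "$ns" --ignore-not-found --output name |
    wc -l
}
```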

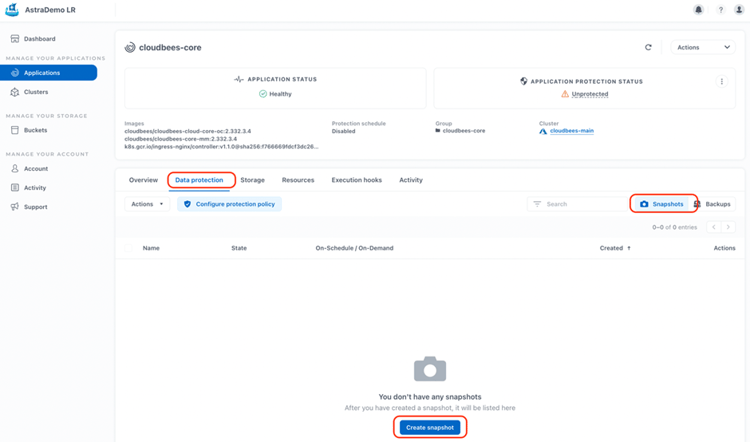

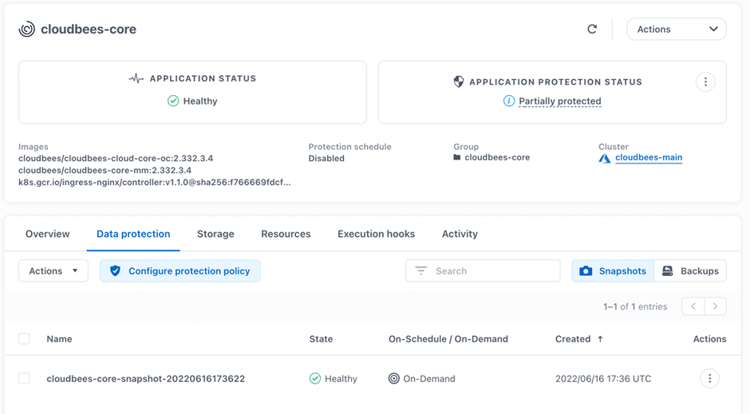

In the "Data protection" tab, under the "Snapshots" category, we can take an on-demand snapshot:

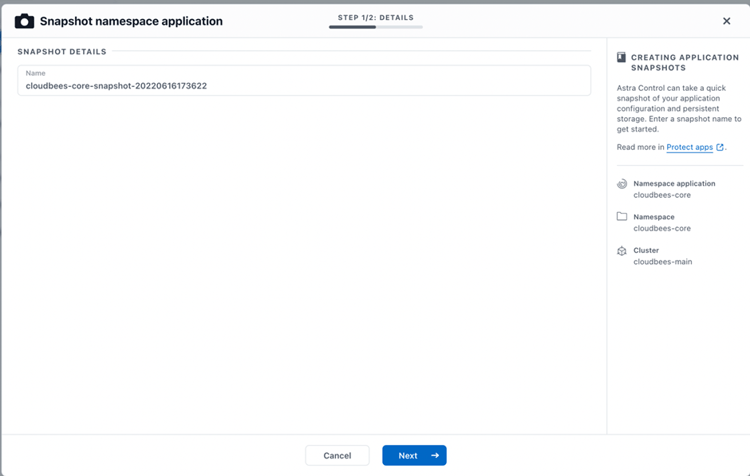

A wizard asks for a name for the snapshot:

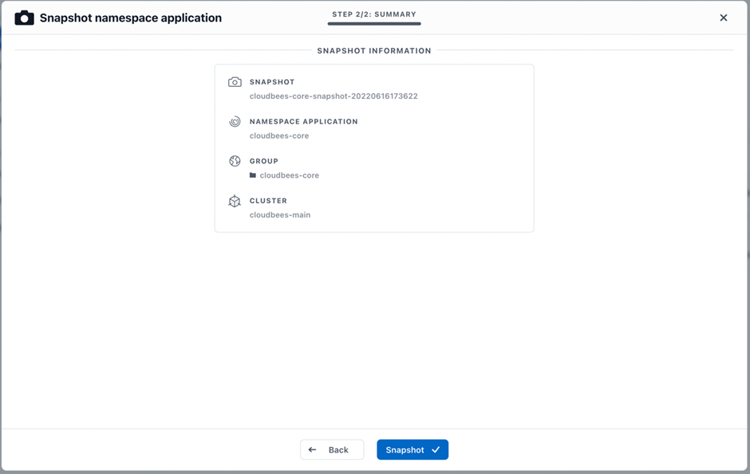

A summary window then asks us to confirm the selection.

After a few seconds, the snapshot is complete and available. The PVC data is captured with CSI-compliant snapshots, and all the Kubernetes resources are saved safely as well.

Snapshots are local to the AKS cluster: if the cluster is destroyed or the namespace is accidentally deleted, the PVC snapshots are lost. To provide disaster recovery, we need backups. Backups are stored externally in object storage, which can be configured with cross-region redundancy for additional protection, as explained here: https://docs.netapp.com/us-en/astra-control-service/release-notes/known-issues.html#azure-backup-buc...
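CSI snapshots are themselves Kubernetes objects, so they can be inspected in the cluster. This also illustrates why they disappear together with it. The minimal sketch below assumes that the standard CSI snapshot API (the VolumeSnapshot and VolumeSnapshotContent CRDs) is installed in the AKS cluster and that the ACS snapshots show up there. The function name is hypothetical.

```shell
# Hypothetical helper: lists the CSI snapshot objects related to a namespace.
show_csi_snapshots() {
  ns=${1:-cloudbees-core}
  # Namespaced snapshot objects, one per PVC per snapshot
  kubectl --namespace "$ns" get volumesnapshots
  # Cluster-scoped objects representing the snapshots on the storage backend (ANF)
  kubectl get volumesnapshotcontents
}
```

Both object types live in the cluster's API server, so deleting the cluster removes them along with the records of the snapshots. Backups in object storage do not have this limitation.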

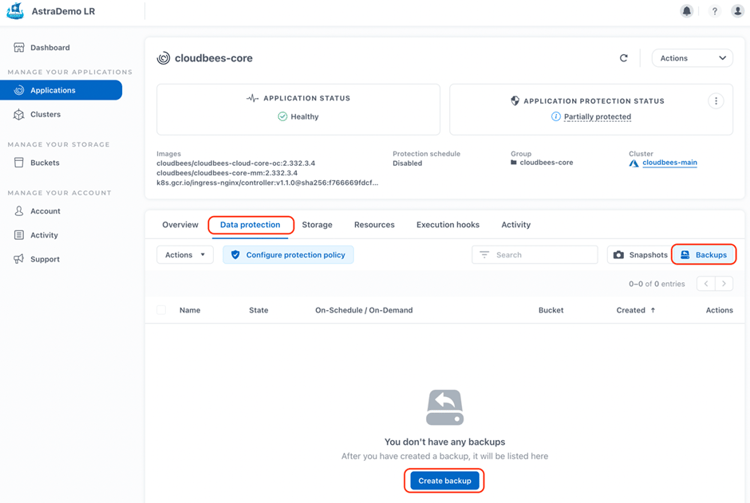

In the "Data Protection" tab, under the "Backups" category, we can take an on-demand backup:

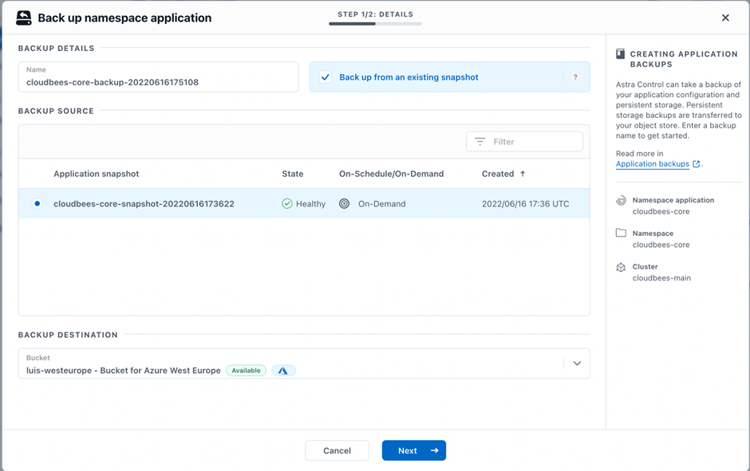

Another wizard guides us through creating the backup. We provide a name for the backup and select the object storage bucket to use as its destination. (ACS stores backups in object storage buckets, which can be specified by the user or configured automatically by the service.) We also decide whether to use an existing snapshot or to create a new one while taking the backup.

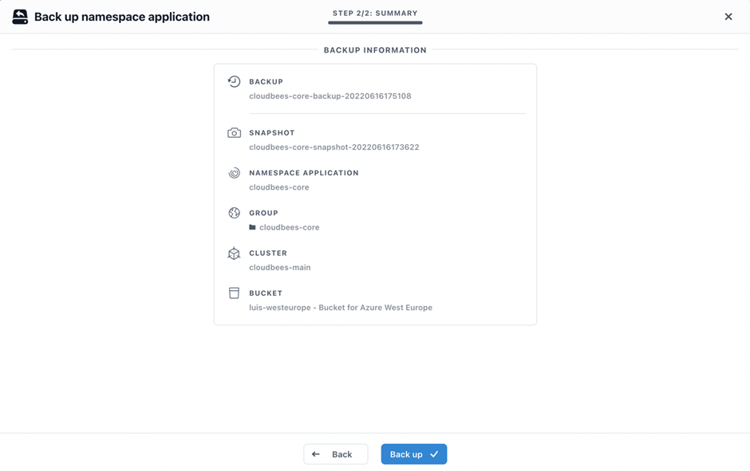

Then a summary window appears for confirmation:

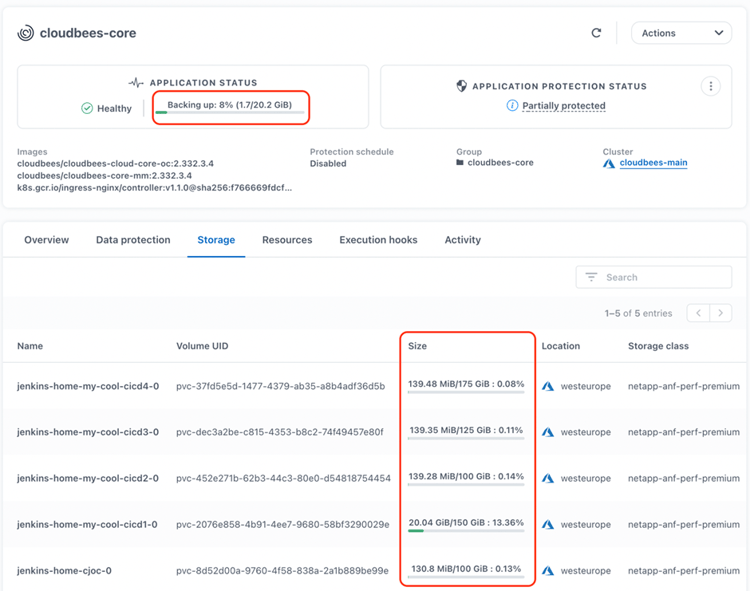

When the backup starts, a progress bar appears in the application status. The "Storage" tab also shows the used space of the PVCs provisioned from the ANF storage class.

The largest consumer is the PVC of the Jenkins instance running the make-pv-fat pipeline (20 GB, from its two builds).

With ACS, we can configure a protection policy that takes snapshots and backups periodically (hourly, daily, weekly and monthly) to match RPO/RTO goals for the application.

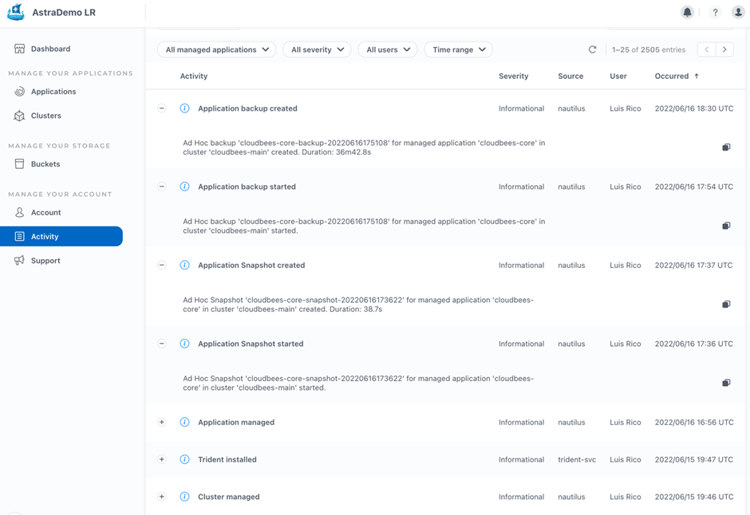

In the Activity section of the ACS console, we can also track all important activities, such as snapshot and backup creation, along with the time each activity took.

Simulating disaster and recovering the application to another cluster

In the next step, we want to test the recovery of the CloudBees CI platform after a simulated disaster.

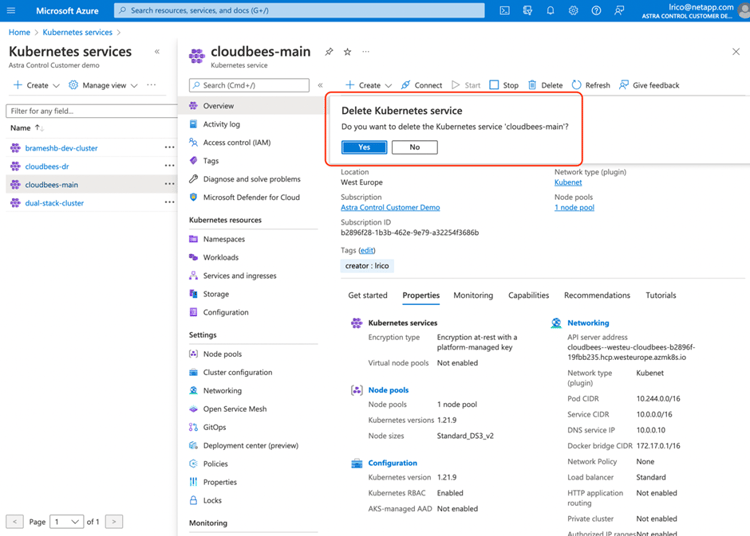

We'll destroy the cloudbees-main AKS cluster to simulate that disaster and recover from the backup into the cloudbees-dr AKS cluster.

The only resource that ACS does not protect today is the cluster-scoped IngressClass, which CloudBees CI deploys as part of its Helm chart.
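The IngressClass has to be carried over by hand, so the export-and-clean step can be scripted. This is a minimal sketch with a hypothetical function name. It uses grep to drop the server-managed fields creationTimestamp, resourceVersion, uid, and selfLink. Because it works line by line, it would also remove nested fields with those names (for example, a uid under ownerReferences). That is harmless for this IngressClass but worth checking for other objects. The kubectl context names in the usage comments assume the defaults created by `az aks get-credentials`.

```shell
# Hypothetical helper: reads a YAML manifest on stdin and drops the
# server-managed metadata fields so the object can be created elsewhere.
clean_k8s_yaml() {
  grep -Ev '^[[:space:]]*(creationTimestamp|resourceVersion|uid|selfLink):'
}

# Usage (context names are assumptions):
#   kubectl --context cloudbees-main get ingressclass nginx -o yaml | clean_k8s_yaml > ingressclass.yaml
#   kubectl --context cloudbees-dr create -f ingressclass.yaml
```

This export has to run while cloudbees-main still exists, as in the session shown next.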

Before restoring the cloudbees-core namespace in the cloudbees-dr cluster, we need to create that IngressClass there. To do so, we export the IngressClass definition from the cloudbees-main cluster to a YAML file, "clean" the YAML of UIDs and state-related fields, and create it in the cloudbees-dr cluster:

$ kubectl cluster-info
Kubernetes control plane is running at https://cloudbees--westus-cloudbees-b2896f-6566be8e.hcp.westus.azmk8s.io:443
CoreDNS is running at https://cloudbees--westus-cloudbees-b2896f-6566be8e.hcp.westus.azmk8s.io:443/api/v1/namespaces/kube-...
Metrics-server is running at https://cloudbees--westus-cloudbees-b2896f-6566be8e.hcp.westus.azmk8s.io:443/api/v1/namespaces/kube-...

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get nodes
NAME                                STATUS   ROLES   AGE   VERSION
aks-nodepool1-11120100-vmss000000   Ready    agent   26h   v1.21.9
aks-nodepool1-11120100-vmss000001   Ready    agent   26h   v1.21.9
aks-nodepool1-11120100-vmss000002   Ready    agent   26h   v1.21.9

$ kubectl get namespaces
NAME              STATUS   AGE
default           Active   26h
kube-node-lease   Active   26h
kube-public       Active   26h
kube-system       Active   26h
trident           Active   23h

$ cat ingressclass.yaml
apiVersion: v1
items:
- apiVersion: networking.k8s.io/v1
  kind: IngressClass
  metadata:
    annotations:
      meta.helm.sh/release-name: cloudbees-core
      meta.helm.sh/release-namespace: cloudbees-core
    generation: 1
    labels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: cloudbees-core
      app.kubernetes.io/managed-by: Helm
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/version: 1.1.0
      helm.sh/chart: ingress-nginx-4.0.13
    name: nginx
  spec:
    controller: k8s.io/ingress-nginx
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

$ kubectl create -f ingressclass.yaml
ingressclass.networking.k8s.io/nginx created

$ kubectl get ingressclass
NAME    CONTROLLER             PARAMETERS   AGE
nginx   k8s.io/ingress-nginx   <none>       60s

Now, let's destroy the cloudbees-main cluster to simulate our disaster!

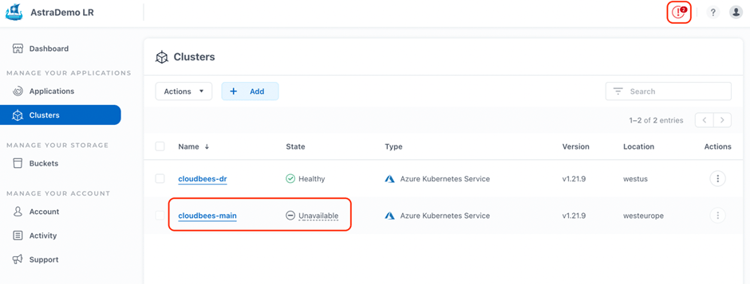

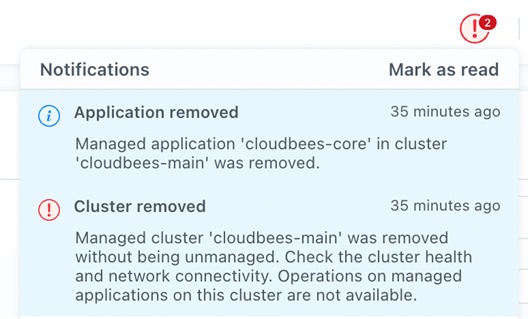

After a couple of minutes, the Clusters section of the ACS console shows that the cloudbees-main cluster is unavailable, and critical notifications about it appear:

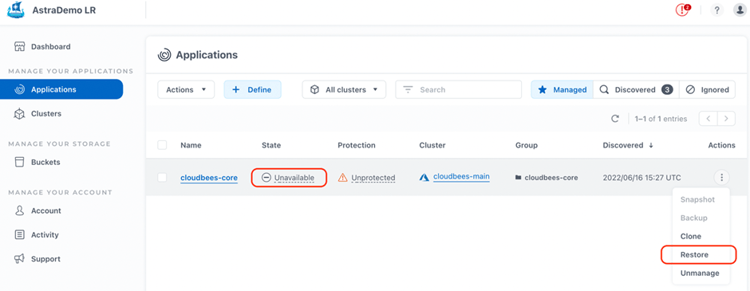

To recover our CloudBees CI application in the cloudbees-core namespace, we return to the "Managed Applications" section, where the application state is "Unavailable", and select Restore in the Actions panel:

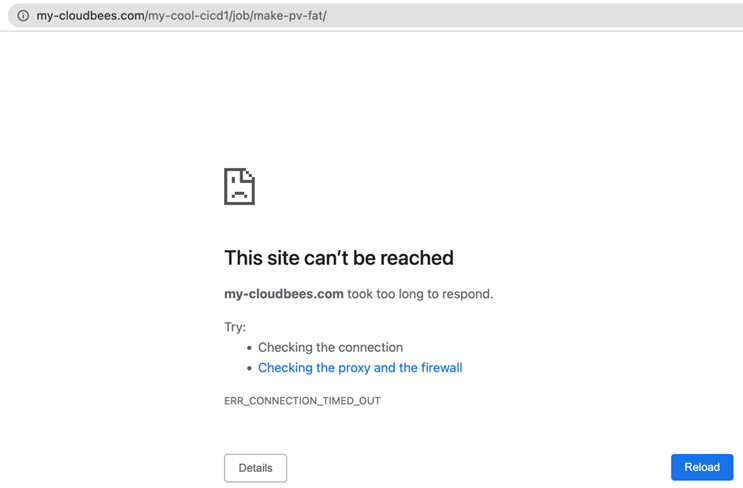

Note that the pipelines under my-cloudbees.com are no longer reachable:

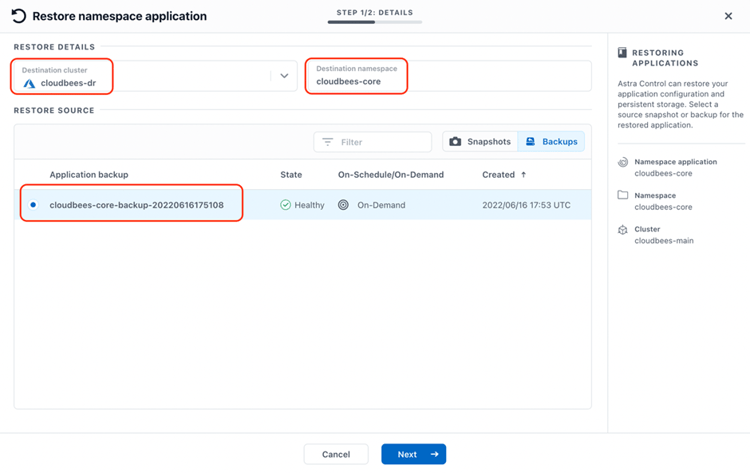

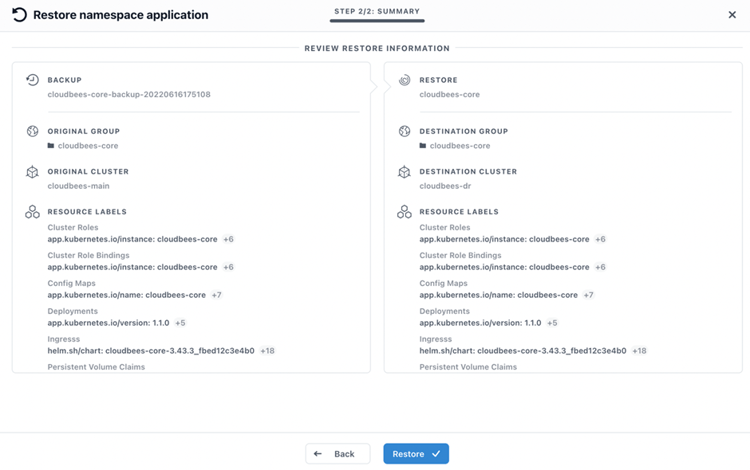

In the Restore wizard, we select cloudbees-dr as the "Destination cluster", specify cloudbees-core as the "Destination namespace" (the same namespace as in the original cluster), and choose the backup to restore from.

In the last step, a summary of the Restore operation shows the source and destination clusters and ALL the resources that will be restored in the destination namespace.

Monitoring the restore in the cloudbees-dr AKS cluster, we can see that the PVCs have been created. They use the same StorageClass if it is available in the destination cluster; otherwise, the default StorageClass is used. Restore Jobs (r-xxx) are copying the data from the object storage bucket that holds the backup into the PVCs:

$ kubectl get namespaces
NAME              STATUS   AGE
cloudbees-core    Active   12m
default           Active   28h
kube-node-lease   Active   28h
kube-public       Active   28h
kube-system       Active   28h
trident           Active   25h

$ kubectl -n cloudbees-core get pvc,all,ing
NAME                                                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS              AGE
persistentvolumeclaim/jenkins-home-cjoc-0            Bound    pvc-5a35f848-04a1-46ac-a606-36a82823487d   100Gi      RWO            netapp-anf-perf-premium   12m
persistentvolumeclaim/jenkins-home-my-cool-cicd1-0   Bound    pvc-a44dd9a3-4ac3-4805-9906-5dedc1728c2c   150Gi      RWO            netapp-anf-perf-premium   12m
persistentvolumeclaim/jenkins-home-my-cool-cicd2-0   Bound    pvc-aead74aa-9c35-4a27-9c18-8944236bd793   100Gi      RWO            netapp-anf-perf-premium   12m
persistentvolumeclaim/jenkins-home-my-cool-cicd3-0   Bound    pvc-3dab7d86-8d1d-4695-aa35-1cd45c284d60   125Gi      RWO            netapp-anf-perf-premium   12m
persistentvolumeclaim/jenkins-home-my-cool-cicd4-0   Bound    pvc-49aa2aac-2d05-4f24-9fe5-fa3f31857775   175Gi      RWO            netapp-anf-perf-premium   12m

NAME                                       READY   STATUS      RESTARTS   AGE
pod/r-jenkins-home-cjoc-0-jqdrc            0/1     Completed   0          12m
pod/r-jenkins-home-my-cool-cicd1-0-blb6g   1/1     Running     0          12m
pod/r-jenkins-home-my-cool-cicd2-0-sn587   0/1     Completed   0          12m
pod/r-jenkins-home-my-cool-cicd3-0-wp694   0/1     Completed   0          12m
pod/r-jenkins-home-my-cool-cicd4-0-kfvl7   0/1     Completed   0          12m

NAME                                       COMPLETIONS   DURATION   AGE
job.batch/r-jenkins-home-cjoc-0            1/1           5m7s       12m
job.batch/r-jenkins-home-my-cool-cicd1-0   0/1           12m        12m
job.batch/r-jenkins-home-my-cool-cicd2-0   1/1           5m50s      12m
job.batch/r-jenkins-home-my-cool-cicd3-0   1/1           4m38s      12m
job.batch/r-jenkins-home-my-cool-cicd4-0   1/1           4m34s      12m

When the restore jobs finish, ACS creates all the remaining resources in the cloudbees-core namespace of the cloudbees-dr AKS cluster:

$ kubectl -n cloudbees-core get pvc,all,ing

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/jenkins-home-cjoc-0 Bound pvc-65fe1d2a-0a14-4999-bc42-17cbe0bf68f8 100Gi RWO netapp-anf-perf-premium 7h22m

persistentvolumeclaim/jenkins-home-my-cool-cicd1-0 Bound pvc-56d7c09f-f0a3-4f11-9bcc-e72cbdf56282 150Gi RWO netapp-anf-perf-premium 7h22m

persistentvolumeclaim/jenkins-home-my-cool-cicd2-0 Bound pvc-5dd08471-c8d3-418c-a53a-a3bedd5de074 100Gi RWO netapp-anf-perf-premium 7h22m

persistentvolumeclaim/jenkins-home-my-cool-cicd3-0 Bound pvc-03267e3f-f112-4373-ada9-f01c6317394e 125Gi RWO netapp-anf-perf-premium 7h22m

persistentvolumeclaim/jenkins-home-my-cool-cicd4-0 Bound pvc-2cfaa826-65d9-46b6-b686-b18e6ebd7e42 175Gi RWO netapp-anf-perf-premium 7h22m

NAME READY STATUS RESTARTS AGE

pod/cjoc-0 1/1 Running 0 6h40m

pod/cloudbees-core-ingress-nginx-controller-684dfdf69-dwp48 1/1 Running 0 6h40m

pod/my-cool-cicd1-0 1/1 Running 0 6h43m

pod/my-cool-cicd2-0 1/1 Running 0 6h42m

pod/my-cool-cicd3-0 1/1 Running 0 6h43m

pod/my-cool-cicd4-0 1/1 Running 0 6h40m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cjoc ClusterIP 10.0.132.172 <none> 80/TCP,50000/TCP 6h43m

service/cloudbees-core-ingress-nginx-controller LoadBalancer 10.0.98.27 20.228.87.215 80:30049/TCP,443:32544/TCP 6h42m

service/cloudbees-core-ingress-nginx-controller-admission ClusterIP 10.0.124.195 <none> 443/TCP 6h43m

service/my-cool-cicd1 ClusterIP 10.0.208.211 <none> 80/TCP,50001/TCP 6h42m

service/my-cool-cicd2 ClusterIP 10.0.206.83 <none> 80/TCP,50002/TCP 6h40m

service/my-cool-cicd3 ClusterIP 10.0.119.29 <none> 80/TCP,50003/TCP 6h43m

service/my-cool-cicd4 ClusterIP 10.0.211.240 <none> 80/TCP,50004/TCP 6h42m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cloudbees-core-ingress-nginx-controller 1/1 1 1 6h40m

NAME DESIRED CURRENT READY AGE

replicaset.apps/cloudbees-core-ingress-nginx-controller-684dfdf69 1 1 1 6h40m

NAME READY AGE

statefulset.apps/cjoc 1/1 6h40m

statefulset.apps/my-cool-cicd1 1/1 6h43m

statefulset.apps/my-cool-cicd2 1/1 6h42m

statefulset.apps/my-cool-cicd3 1/1 6h43m

statefulset.apps/my-cool-cicd4 1/1 6h40m

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/cjoc nginx * 20.228.87.215 80 6h40m

ingress.networking.k8s.io/my-cool-cicd1 nginx * 20.228.87.215 80 6h40m

ingress.networking.k8s.io/my-cool-cicd2 nginx * 20.228.87.215 80 6h39m

ingress.networking.k8s.io/my-cool-cicd3 nginx * 20.228.87.215 80 6h39m

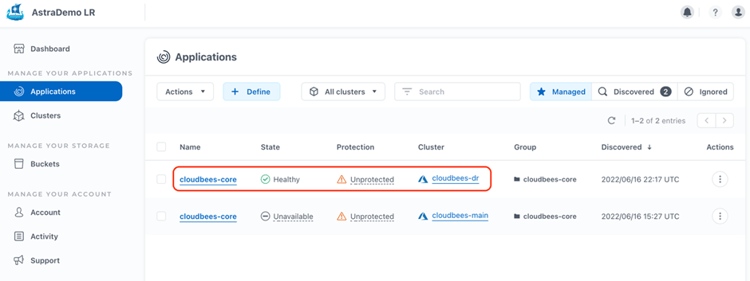

ingress.networking.k8s.io/my-cool-cicd4 nginx * 20.228.87.215 80 6h39m As we can see, all Kubernetes resources have been restored. In the ACS console, within the "Managed Applications" tab, a new managed namespace cloudbees-core has appeared in the cloudbees-dr AKS cluster.
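While the r-xxx restore Jobs run, progress can be followed with `kubectl wait` instead of repeated `kubectl get` calls. This is a sketch with a hypothetical function name, and it has two limitations. The StatefulSet loop only sees the StatefulSets that ACS has already created, so it may need to be run again once the Jobs have finished. Also, `kubectl wait --all` reports an error if no Job exists in the namespace, either yet or anymore.

```shell
# Hypothetical helper: waits for the restore Jobs, then for each StatefulSet.
wait_for_restore() {
  ns=${1:-cloudbees-core}
  # Block until every Job in the namespace reports Complete (max. 30 minutes)
  kubectl --namespace "$ns" wait --for=condition=complete job --all --timeout=30m || return 1
  # Then wait for each StatefulSet that exists so far (CJOC and the controllers)
  for sts in $(kubectl --namespace "$ns" get statefulsets --output name); do
    kubectl --namespace "$ns" rollout status "$sts" --timeout=10m || return 1
  done
}
```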

All restored or cloned applications are automatically managed by ACS. This means we can immediately start protecting the restored application with snapshots and backups, so that we can later "fail back" to an AKS cluster in our preferred production region.

The Load Balancer in the restored namespace of the cloudbees-dr cluster has a different public IP address. The last step of our disaster recovery is therefore to update the my-cloudbees.com entry in our corporate DNS so that it points to this new address. In our case, we do that in the local /etc/hosts:

$ sudo vi /etc/hosts
Password:

$ cat /etc/hosts
##
# Host Database
#
# localhost is used to configure the loopback interface
# when the system is booting. Do not change this entry.
##
127.0.0.1 localhost
255.255.255.255 broadcasthost
::1 localhost
#20.126.199.140 my-cloudbees.com
20.228.87.215 my-cloudbees.com

Now we can reach our CloudBees CI controllers again and check the status of the controllers and pipelines.

All the controllers are up and healthy:

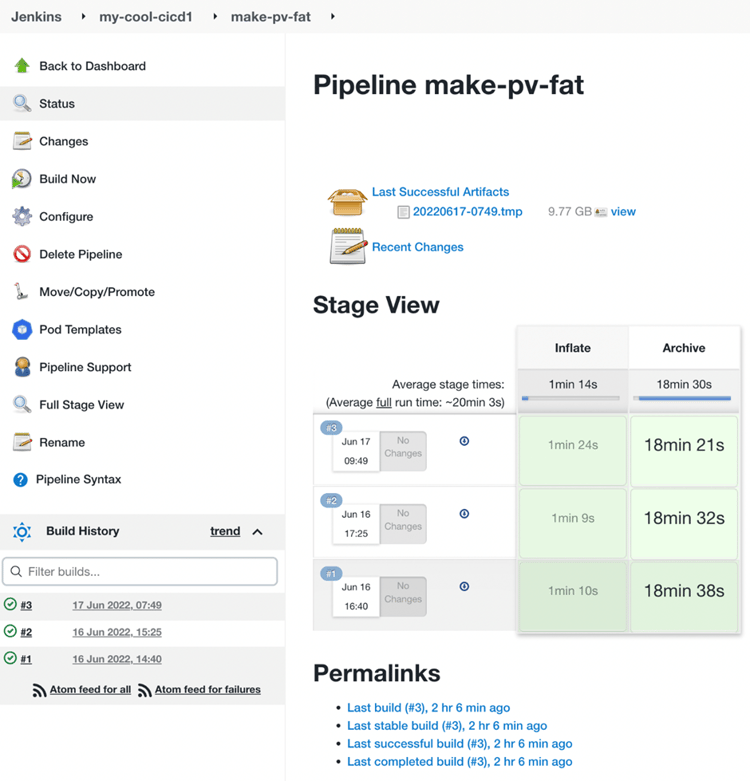

Our my-cool-cicd1 controller still shows the 2 builds executed for the make-pv-fat pipeline.

Finally, we run another build of make-pv-fat, build #3, to check that Jenkins is fully operational.

All our developers can resume their work after successful Disaster Recovery of all Jenkins controllers and the entire CloudBees CI platform.
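The final reachability check can also be done from a terminal. This is a minimal sketch with hypothetical function names. It probes the /login page of CJOC and of each controller under my-cloudbees.com and prints the HTTP status, relying on the updated /etc/hosts entry to resolve the name. A 200, or a redirect when a controller delegates authentication to CJOC, indicates that the path is being served again.

```shell
# Hypothetical helper: prints the /login URL for each given path under a domain.
controller_urls() {
  domain=$1
  shift
  for c in "$@"; do
    printf 'http://%s/%s/login\n' "$domain" "$c"
  done
}

# Hypothetical helper: prints "<HTTP status> <URL>" for each of those URLs.
check_controllers() {
  controller_urls "$@" | while read -r url; do
    code=$(curl --silent --output /dev/null --write-out '%{http_code}' "$url")
    printf '%s %s\n' "$code" "$url"
  done
}

# Usage:
#   check_controllers my-cloudbees.com cjoc my-cool-cicd1 my-cool-cicd2 my-cool-cicd3 my-cool-cicd4
```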

Summary

In this article, we described how to make CloudBees CI, which manages Jenkins instances at massive scale, resilient to disasters when it runs on AKS with Azure NetApp Files, enabling business continuity for the platform. NetApp® Astra™ Control makes it easy to protect business-critical AKS workloads (stateful and stateless) with just a few clicks. Get started with Astra Control Service today with a free plan.