Scalability is one of the key benefits offered by the cloud, especially for storage solutions. Cloud Volumes ONTAP, the storage management solution offered by NetApp in AWS, Azure, and Google Cloud, takes this one step further by allowing you to scale your available cloud storage capacity into the petabytes, all while using a single compute instance.

This blog will explain how to enable this capability and describe the use cases best suited to the increased storage capacity:

- What Is Cloud Scalability?

- What Makes Scalability Challenging?

- Extend Cloud Storage Limits with Cloud Volumes ONTAP

- Setting Up Increased Cloud Storage Capacity with Cloud Volumes ONTAP

- Customer Case Studies

What Is Cloud Scalability?

Cloud scalability is the capability of adding more compute, storage, or networking resources for your application workloads on demand. It is one of the cornerstones of the cloud proposition: the cloud can scale, data centers can’t (at least not without lots of planning, CAPEX spending, and a long delivery window).

There are two types of scaling—vertical scaling and horizontal scaling:

- Vertical scaling allows you to add resources to existing instances, such as increasing the number of CPU cores or number of disks in an existing virtual machine.

- Horizontal scaling is where additional instances are added to handle increased workload, such as increasing the number of VMs or containers whenever demand increases.

But simply saying the cloud offers infinite scalability is a bit of an overstatement. The reality is that even in the cloud, scaling has limits.

What Makes Scalability Challenging?

Though cloud storage is scalable, the capacity is not infinite. There are certain service-related limits associated with cloud storage scalability, especially when it comes to disk-based block storage.

Challenge 1: Compute Has to Scale Up with Storage

The number of disks that can be attached to a VM and the maximum capacity for these disks will be defined by the instance type being used. Consider the following examples:

- In Google Cloud, you can attach up to 128 Persistent Disks to both custom and predefined machine types. However, the total disk capacity that can be attached to any single Compute Engine instance is capped at 257 TB.

- AWS EBS volumes have a limit of 16 TB per volume. Hence a C5, M5, or R5 instance with 21 attached volumes is limited to a maximum capacity of 336 TB (21 × 16 TB) per Amazon EC2 instance.

- Azure VMs also limit the number of disks that can be attached, depending on the VM size being used. For example, a single DS2_v2 instance supports up to eight data disks, each with a maximum size of 32 TB, so that VM’s maximum storage capacity is capped at 256 TB (8 × 32 TB).
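The per-VM ceilings above all follow the same arithmetic: the number of attachable disks times the largest disk size, further capped by any per-instance limit the provider imposes. Here is a minimal Python sketch of that calculation using the figures quoted above. The 64 TB per-disk size passed for Google Cloud is our assumption for illustration; only the 257 TB aggregate cap comes from the text.

```python
from typing import Optional


def per_vm_block_ceiling_tb(max_disks: int, max_disk_tb: float,
                            aggregate_cap_tb: Optional[float] = None) -> float:
    """Upper bound on the block storage a single VM can attach.

    Disk count times maximum disk size, limited further by any
    provider-wide per-instance cap when one exists.
    """
    raw = max_disks * max_disk_tb
    if aggregate_cap_tb is not None:
        return min(raw, aggregate_cap_tb)
    return raw


# AWS and Azure figures come from the examples above.
# ASSUMPTION: 64 TB per Persistent Disk is illustrative; only the
# 257 TB per-instance cap for Google Cloud is taken from the text.
limits = {
    "AWS (21 x 16 TB EBS)": per_vm_block_ceiling_tb(21, 16),
    "Azure DS2_v2 (8 x 32 TB)": per_vm_block_ceiling_tb(8, 32),
    "Google Cloud (128 PDs, 257 TB cap)": per_vm_block_ceiling_tb(128, 64, 257),
}

for name, tb in limits.items():
    print(f"{name}: {tb} TB")
```

Note that every one of these single-VM ceilings lands below 368 TB.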

If you need to scale beyond the disk capacity supported by the single virtual machine you’re using, you need to stack additional virtual machines to increase the capacity. That means additional management overhead, data sync issues and costs.

Bottom line: increased costs and a risk of potential data loss.

As the data estate expands, the whole process becomes more cumbersome. If you add more VMs to meet the storage capacity demands of your application, the compute cost of the VMs also gets added to your cloud bill, thereby reducing the ROI on using the cloud.
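To see why stacking VMs scales poorly, consider a quick sketch. The number of VMs needed is the target capacity divided by the per-VM ceiling, rounded up, and each of those VMs adds compute cost. The 1,000 TB target is a hypothetical example; the 336 TB ceiling is the EC2 figure quoted earlier.

```python
import math


def vms_needed(target_tb: float, per_vm_ceiling_tb: float) -> int:
    """Minimum number of VMs whose attached disks can hold target_tb."""
    if per_vm_ceiling_tb <= 0:
        raise ValueError("per-VM ceiling must be positive")
    return math.ceil(target_tb / per_vm_ceiling_tb)


# Hypothetical 1,000 TB target on EC2 instances capped at 336 TB each.
print(vms_needed(1000, 336))  # 3 VMs, each adding compute to the bill
```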

This is clearly not a scalable solution for applications whose storage requirements will grow over time. Another important point to note is that there is no native option to tier the data from your disks to much less expensive and more scalable cloud object storage. If your data is in block storage, it stays in block storage.

Challenge 2: What Happens In Block Storage Stays In Block Storage

Even as the data used by the application grows, a major chunk of it may be “cold data” that applications rarely access. With disk storage attached to VMs, all of the hot and cold data resides on the disks, incurring higher storage costs. This calls for a storage management system that can reduce the complexity and enable scalability beyond the cloud storage limits.

Extend Cloud Storage Limits with Cloud Volumes ONTAP

Cloud Volumes ONTAP is an enterprise-class storage management solution from NetApp that lets customers leverage the benefits of the trusted ONTAP storage platform in the cloud. As a hybrid solution, it helps manage on-premises and cloud storage across providers from a single control plane, with support for HA, data protection, storage efficiencies, enhanced performance, file services, hybrid and multicloud, data mobility, cloud automation, and more.

But can Cloud Volumes ONTAP storage scale?

For years, Cloud Volumes ONTAP has been packaged with a maximum storage capacity of 368 TB per instance, but there’s now a way to scale your storage up to 2-3 PB, making these cloud scalability challenges a thing of the past.

Here’s how.

How Cloud Volumes ONTAP Increases Cloud Storage Capacity Into the Petabytes

The way that Cloud Volumes ONTAP storage scales up into the PBs is a combination of two factors:

- Tiering infrequently used data from block storage to object storage

- Stacking Cloud Volumes ONTAP licenses without additional compute

Let’s take a look at them both.

Tiering Cold Data to Object Storage

Cloud Volumes ONTAP enables cloud scalability by tiering inactive data to low-cost object storage in all the hyperscalers.

In this setup, cloud-based block storage is used as the performance tier to store active, hot data while infrequently accessed, cold data is automatically and seamlessly tiered to a capacity tier on cloud object storage. Tiering data this way both saves space in the primary storage and increases scalability by effectively leveraging cloud object storage.
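The hot/cold split can be pictured as a simple rule: data that has not been read within a cooling period moves to the capacity tier. The sketch below is a hypothetical, simplified model of that idea, not ONTAP’s actual tiering engine. The `Block` type, the 31-day cooling period, and the sample data are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class Block:
    # Hypothetical unit of data with its last read time.
    block_id: str
    size_gb: float
    last_access: datetime


def split_tiers(blocks: List[Block], now: datetime,
                cooling_days: int = 31) -> Tuple[List[Block], List[Block]]:
    """Split blocks into a hot (block storage) and a cold (object storage) tier.

    ASSUMPTION: a block counts as cold once it has not been read for
    `cooling_days`; the 31-day default is illustrative only.
    """
    cutoff = now - timedelta(days=cooling_days)
    hot = [b for b in blocks if b.last_access >= cutoff]
    cold = [b for b in blocks if b.last_access < cutoff]
    return hot, cold


now = datetime(2024, 1, 31)
blocks = [
    Block("a", 10, now - timedelta(days=2)),
    Block("b", 500, now - timedelta(days=90)),
    Block("c", 40, now - timedelta(days=45)),
]
hot, cold = split_tiers(blocks, now)
print("performance tier:", [b.block_id for b in hot])  # ['a']
print("capacity tier:", [b.block_id for b in cold])    # ['b', 'c']
```

In this toy example, 540 GB of the 550 GB total would leave the disks, which is the kind of shift that frees up block storage for active data.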

The advantage of the process is twofold:

- Tiering data helps overcome the maximum limits typically associated with block storage, i.e., the number of disks that can be attached to a VM instance. Because cold data moves off the disks, more block storage remains available for active data.

- Object storage scales into the petabytes, far beyond the per-VM limits of block storage.
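The effect on disk limits comes down to simple arithmetic. If only the hot share of the data has to sit on disks, the total data a VM can hold is its disk ceiling divided by the hot fraction. Here is a rough sketch that assumes a 75% cold fraction for illustration and deliberately ignores license capacity limits and storage overheads.

```python
def max_data_tb(block_ceiling_tb: float, cold_fraction: float) -> float:
    """Total data that fits when only the hot share must live on disks.

    ASSUMPTION: ignores license capacity limits and metadata overhead.
    """
    if not 0 <= cold_fraction < 1:
        raise ValueError("cold_fraction must be in [0, 1)")
    return block_ceiling_tb / (1 - cold_fraction)


# A 256 TB disk ceiling (the Azure DS2_v2 example) with 75% cold data.
print(max_data_tb(256, 0.75))  # 1024.0
```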

Stacking Cloud Volumes ONTAP Licenses

The maximum capacity supported by Cloud Volumes ONTAP depends on the license being used, with a ceiling of 368 TB per license. As discussed previously, cloud provider limits on the number of disks that can be attached to a VM instance already keep block storage on a single VM below that figure. Now you have an option to go beyond the 368 TB ceiling by stacking additional BYOL licenses on the same Cloud Volumes ONTAP instance.

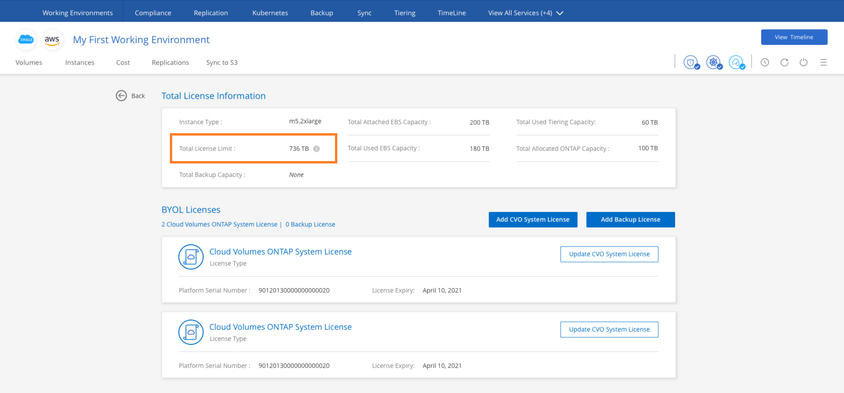

How does this work? Consider this: two Cloud Volumes ONTAP BYOL licenses increase the capacity to 736 TB (2 × 368 TB), and four licenses scale it to 1,472 TB, roughly 1.4 PB.
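Because each BYOL license adds a fixed 368 TB, you can compute both the stacked capacity and the number of licenses needed for a target directly. Here is a minimal sketch using the 368 TB per-license figure from this article. The function names are hypothetical.

```python
import math

# Per-license capacity as stated in this article.
LICENSE_CAPACITY_TB = 368


def stacked_capacity_tb(licenses: int) -> int:
    """Total capacity when `licenses` BYOL licenses share one instance."""
    return licenses * LICENSE_CAPACITY_TB


def licenses_needed(target_tb: float) -> int:
    """Smallest number of stacked licenses covering target_tb."""
    return math.ceil(target_tb / LICENSE_CAPACITY_TB)


for n in (1, 2, 4):
    print(n, "license(s):", stacked_capacity_tb(n), "TB")

print(licenses_needed(1000))  # 3
```

For example, a 1,000 TB requirement needs at least three stacked licenses.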

Remember that by using NetApp’s data tiering technology, object storage is being leveraged for infrequently used data. Stacking BYOL licenses extends the total capacity available, further expanding the amount of storage available for a single compute instance.

Setting Up Increased Cloud Storage Capacity with Cloud Volumes ONTAP

Below we’ll show you how to stack BYOL licenses directly from the Cloud Manager interface.

Note: Tiering should be enabled for the Cloud Volumes ONTAP instance as a prerequisite to make use of the additional capacity.
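At the volume level, ONTAP uses a tiering policy to decide which data moves to object storage. The hedged sketch below builds, but does not send, an ONTAP REST API request that sets a volume’s `tiering.policy` to `auto`. The host name, volume UUID, and credentials are hypothetical placeholders. In practice, Cloud Manager may handle tiering setup for you, so check the ONTAP REST API reference for your release before relying on this.

```python
import base64
import json
import urllib.request


def build_tiering_request(host: str, volume_uuid: str, policy: str,
                          user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a PATCH that sets a volume's tiering policy."""
    if policy not in {"none", "snapshot-only", "auto", "all"}:
        raise ValueError(f"unexpected tiering policy: {policy}")
    body = json.dumps({"tiering": {"policy": policy}}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{host}/api/storage/volumes/{volume_uuid}",
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
    )


# Hypothetical host, UUID, and credentials; replace with your own.
req = build_tiering_request("cvo.example.com", "VOLUME-UUID", "auto",
                            "admin", "secret")
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would send it; configure TLS for your setup.
```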

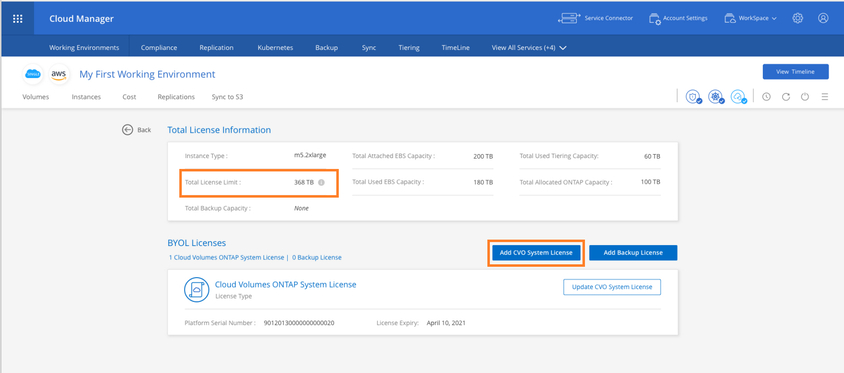

1. From the Cloud Manager interface, select the Cloud Volumes ONTAP instance and click “Licenses” in the hamburger menu. You can see that one license is currently added and the total available capacity is 368 TB. Click “Add CVO system license” to add another BYOL license:

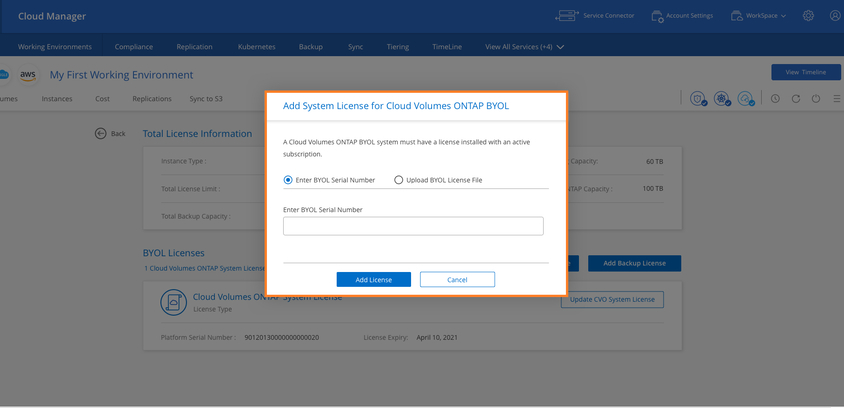

2. You will get an option to enter either the BYOL license key or upload the license file:

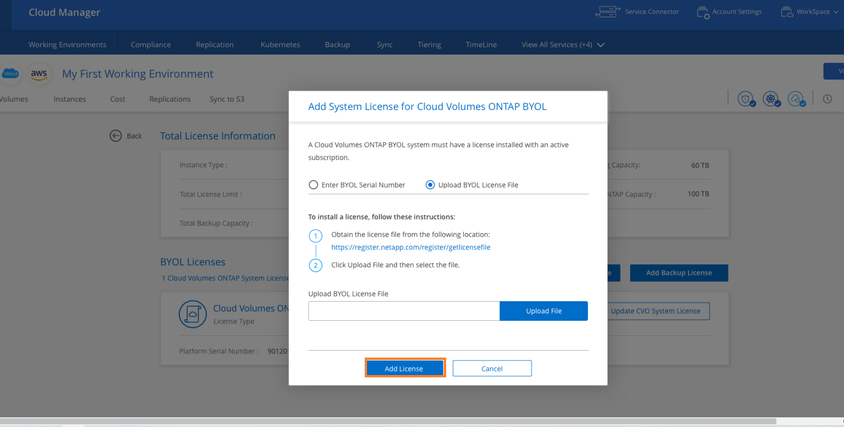

3. Click add license to complete the process.

4. You can see that the storage capacity has now increased to 736 TB through the additional stacked license.

Additional BYOL licenses can be added using the same process to further increase the capacity in increments of 368 TB with each new license.
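You can summarize the license-stacking steps as a small state model. An instance starts with one license, each added license contributes another 368 TB, and the extra capacity is only usable with tiering enabled. This is a hypothetical illustration, not Cloud Manager’s implementation. The class name, the placeholder license keys, the duplicate-key check, and the choice to refuse new licenses when tiering is off are all our assumptions.

```python
from typing import List


class CvoInstanceModel:
    """Hypothetical model of one Cloud Volumes ONTAP instance's license pool."""

    PER_LICENSE_TB = 368  # per-license capacity stated in this article

    def __init__(self, tiering_enabled: bool):
        self.tiering_enabled = tiering_enabled
        # Placeholder key for the license the instance was deployed with.
        self.license_keys: List[str] = ["initial-license"]

    @property
    def capacity_tb(self) -> int:
        return len(self.license_keys) * self.PER_LICENSE_TB

    def add_license(self, key: str) -> int:
        # ASSUMPTION: the model refuses extra licenses without tiering,
        # reflecting the prerequisite noted above.
        if not self.tiering_enabled:
            raise RuntimeError("enable tiering before stacking licenses")
        if key in self.license_keys:
            raise ValueError("license already added")
        self.license_keys.append(key)
        return self.capacity_tb


cvo = CvoInstanceModel(tiering_enabled=True)
print(cvo.capacity_tb)            # 368
print(cvo.add_license("byol-2"))  # 736
print(cvo.add_license("byol-3"))  # 1104
```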

Note that there is no additional compute cost involved here, as the license is added to an existing Cloud Volumes ONTAP instance. In addition to those savings, your storage capacity can increase beyond the original 368 TB limit by leveraging object storage. This helps to reduce the TCO and overcome the cloud provider block storage limits.

Even better, stacking BYOL licenses is supported in all three leading clouds where Cloud Volumes ONTAP runs: AWS, Azure, and Google Cloud.

Considerations and Use Cases

While additional BYOL licenses help to scale storage capacity, keep in mind that the additional capacity resides in object storage.

This feature works best in use cases where the data will most likely not need to be reheated all at once. Suitable use cases include archival data, backups, and compliance-related data that must be retained for long periods of time.

Customer Case Studies with Cloud Volumes ONTAP

Many organizations are already reaping the benefits of extended scalability offered by Cloud Volumes ONTAP. Let’s see how in these three case studies.

Expanding Storage to Over 1 PB at a Publicly Traded Fortune 500 Construction Company

A public Fortune 500 construction company from the US that specializes in the construction of luxury homes was undertaking a cloud migration. As existing NetApp users, they turned to Cloud Volumes ONTAP as it integrated the cloud with their existing systems seamlessly.

The initial use case was shifting their on-premises tape backup solution to backup stored in the cloud. This was a large amount of data: around 1 PB. They leveraged Cloud Volumes ONTAP for this transition and migrated backup data to AWS through NetApp’s SnapMirror® replication. Scaling beyond Cloud Volumes ONTAP’s 368 TB per-license limit to meet the 1,000 TB storage requirement was achieved by stacking additional BYOL licenses.

- Shifted backup data from the data center to the cloud.

- 1000 TB footprint using multiple Cloud Volumes ONTAP licenses.

A Canadian Multinational Insurance Company Brings Hundreds of TBs to the Cloud

A Canadian multinational insurance company with over one trillion Canadian dollars in assets and 26 million customers across the world has embarked on its cloud adoption journey with Azure as its cloud of choice.

This company turned to Cloud Volumes ONTAP as a centralized storage management solution to carry out its migration, manage its cloud file share data, increase its DevOps agility, and optimize costs. The company was able to manage hundreds of TBs of data in the cloud at the scale offered by Cloud Volumes ONTAP.

- Centralized storage for their entire deployment.

- Scale for hundreds of TBs in the cloud.

- Tiered archives, log files, and DR to Azure Blob, greatly minimizing costs.

Read more about how Cloud Volumes ONTAP benefits the finance industry.

Semiconductor Manufacturer Migrates DR and Dev/Test to Azure

This company manufactures semiconductors, with operations that span 16 countries from its headquarters in Europe. It currently supplies the majority of the integral parts for semiconductor circuits in use worldwide, with a customer base that includes some of the largest tech giants in the electronics industry.

As a long-time NetApp storage user, this company was looking for a way to reduce costs, and targeted their DR data center as an ideal candidate to move to Azure. Cloud Volumes ONTAP provided the scale to migrate this massive DR data set—3.2 PB—to Azure, where it could be cost-effectively stored using object storage in Azure Blob. It also gave them hybrid manageability to seamlessly integrate their existing on-prem systems with the new cloud environment, which will be leveraged for dev/test purposes.

- Seamless migration of 3.2 PB of data

- Tiering infrequently used data to object storage

- Cost-effective dev/test and training operations in the cloud with SnapMirror® copies

- Potential cloud bursting with NetApp FlexCache for HPC workloads

Conclusion

Cloud scalability is one of the key considerations for moving data to the cloud, and Cloud Volumes ONTAP helps overcome the limitations of cloud service providers by combining its ability to tier data to object storage with the stacking of BYOL licenses. Organizations with large data estates, especially archival and backup data, can use this capability to meet growing cloud storage capacity demands.